Hello,

I have two identical GPU, Nvidia 1050 TI.

I managed to follow explanation to passthrough my GPU to my VM thanks to

https://pve.proxmox.com/wiki/PCI(e)_Passthrough

https://pve.proxmox.com/wiki/Pci_passthrough

https://gist.github.com/qubidt/64f617e959725e934992b080e677656f

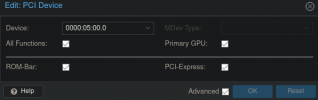

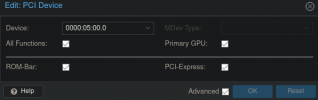

and I added the PCI device to each VM like this

Both VM are working nicely with the GPU I indicate but not simultaneously.

If one VM is started and I launch the second one, then the first one stops immediately.

Is it because both GPU have the same vendor and device IDs so I hav created only one file /etc/modprobe.d/vfio.conf with only this content

?

I modify GRUB the minimum possible for now :

GPU are isolated in different iommu groups, group 14 and group 18.

Thank you in advance for your help.

Best regards,

Thatoo

I have two identical GPU, Nvidia 1050 TI.

I managed to follow explanation to passthrough my GPU to my VM thanks to

https://pve.proxmox.com/wiki/PCI(e)_Passthrough

https://pve.proxmox.com/wiki/Pci_passthrough

https://gist.github.com/qubidt/64f617e959725e934992b080e677656f

cat /etc/modprobe.d/vfio.conf

options vfio-pci ids=10de:1c82,10de:0fb9 disable_vga=1and I added the PCI device to each VM like this

Both VMs work fine with the GPU I assigned to them, but not at the same time.

If one VM is running and I start the second one, the first one stops immediately.

Could it be because both GPUs have the same vendor and device IDs, so I created only one file, /etc/modprobe.d/vfio.conf, with just this content?

```
options vfio-pci ids=10de:1c82,10de:0fb9 disable_vga=1
```
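The `ids=` option of vfio-pci matches devices by vendor:device ID, so a single entry should apply to every card carrying that ID. A minimal Python sketch of that matching, assuming the address-to-ID mapping below (inferred from the IOMMU listing and the IDs in vfio.conf, not from `lspci` output shown in this post); the `8086:3e92` entry is purely hypothetical and only there as a non-matching device:

```python
# Sketch: which PCI functions would an "options vfio-pci ids=..." line match?
# Assumption: the address -> (vendor, device) mapping in __main__ is hypothetical;
# on a real host it would come from `lspci -nn`.


def parse_vfio_ids(options_line: str) -> set[tuple[str, str]]:
    """Extract (vendor, device) pairs from an 'options vfio-pci ids=...' line."""
    for token in options_line.split():
        if token.startswith("ids="):
            pairs = set()
            for entry in token[len("ids="):].split(","):
                # This sketch ignores optional subvendor/subdevice/class fields.
                vendor, device = entry.split(":")[:2]
                pairs.add((vendor.lower(), device.lower()))
            return pairs
    return set()


def claimed_devices(options_line: str, devices: dict[str, tuple[str, str]]) -> list[str]:
    """Return the sorted PCI addresses whose (vendor, device) appears in ids=."""
    wanted = parse_vfio_ids(options_line)
    return sorted(addr for addr, ids in devices.items() if ids in wanted)


if __name__ == "__main__":
    line = "options vfio-pci ids=10de:1c82,10de:0fb9 disable_vga=1"
    # Hypothetical mapping for two identical GTX 1050 Ti cards (GPU + HDMI audio).
    devices = {
        "0000:01:00.0": ("10de", "1c82"),
        "0000:01:00.1": ("10de", "0fb9"),
        "0000:05:00.0": ("10de", "1c82"),
        "0000:05:00.1": ("10de", "0fb9"),
        "0000:00:02.0": ("8086", "3e92"),  # hypothetical non-NVIDIA device
    }
    print(claimed_devices(line, devices))
```

With this mapping all four NVIDIA functions (both GPUs and both audio devices) match the one `ids=` line, which is why the IDs only need to be listed once.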

For now I have changed GRUB as little as possible:

```
GRUB_CMDLINE_LINUX_DEFAULT="quiet intel_iommu=on iommu=pt pci=noaer"
```

The GPUs are isolated in different IOMMU groups, group 14 and group 18:

```
root@dsqf:~# find /sys/kernel/iommu_groups/ -type l
/sys/kernel/iommu_groups/17/devices/0000:04:00.0
/sys/kernel/iommu_groups/7/devices/0000:00:16.0
/sys/kernel/iommu_groups/15/devices/0000:02:00.0
/sys/kernel/iommu_groups/5/devices/10000:e0:17.0
/sys/kernel/iommu_groups/5/devices/0000:00:0e.0
/sys/kernel/iommu_groups/13/devices/0000:00:1f.0
/sys/kernel/iommu_groups/13/devices/0000:00:1f.5
/sys/kernel/iommu_groups/13/devices/0000:00:1f.3
/sys/kernel/iommu_groups/13/devices/0000:00:1f.4
/sys/kernel/iommu_groups/3/devices/0000:00:08.0
/sys/kernel/iommu_groups/11/devices/0000:00:1c.3
/sys/kernel/iommu_groups/1/devices/0000:00:01.0
/sys/kernel/iommu_groups/18/devices/0000:05:00.1
/sys/kernel/iommu_groups/18/devices/0000:05:00.0
/sys/kernel/iommu_groups/8/devices/0000:00:17.0
/sys/kernel/iommu_groups/16/devices/0000:03:00.0
/sys/kernel/iommu_groups/6/devices/0000:00:14.2
/sys/kernel/iommu_groups/6/devices/0000:00:14.0
/sys/kernel/iommu_groups/14/devices/0000:01:00.0
/sys/kernel/iommu_groups/14/devices/0000:01:00.1
/sys/kernel/iommu_groups/4/devices/0000:00:0a.0
/sys/kernel/iommu_groups/12/devices/0000:00:1c.4
/sys/kernel/iommu_groups/2/devices/0000:00:02.0
/sys/kernel/iommu_groups/10/devices/0000:00:1c.2
/sys/kernel/iommu_groups/0/devices/0000:00:00.0
/sys/kernel/iommu_groups/9/devices/0000:00:1c.0
```

Thank you in advance for your help.

Best regards,

Thatoo
