Create an Ubuntu 24.04 LXC container.

I used the excellent tteck script, but you can use any other method you are comfortable with.

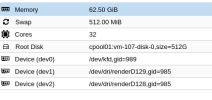

Give it generous storage, RAM and CPU (following Ollama's recommendations).

I chose 32 GB of RAM and all available cores. Give it enough storage to accommodate your models (it can be expanded later using the GUI).

I chose 60 GB to start. ROCm takes up ~30 GB.

In the LXC container:

Install ROCm according to the official instructions, but do not use DKMS, as the drivers are already installed on Proxmox by default.

This is the purpose of the --no-dkms flag.

Code:

wget https://repo.radeon.com/amdgpu-install/6.2.4/ubuntu/noble/amdgpu-install_6.2.60204-1_all.deb

sudo apt install ./amdgpu-install_6.2.60204-1_all.deb

amdgpu-install --usecase=rocm --no-dkms

Set the environment variables as discussed in this thread:

- for GCN 5th gen based GPUs and APUs HSA_OVERRIDE_GFX_VERSION=9.0.0

- for RDNA 1 based GPUs and APUs HSA_OVERRIDE_GFX_VERSION=10.1.0

- for RDNA 2 based GPUs and APUs HSA_OVERRIDE_GFX_VERSION=10.3.0

- for RDNA 3 based GPUs and APUs HSA_OVERRIDE_GFX_VERSION=11.0.0
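As a minimal sketch of how one of the values above could be applied, the snippet below maps a GPU family to its override value and exports it in the current shell. The function name `gfx_override_for` and the family labels are made up for illustration; only the version numbers come from the list above. Note that an export like this only affects processes started from the same shell, so a service would need the variable set in its own environment.

```shell
#!/bin/sh
# Hypothetical helper: print the HSA_OVERRIDE_GFX_VERSION value for a GPU family.
# The family labels (gcn5, rdna1, ...) are illustrative, not official names.
gfx_override_for() {
    case "$1" in
        gcn5)  echo "9.0.0" ;;
        rdna1) echo "10.1.0" ;;
        rdna2) echo "10.3.0" ;;
        rdna3) echo "11.0.0" ;;
        *)     echo "unknown GPU family: $1" >&2; return 1 ;;
    esac
}

# Example: an RDNA 2 GPU (assumption; pick the family that matches your hardware).
HSA_OVERRIDE_GFX_VERSION="$(gfx_override_for rdna2)"
export HSA_OVERRIDE_GFX_VERSION
echo "HSA_OVERRIDE_GFX_VERSION=$HSA_OVERRIDE_GFX_VERSION"
```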

Check which group IDs the render and video groups have in the container.

Code:

grep -w 'render\|video' /etc/group

On the Proxmox host:

Locate your AMD GPU's render device path to use in the next step:

Code:

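ls -l /sys/class/drm/renderD*/device/driver

In the Proxmox GUI, go to Options and set Device Passthrough.

Use the GID of the render group inside the container and leave the UID at 0.

Code:

/dev/kfd

Use the GID of the video group and leave the UID at 0.

Code:

/dev/dri/renderD***

Restart the container and proceed to install Ollama according to the instructions on the GitHub repo.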
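For reference, the Device Passthrough settings end up as `devN` entries in the container's config file on the host (`/etc/pve/lxc/<CTID>.conf`). The sketch below only prints what such entries could look like, assuming the `devN: <path>,gid=<gid>,uid=<uid>` format used by recent Proxmox VE releases. The helper name `dev_entry` and the GID values are placeholders, so compare the output with what the GUI actually wrote before relying on it.

```shell
#!/bin/sh
# Hypothetical helper: format one passthrough entry.
# Arguments: index, device path, GID inside the container. UID stays 0.
dev_entry() {
    printf 'dev%s: %s,gid=%s,uid=0\n' "$1" "$2" "$3"
}

# Placeholder GIDs (assumption): use the values reported inside your container.
RENDER_GID=993
VIDEO_GID=44

dev_entry 0 /dev/kfd "$RENDER_GID"
# Replace the *** with the render node number found on the host.
dev_entry 1 '/dev/dri/renderD***' "$VIDEO_GID"
```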

You can check on the host with radeontop whether your GPU is working and being used.

Code:

apt update && apt install radeontop
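Inside the container, a quick sanity check after the restart is to confirm that the passed-through device nodes exist and carry the expected numeric group IDs. This is only a sketch: the helper name `check_dev` is made up, and it says nothing about whether ROCm can actually use the GPU.

```shell
#!/bin/sh
# Hypothetical helper: report whether a device node exists and who owns it.
check_dev() {
    if [ -e "$1" ]; then
        # ls -ln prints numeric UID/GID, which can be compared with the passthrough settings.
        echo "ok: $(ls -ln "$1")"
    else
        echo "missing: $1"
    fi
}

check_dev /dev/kfd
# If no render node is present, the unexpanded pattern is reported as missing.
for node in /dev/dri/renderD*; do
    check_dev "$node"
done
```

If both nodes show up, running `rocminfo` (shipped with ROCm) in the container should then list the GPU as an agent.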
