This has been giving me headaches for a few days. I have TrueNAS Scale running in a VM with a dedicated NIC and drives. My Proxmox host has a separate dedicated NIC and drives. Everything is up to date. I have set up datasets and shares in TrueNAS and can access the SMB shares without issue from my Windows clients. The NFS shares are another matter.

From a VM on the same host or on another host, I can mount the NFS shares and have read/write access. I tested this with vanilla Debian v11.3 VMs with nothing but the NFS client added. A simple "mount -vvv xxx.xxx.xxx.xxx:/mnt/data-01/ds-proxmox /opt/truenas" works, and I have no issues copying, editing, or deleting files/directories.
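For anyone wanting to reproduce the test with the NFS version pinned rather than negotiated, here is a rough sketch. The helper name `nfs_mount_cmd` is made up for illustration, and it only prints the command rather than running it; `vers=` is the standard NFS mount option, and the address is the same placeholder as above.

```shell
#!/bin/sh
# Hypothetical helper (not part of any tool): prints, but does not run,
# the mount command for a given NFS protocol version.
# $1 = NFS version (3 or 4), $2 = server:/export, $3 = local mount point
nfs_mount_cmd() {
    printf 'mount -t nfs -o vers=%s %s %s\n' "$1" "$2" "$3"
}

# Print the two variants so the same share can be tried under NFSv3 and NFSv4.
nfs_mount_cmd 3 xxx.xxx.xxx.xxx:/mnt/data-01/ds-proxmox /opt/truenas
nfs_mount_cmd 4 xxx.xxx.xxx.xxx:/mnt/data-01/ds-proxmox /opt/truenas
```

Running each printed command as root on both a Debian VM and a Proxmox host should show whether the behavior differs by protocol version or only by client.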

When I am on the Proxmox host, or any other Proxmox host, and try to do the same thing, I can mount the share and list the files but cannot edit them. Attempting to edit anything locks up the system, and I have to reboot the host to clear it. It is probably an NFS busy state with a dead session.

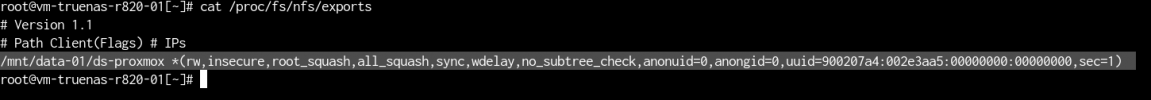

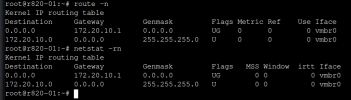

I have tried many different permissions and both NFSv3 and NFSv4 on the TrueNAS Scale side, but nothing works from any Proxmox host. This prevents me from creating NFS storage, shared or not, on any Proxmox host when the NFS share is served by TrueNAS Scale.

At this point I have to believe there is something going on with Proxmox related to accessing TrueNAS Scale NFS shares, as it is the only common denominator that does not work properly. Any help would be appreciated.
