Occasionally I have trouble exiting CTs that I entered with pct enter. I usually press Ctrl-D to exit, but I've found that typing exit gives the same result. I have cloned one of the CTs to ID 100 and am using it to test this.

When I exit, the terminal hangs as in the images below. I can then either kill the terminal window and log in again, or wait over 2 minutes for the exit to complete. The CT seems unaffected by this and keeps running as expected.

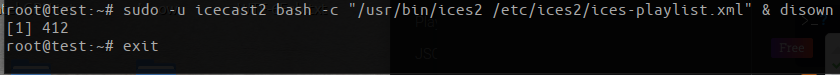

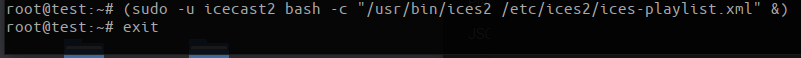

This specific container is running icecast2 along with ices2 to stream some audio files to icecast. What I have noticed is that this happens after running the command:

    sudo -u icecast2 bash -c "/usr/bin/ices2 /etc/ices2/ices-playlist.xml"

The command exits as expected and I'm able to continue using the CT until I try and exit it.
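To narrow this down, a check like the one below could be run inside the CT just before exiting. It is only a sketch: `list_holders` is a hypothetical helper name, it relies on the Linux `/proc` filesystem, and the idea that a process left over from the command above still holds the terminal open is an assumption, not something confirmed here.

```shell
#!/bin/sh
# list_holders PATH: print the PIDs of processes that currently have PATH
# open, found by scanning the /proc/<pid>/fd symlinks (Linux only).
# Hypothetical helper for illustration; run as root to see all processes.
list_holders() {
    # Resolve symlinks so the comparison uses the canonical path.
    target=$(readlink -f "$1") || return 1
    for fd in /proc/[0-9]*/fd/*; do
        # Skip descriptors that vanished or that we may not inspect.
        link=$(readlink "$fd" 2>/dev/null) || continue
        if [ "$link" = "$target" ]; then
            pid=${fd#/proc/}       # strip the leading "/proc/"
            echo "${pid%%/*}"      # keep only the PID component
        fi
    done | sort -un
}

# Possible use inside the container, just before exiting:
#   list_holders "$(tty)"
# Any PID listed other than the current shell is a candidate for
# whatever keeps the terminal busy.
```

If a PID belonging to ices2 shows up in that list, `ps -o pid,user,stat,cmd -p <pid>` would show what it is; if nothing unexpected is listed, the assumption above probably does not apply.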

Any help would be very much appreciated.

systemctl status pve-container@100.service (at the point it hangs)

    ● pve-container@100.service - PVE LXC Container: 100
       Loaded: loaded (/lib/systemd/system/pve-container@.service; static; vendor preset: enabled)
       Active: active (running) since Wed 2021-04-28 16:12:45 BST; 1 months 17 days ago
         Docs: man:lxc-start
               man:lxc
               man:pct
     Main PID: 10933 (lxc-start)
        Tasks: 1 (limit: 4915)
       Memory: 4.0M
       CGroup: /system.slice/system-pve\x2dcontainer.slice/pve-container@100.service
               ‣ 10933 /usr/bin/lxc-start -F -n 100

    Apr 28 16:12:45 ns396 systemd[1]: Started PVE LXC Container: 100.

/etc/pve/lxc/100.conf

    arch: amd64
    cores: 3
    cpulimit: 3
    cpuunits: 900
    hostname: test
    memory: 9632
    net0: name=eth0,bridge=vmbr0,firewall=1,gw=10.0.0.1,hwaddr=CA:87:6D:F8:5E:C8,ip=10.0.0.100/32,type=veth
    onboot: 1
    ostype: ubuntu
    rootfs: zfs:subvol-100-disk-0,size=20G
    snaptime: 1597069095
    startup: order=10
    swap: 2048
    unprivileged: 1

pveversion -v

    proxmox-ve: 6.4-1 (running kernel: 5.4.106-1-pve)
    pve-manager: 6.4-8 (running version: 6.4-8/185e14db)
    pve-kernel-5.4: 6.4-3
    pve-kernel-helper: 6.4-3
    pve-kernel-5.4.119-1-pve: 5.4.119-1
    pve-kernel-5.4.114-1-pve: 5.4.114-1
    pve-kernel-5.4.106-1-pve: 5.4.106-1
    pve-kernel-4.15: 5.4-19
    pve-kernel-4.15.18-30-pve: 4.15.18-58
    ceph-fuse: 12.2.11+dfsg1-2.1+b1
    corosync: 3.1.2-pve1
    criu: 3.11-3
    glusterfs-client: 5.5-3
    ifupdown: 0.8.35+pve1
    ksm-control-daemon: 1.3-1
    libjs-extjs: 6.0.1-10
    libknet1: 1.20-pve1
    libproxmox-acme-perl: 1.1.0
    libproxmox-backup-qemu0: 1.0.3-1
    libpve-access-control: 6.4-1
    libpve-apiclient-perl: 3.1-3
    libpve-common-perl: 6.4-3
    libpve-guest-common-perl: 3.1-5
    libpve-http-server-perl: 3.2-3
    libpve-storage-perl: 6.4-1
    libqb0: 1.0.5-1
    libspice-server1: 0.14.2-4~pve6+1
    lvm2: 2.03.02-pve4
    lxc-pve: 4.0.6-2
    lxcfs: 4.0.6-pve1
    novnc-pve: 1.1.0-1
    proxmox-backup-client: 1.1.9-1
    proxmox-mini-journalreader: 1.1-1
    proxmox-widget-toolkit: 2.5-6
    pve-cluster: 6.4-1
    pve-container: 3.3-5
    pve-docs: 6.4-2
    pve-edk2-firmware: 2.20200531-1
    pve-firewall: 4.1-4
    pve-firmware: 3.2-4
    pve-ha-manager: 3.1-1
    pve-i18n: 2.3-1
    pve-qemu-kvm: 5.2.0-6
    pve-xtermjs: 4.7.0-3
    pve-zsync: 2.2
    qemu-server: 6.4-2
    smartmontools: 7.2-pve2
    spiceterm: 3.1-1
    vncterm: 1.6-2
    zfsutils-linux: 2.0.4-pve1