I have a bit of a puzzle here.

I'm trying to make a web server running in a VM internet accessible.

Doesn't have to be pretty. I don't even need SSL. Just need to see the "Hello World" page on port 80.

Once I get that far I'm pretty sure I can build upon it myself.

The server is in a colo, to which I do not have physical access.

I am working with a single physical network card, which is 10G. We'll call this eno123.

I have a single publicly routable IPv4 address for this server. We'll call this 150.0.0.150.

Maybe I can get more in the future, but not many, and I need to get this working with one for now.

The interface with this address must be tagged vlan 6 (named eno123.6)

I have a private subnet, which we'll call 10.0.0.0/24

This is tagged vlan 10, running on the same physical network card (named eno123.10)

The Proxmox node has a bridge, which we'll call vmbr0, which has bridge port eno123.10 and is assigned IP 10.0.0.14.

If I change the bridge port, I can no longer access the node and have to resort to iDRAC to recover.

vmbr0 is NOT vlan-aware. Yes I've tried turning it on. Doesn't seem to make a difference.

There is a VM called http-server (10.0.0.5/24) which has apache2 running on port 80.

ufw within the VM is set to allow all.

VM Firewall rules are set to ACCEPT HTTP on all. Firewall is on (and checked for the virtual network card as well)

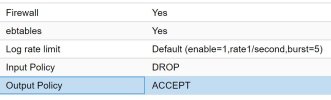

Node Firewall rules are set to ACCEPT HTTP on all. Firewall is on.

Datacenter Firewall rules are set to ACCEPT HTTP on all. Firewall is on.

If I boot up another VM that has a desktop environment (which we'll call ubuntu-desktop), I can see the "Hello World" page on 10.0.0.5:80, so VMs can talk to each other no problem.

I can set up basic masquerading to give VMs internet access for downloading updates and whatnot (iptables -t nat -A POSTROUTING -s '10.0.0.0/24' -o eno123.6 -j MASQUERADE)
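To make that outbound setup easy to reproduce, here is a minimal dry-run sketch of it. It only prints the commands. The interface and subnet are the placeholder names from this post, and the `sysctl` line is an assumption on my part: masquerading through the node needs IPv4 forwarding enabled, and I'm not stating here how it got enabled on my box.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints the commands instead of running them.
# Names mirror the placeholders used in this post.
PRIVATE_NET="10.0.0.0/24"   # vlan 10 subnet behind vmbr0
WAN_IF="eno123.6"           # vlan 6 interface holding 150.0.0.150

masquerade_cmds() {
  # Assumption: forwarding is not already on; the node must route
  # between vmbr0 and the vlan 6 interface for NAT to do anything.
  echo "sysctl -w net.ipv4.ip_forward=1"
  # The masquerade rule exactly as quoted in the post.
  echo "iptables -t nat -A POSTROUTING -s '${PRIVATE_NET}' -o ${WAN_IF} -j MASQUERADE"
}

masquerade_cmds
```

Running the printed lines as root on the node is what gives the VMs outbound access; nothing in the sketch itself changes the system.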

I have an account on a server running on bare metal in the same rack with which I access the Proxmox UI via SSH tunnel.

This account is limited, and can basically just be used for tunneling.

The tunnel requires vlan 10, which I cannot change.

Using this old bare metal server I can ping VMs running in the Proxmox server. I am certain it is running on vlan 10, so this should imply that the VMs are also on vlan 10.

I can also tunnel to a VM through this old bare metal server and open the "Hello World" page in a local browser on 127.0.0.1.

This would imply that any device on the subnet can access http-server (assuming they're tagged vlan 10).
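For clarity, the tunnel looks roughly like the sketch below. The user name, host name and local port are hypothetical placeholders (the real ones aren't in this post); only the VM address and port 80 come from the setup described above. It prints the command rather than opening a connection.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints the tunnel command, does not connect anywhere.
# JUMP_USER, JUMP_HOST and LOCAL_PORT are hypothetical placeholders.
JUMP_USER="tunnel-user"
JUMP_HOST="old-bare-metal.example"
LOCAL_PORT=8080
VM_IP="10.0.0.5"            # http-server

tunnel_cmd() {
  # -N: no remote command; -L: forward a local port to the VM via the jump host
  echo "ssh -N -L ${LOCAL_PORT}:${VM_IP}:80 ${JUMP_USER}@${JUMP_HOST}"
}

tunnel_cmd
```

With a tunnel like this up, the page is reachable in a local browser at 127.0.0.1 on whatever local port was chosen.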

I need, at some point, to use NAT to direct internet traffic to the VM. In the future yes, this is best done with a reverse proxy, but any future reverse proxy will *also* be a VM running in this Proxmox node, so it'll have the same issue.

This is what I've tried:

iptables -t nat -A PREROUTING -i eno123.6 -p tcp -d 150.0.0.150 --dport 80 -j DNAT --to-destination 10.0.0.5:80

iptables -t nat -A POSTROUTING -p tcp -d 10.0.0.5 --dport 80 -j SNAT --to-source 150.0.0.150

Didn't work.
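In case it helps anyone reproduce this, here is a dry-run sketch that prints the two rules above plus the read-only checks that seem relevant for narrowing down where the packets stop. The checks are my assumption about what is worth looking at, not a confirmed diagnosis; all names are the placeholders from this post.

```shell
#!/usr/bin/env bash
# Dry-run sketch: prints commands only; nothing here touches the firewall.
PUBLIC_IP="150.0.0.150"
WAN_IF="eno123.6"
VM_IP="10.0.0.5"

tried_rules() {
  # The two rules exactly as tried above.
  echo "iptables -t nat -A PREROUTING -i ${WAN_IF} -p tcp -d ${PUBLIC_IP} --dport 80 -j DNAT --to-destination ${VM_IP}:80"
  echo "iptables -t nat -A POSTROUTING -p tcp -d ${VM_IP} --dport 80 -j SNAT --to-source ${PUBLIC_IP}"
}

check_cmds() {
  # Packet counters show whether the DNAT rule is being hit at all.
  echo "iptables -t nat -L PREROUTING -n -v"
  # Forwarding state (1 = on) and the filter FORWARD chain policy and rules.
  echo "sysctl net.ipv4.ip_forward"
  echo "iptables -L FORWARD -n -v"
  # Does the request arrive on vlan 6, and does it leave towards the VM on vmbr0?
  echo "tcpdump -ni ${WAN_IF} tcp port 80"
  echo "tcpdump -ni vmbr0 host ${VM_IP} and tcp port 80"
}

tried_rules
check_cmds
```

The idea is to request the page from outside while watching the two `tcpdump` captures: if the request shows up on eno123.6 but never on vmbr0, the problem is on the node; if it reaches vmbr0 and no reply comes back, the VM's return path is the next thing to check.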

I've obviously tried a lot of variations. Spent about two days messing about with different combinations of various things turned on/off. No joy, or else I wouldn't be here.

I'm wondering if there's some limitation in Proxmox that makes this particular setup non-viable. Maybe something to do with routing from vlan to vlan with a single physical interface. This one physical connection is having to do a lot of stuff so I can see how that might be the case.

Will I need to get another physical network card in this thing? Or is there some trick I'm not seeing here? Is there some additional secret firewall on Proxmox VE 8.2 that I'm unaware of?

Things I haven't yet tried:

- NATing from 150.0.0.150 to 10.0.0.14, and *then* from 10.0.0.14 to 10.0.0.5 (and back) - seemed kinda silly so I thought I'd ask first.

- Begging the colo for help.

- Just buying another physical network card & cable and hoping there's an empty port in the switch.
