Hello,

we've got problems with our PBS setup.

Setup:

We have a PBS server that keeps 30 backups for each of several VMs (daily backup task + GC set to 30).

We have another PBS located offsite. We added a sync job on the offsite PBS to "transfer last" 12 versions from the other PBS.

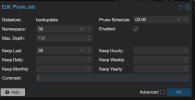

We added a GC task on the offsite PBS to "Keep Last" 30 versions for this namespace.

What we expect:

The sync will initially transfer the 12 latest backups. After that, the number of versions on the offsite PBS should increase day by day up to 30. Then the GC will clean up the oldest version every day, so the version count should be between 30 and 31, depending on whether the GC has already run that day.
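To make that expectation concrete, here is a minimal sketch of the arithmetic. It only models what we assumed would happen (one new backup per day, one sync per day, "Keep Last" applied afterwards); it is not a description of how PBS actually behaves, and the function name and parameters are made up for illustration.

```python
def expected_offsite_counts(days, transfer_last=12, keep_last=30):
    """Snapshot count per VM on the offsite PBS at the end of each day,
    under the expectation described above (hypothetical model)."""
    counts = []
    count = 0
    for day in range(days):
        if day == 0:
            count = transfer_last      # initial sync pulls the newest 12 versions
        else:
            count += 1                 # each later sync adds that day's new backup
        count = min(count, keep_last)  # "Keep Last" removes anything beyond 30
        counts.append(count)
    return counts


counts = expected_offsite_counts(40)
print(counts[0], counts[18], counts[-1])  # 12 30 30
```

Under this model every VM in the namespace should reach 30 versions after about 18 days and stay there.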

What we got after weeks of running:

Different version counts on VMs in the same namespace: 80% of the VMs have 7 versions, 15% have 9 versions, and 5% have 8-10 versions.

How can this be explained?

The sync log shows the following after triggering it manually:

Code:

2024-06-05T13:03:07+02:00: skipped: 30 snapshot(s) (2024-05-06T00:00:23Z .. 2024-06-04T00:00:26Z) - older than the newest local snapshot

2024-06-05T13:03:07+02:00: re-sync snapshot vm/107/2024-06-05T00:00:22Z

2024-06-05T13:03:07+02:00: no data changes

2024-06-05T13:03:07+02:00: percentage done: 14.29% (2/14 groups)

2024-06-05T13:03:07+02:00: skipped: 30 snapshot(s) (2024-05-06T00:00:02Z .. 2024-06-04T00:00:11Z) - older than the newest local snapshot

2024-06-05T13:03:07+02:00: re-sync snapshot vm/109/2024-06-05T00:00:04Z

2024-06-05T13:03:07+02:00: no data changes

Are we missing something fundamental?
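In case it helps anyone compare groups, here is a rough sketch for pulling the "skipped" and "re-sync" entries out of a sync task log like the excerpt above. The regular expressions are based only on the lines shown in this post, so treat the line format as an assumption; the function name is hypothetical.

```python
import re

# Patterns derived from the log excerpt in this post (assumed format).
SKIPPED = re.compile(r"skipped: (\d+) snapshot\(s\) \((\S+) \.\. (\S+)\) - (.+)$")
RESYNC = re.compile(r"re-sync snapshot (\S+)$")


def summarize_sync_log(lines):
    """Collect skipped-snapshot entries and re-synced snapshot names."""
    skipped, resynced = [], []
    for raw in lines:
        line = raw.strip()
        m = SKIPPED.search(line)
        if m:
            skipped.append({
                "count": int(m.group(1)),
                "oldest": m.group(2),
                "newest": m.group(3),
                "reason": m.group(4),
            })
            continue
        m = RESYNC.search(line)
        if m:
            resynced.append(m.group(1))
    return skipped, resynced


log = [
    "2024-06-05T13:03:07+02:00: skipped: 30 snapshot(s) (2024-05-06T00:00:23Z .. 2024-06-04T00:00:26Z) - older than the newest local snapshot",
    "2024-06-05T13:03:07+02:00: re-sync snapshot vm/107/2024-06-05T00:00:22Z",
    "2024-06-05T13:03:07+02:00: no data changes",
]
skipped, resynced = summarize_sync_log(log)
print(skipped[0]["count"], skipped[0]["reason"])  # 30 older than the newest local snapshot
print(resynced)  # ['vm/107/2024-06-05T00:00:22Z']
```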

EDIT: All PBS instances are running version 3.2-2.
