Yes, it has been working pretty well since I figured out how to do the passthrough properly.

It was a test server, so I did a few clean Proxmox installs, and it turned out to be really easy once you know what to do.

You don't have to install custom drivers, and IOMMU is enabled by default on AMD (the drivers come preinstalled).

BUT at some point during testing I somehow lost /dev/dri/renderD128, and that is bad. If this device node is missing, you have to get it back somehow: it is the render node that the amdgpu kernel driver creates for the AMD Ryzen iGPU, and it is what you pass through in the LXC container config file.
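If renderD128 goes missing, a first check is whether the device node exists and which kernel driver is bound to the iGPU. Below is a minimal bash sketch; the function names are my own (hypothetical), and matching the iGPU by the string "Phoenix1" assumes a Ryzen 7040-series (780M) iGPU as listed by `lspci`. If the reported driver is vfio-pci instead of amdgpu, the host has handed the iGPU to VFIO, and then no render node is created for it on the host.

```shell
#!/usr/bin/env bash
# Sketch: check the iGPU render node and the kernel driver bound to the GPU.
# Function names and the "Phoenix1" match are assumptions, not from a guide.

# driver_in_use PATTERN [LSPCI_K_OUTPUT]
# Prints the "Kernel driver in use" value for the first device whose
# lspci line matches PATTERN. Runs `lspci -k` itself if no text is given.
driver_in_use() {
  local pattern="$1"
  local text="${2:-$(lspci -k)}"
  printf '%s\n' "$text" | awk -v pat="$pattern" '
    /^[0-9a-f]/ { found = ($0 ~ pat) }   # unindented line = new device
    found && /Kernel driver in use:/ {
      sub(/.*Kernel driver in use: */, ""); print; exit
    }'
}

# check_render_node [NODE]
# Returns 0 if NODE (default /dev/dri/renderD128) is a character device.
check_render_node() {
  local node="${1:-/dev/dri/renderD128}"
  if [[ -c "$node" ]]; then
    echo "ok: $node present"
  else
    echo "missing: $node" >&2
    return 1
  fi
}
```

On the Proxmox host, source the functions and run `driver_in_use Phoenix1` and `check_render_node`; `ls -l /dev/dri` also shows which nodes currently exist.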

Because I also use other PCI devices (a Coral TPU), I did this:

nano /etc/default/grub

Code:

GRUB_CMDLINE_LINUX="quiet amd_iommu=on iommu=pt"

update-grub
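After `update-grub` and a reboot, you can check that the running kernel actually received these parameters by looking at /proc/cmdline. A small bash sketch (the function name is hypothetical). One caveat from the Proxmox docs: hosts that boot through systemd-boot (for example a ZFS root on UEFI) don't use GRUB at all; there the command line lives in /etc/kernel/cmdline and is applied with `proxmox-boot-tool refresh`.

```shell
#!/usr/bin/env bash
# has_kernel_params CMDLINE PARAM...
# Returns 0 if every PARAM appears as a whole word in CMDLINE.
# Hypothetical helper; pass "$(cat /proc/cmdline)" for the running kernel.
has_kernel_params() {
  local cmdline="$1"; shift
  local param missing=0
  for param in "$@"; do
    # Pad with spaces so "iommu=pt" cannot match inside a longer word.
    if [[ " $cmdline " != *" $param "* ]]; then
      echo "missing kernel parameter: $param" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `has_kernel_params "$(cat /proc/cmdline)" amd_iommu=on iommu=pt && echo "IOMMU parameters active"`.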

nano /etc/modules

Code:

    vfio
    vfio_iommu_type1
    vfio_pci
    vfio_virqfd

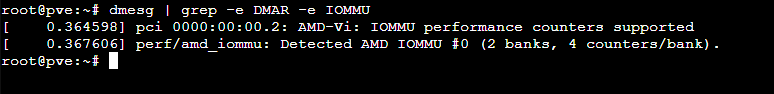
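If you repeat this on several clean installs like I did, a sketch that only appends module names that are not already in the file keeps /etc/modules tidy. The helper name is hypothetical. Afterwards, the Proxmox PCI passthrough docs suggest running `update-initramfs -u -k all` before rebooting. As far as I know, on kernel 6.2 and newer vfio_virqfd has been folded into the vfio module, so it no longer needs to be listed.

```shell
#!/usr/bin/env bash
# ensure_modules FILE MODULE...
# Appends each MODULE to FILE (e.g. /etc/modules) only if no line with
# exactly that name exists yet, so re-running it is harmless.
ensure_modules() {
  local file="$1"; shift
  local mod
  touch "$file"
  # Make sure the file ends with a newline before appending.
  if [[ -s "$file" && -n "$(tail -c1 "$file")" ]]; then
    printf '\n' >> "$file"
  fi
  for mod in "$@"; do
    if ! grep -qxF "$mod" "$file"; then
      printf '%s\n' "$mod" >> "$file"
    fi
  done
}
```

Usage: `ensure_modules /etc/modules vfio vfio_iommu_type1 vfio_pci`, then `update-initramfs -u -k all` and reboot.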

Reboot and check that IOMMU is still enabled:

dmesg | grep -e DMAR -e IOMMU

It should show something like this:

The easiest option is a VM, because you just have to give the VM the Phoenix1 PCI device.
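For the VM route you need the iGPU's PCI address. A minimal sketch that pulls it from `lspci` output by matching the device name; `pci_addr_of` is a hypothetical helper, and VM ID 101 in the usage line is only a placeholder.

```shell
#!/usr/bin/env bash
# pci_addr_of PATTERN [LSPCI_OUTPUT]
# Prints the PCI address (first field of an `lspci` line) of the first
# device whose description matches PATTERN, e.g. "Phoenix1".
# Runs `lspci` itself if no text is given.
pci_addr_of() {
  local pattern="$1"
  local text="${2:-$(lspci)}"
  printf '%s\n' "$text" | awk -v pat="$pattern" '$0 ~ pat { print $1; exit }'
}
```

Usage: `qm set 101 --hostpci0 "$(pci_addr_of Phoenix1)"` attaches that device to VM 101.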

If you use LXC, which I strongly suggest, you can share the GPU across any number of LXCs instead of being limited to a single VM.

To install Frigate with Docker inside an LXC, I followed this guide.

When you reach the "Mapping through the USB Coral TPU and Hardware Acceleration" step, edit the container config (XXX is your container ID):

nano /etc/pve/lxc/XXX.conf

Add these lines to the end: the first for the AMD Ryzen 780M iGPU and the second for the M.2 Coral TPU.

Code:

    lxc.mount.entry: /dev/dri/renderD128 dev/dri/renderD128 none bind,optional,create=file 0 0
    lxc.mount.entry: /dev/apex_0 dev/apex_0 none bind,optional,create=file 0 0
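Since LXC lets several containers share the iGPU, the same lines may end up in more than one config. A sketch that appends them only if missing; `add_lxc_mounts` is hypothetical, and container IDs 101 and 102 in the usage line are placeholders. It refuses to touch a config that has snapshot sections (lines starting with `[`), because in that case the end of the file belongs to the last snapshot, not to the live container config.

```shell
#!/usr/bin/env bash
# add_lxc_mounts CONF DEVICE...
# Appends a bind-mount lxc.mount.entry for each DEVICE to an LXC config
# such as /etc/pve/lxc/101.conf, skipping lines that are already present.
add_lxc_mounts() {
  local conf="$1"; shift
  local dev line
  if [[ ! -f "$conf" ]]; then
    echo "no such config: $conf" >&2
    return 1
  fi
  # Snapshot sections ([name]) follow the live config; appending there
  # would change the last snapshot instead of the running container.
  if grep -q '^\[' "$conf"; then
    echo "$conf has snapshot sections; add the lines by hand" >&2
    return 1
  fi
  # Make sure the file ends with a newline before appending.
  if [[ -s "$conf" && -n "$(tail -c1 "$conf")" ]]; then
    printf '\n' >> "$conf"
  fi
  for dev in "$@"; do
    # Container-side path is the host path without its leading slash.
    line="lxc.mount.entry: $dev ${dev#/} none bind,optional,create=file 0 0"
    if ! grep -qxF "$line" "$conf"; then
      printf '%s\n' "$line" >> "$conf"
    fi
  done
}
```

Usage: `for id in 101 102; do add_lxc_mounts "/etc/pve/lxc/$id.conf" /dev/dri/renderD128 /dev/apex_0; done`, then restart the containers.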

This is the compose file I use. Ignore the Intel part; it works for AMD too! (Devices: apex_0 = Coral TPU, renderD128 = AMD iGPU)

YAML:

    services:
      frigate:
        container_name: frigate
        restart: unless-stopped
        image: ghcr.io/blakeblackshear/frigate:stable
        shm_size: "256mb" # update for your cameras based on calculation above
        devices:
          - /dev/apex_0:/dev/apex_0
          - /dev/dri/renderD128:/dev/dri/renderD128 # for intel hwaccel, needs to be updated for your hardware
        volumes:
          - /etc/localtime:/etc/localtime
          - /opt/frigate/config:/config
          - /mnt/pve/Surveillance:/media/frigate
          - type: tmpfs # Optional: 1GB of memory, reduces SSD/SD Card wear
            target: /tmp/cache
            tmpfs:
              size: 1073741824
        ports:
          - "5000:5000"
          - "1935:1935" # RTMP feeds
        environment:
          FRIGATE_RTSP_PASSWORD: "password"
          NVIDIA_VISIBLE_DEVICES: void
          LIBVA_DRIVER_NAME: radeonsi
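Once the container is up, one way to confirm VA-API works is to run vainfo inside it and look for decode (VAEntrypointVLD) support. A minimal sketch, assuming the container is named frigate as in the compose file and that the Frigate image ships the vainfo tool; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# vaapi_can_decode PROFILE [VAINFO_OUTPUT]
# Returns 0 if vainfo lists PROFILE (e.g. VAProfileHEVCMain) with the
# VAEntrypointVLD (decode) entrypoint. Without text, it runs vainfo in the
# "frigate" container (assumes vainfo is present in the image).
vaapi_can_decode() {
  local profile="$1"
  local text="${2:-$(docker exec frigate vainfo --display drm --device /dev/dri/renderD128 2>/dev/null)}"
  printf '%s\n' "$text" |
    grep -Eq "^[[:space:]]*${profile}[[:space:]]*:[[:space:]]*VAEntrypointVLD"
}
```

Usage: `vaapi_can_decode VAProfileHEVCMain && echo "HEVC decode via VA-API available"`.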

And this was my starter Frigate config for the Annke C800, to check that the iGPU works:

YAML:

    mqtt:
      enabled: false

    ffmpeg:
      hwaccel_args: preset-vaapi

    detectors:
      coral:
        type: edgetpu

    #Global Object Settings
    objects:
      track:
        - person

    cameras:
      annkec800: # <------ Name the camera
        ffmpeg:
          output_args:
            record: -f segment -segment_time 10 -segment_format mp4 -reset_timestamps 1 -strftime 1 -c:v copy -tag:v hvc1 -bsf:v hevc_mp4toannexb -c:a aac
          inputs:
            - path: rtsp://admin:password@192.168.0.200:554/H264/ch1/main/av_stream # <----- Update for your camera
              roles:
                - detect
                - record
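The record output args in this config (`-tag:v hvc1 -bsf:v hevc_mp4toannexb`) only make sense for an H.265/HEVC stream, while the RTSP path mentions H264, so it is worth confirming what the camera actually sends. A sketch using ffprobe on any machine with ffmpeg installed; both function names are hypothetical.

```shell
#!/usr/bin/env bash
# stream_codec URL
# Prints the codec name of the first video stream (e.g. "hevc" or "h264").
stream_codec() {
  ffprobe -v error -rtsp_transport tcp -select_streams v:0 \
    -show_entries stream=codec_name \
    -of default=noprint_wrappers=1:nokey=1 "$1"
}

# record_args_fit CODEC
# Returns 0 only for HEVC, the codec the hvc1 tag and the
# hevc_mp4toannexb bitstream filter are meant for.
record_args_fit() {
  [[ "$1" == "hevc" ]]
}
```

Usage: `codec=$(stream_codec 'rtsp://admin:password@192.168.0.200:554/H264/ch1/main/av_stream')`, then `record_args_fit "$codec" || echo "stream is $codec: drop -tag:v hvc1 and -bsf:v hevc_mp4toannexb"`.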

And here is a screenshot from Frigate showing the iGPU: