Hello everyone,

We encountered some strange behavior when we tried to migrate a VM from one node to another.

Proxmox Version: Virtual Environment 7.4-3 (PVE Manager Version pve-manager/7.4-3/9002ab8a)

We have two nodes with a subscription and one without.

We tried to move one VM (101) from the node without a subscription to a node with a subscription.

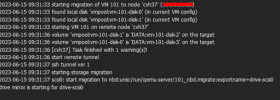

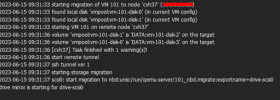

The migration stops at this point, and on the target node cvh37 we see timeouts in the log files for various VMs running on this host (see Part-of-syslog.txt).


Example entries:

```
Jun 15 09:33:50 cvh37 pvestatd[3535]: VM 104 qmp command failed - VM 104 qmp command 'query-proxmox-support' failed - unable to connect to VM 104 qmp socket - timeout after 51 retries
Jun 15 09:33:55 cvh37 pvestatd[3535]: VM 108 qmp command failed - VM 108 qmp command 'query-proxmox-support' failed - unable to connect to VM 108 qmp socket - timeout after 51 retries
Jun 15 09:34:00 cvh37 pvestatd[3535]: VM 123 qmp command failed - VM 123 qmp command 'query-proxmox-support' failed - unable to connect to VM 123 qmp socket - timeout after 51 retries
```

Even some Windows systems rebooted!
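To see which VMs on cvh37 were affected and when, the QMP timeout entries can be pulled out of the syslog excerpt. This is only a minimal sketch: the regular expression is inferred from the three example entries above and is not a documented pvestatd log format, and the script name in the usage note is hypothetical.

```python
import re
import sys
from collections import Counter

# Pattern inferred only from the example entries (an assumption):
# syslog timestamp, host, pvestatd[PID], then
# "VM <id> qmp command failed ... timeout after <n> retries".
QMP_TIMEOUT = re.compile(
    r"^(?P<ts>\w{3} +\d+ \d\d:\d\d:\d\d) (?P<host>\S+) pvestatd\[\d+\]: "
    r"VM (?P<vmid>\d+) qmp command failed .*timeout after \d+ retries"
)


def qmp_timeouts(lines):
    """Yield (timestamp, host, vmid) for every matching QMP timeout entry."""
    for line in lines:
        m = QMP_TIMEOUT.search(line)
        if m:
            yield m.group("ts"), m.group("host"), int(m.group("vmid"))


def summarize(lines):
    """Count QMP timeout entries per VM ID."""
    return Counter(vmid for _, _, vmid in qmp_timeouts(lines))


def main(paths):
    # Each argument is a syslog excerpt, e.g. Part-of-syslog.txt.
    for path in paths:
        with open(path, encoding="utf-8", errors="replace") as fh:
            counts = summarize(fh)
        for vmid, count in sorted(counts.items()):
            print(f"{path}: VM {vmid}: {count} QMP timeout(s)")


if __name__ == "__main__":
    main(sys.argv[1:])
```

Usage, for example: `python3 qmp_timeouts.py Part-of-syslog.txt`. Comparing the first and last timestamps with the migration start time could show whether the timeouts line up with the migration.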

VM 101 Configuration:

pveversion (cvh37):

```
proxmox-ve: 7.4-1 (running kernel: 5.15.102-1-pve)
pve-manager: 7.4-3 (running version: 7.4-3/9002ab8a)
pve-kernel-5.15: 7.3-3
pve-kernel-5.15.102-1-pve: 5.15.102-1
ceph-fuse: 15.2.17-pve1
corosync: 3.1.7-pve1
criu: 3.15-1+pve-1
glusterfs-client: 9.2-1
ifupdown2: 3.1.0-1+pmx3
ksm-control-daemon: 1.4-1
libjs-extjs: 7.0.0-1
libknet1: 1.24-pve2
libproxmox-acme-perl: 1.4.4
libproxmox-backup-qemu0: 1.3.1-1
libproxmox-rs-perl: 0.2.1
libpve-access-control: 7.4-1
libpve-apiclient-perl: 3.2-1
libpve-common-perl: 7.3-3
libpve-guest-common-perl: 4.2-4
libpve-http-server-perl: 4.2-1
libpve-rs-perl: 0.7.5
libpve-storage-perl: 7.4-2
libspice-server1: 0.14.3-2.1
lvm2: 2.03.11-2.1
lxc-pve: 5.0.2-2
lxcfs: 5.0.3-pve1
novnc-pve: 1.4.0-1
proxmox-backup-client: 2.3.3-1
proxmox-backup-file-restore: 2.3.3-1
proxmox-kernel-helper: 7.4-1
proxmox-mail-forward: 0.1.1-1
proxmox-mini-journalreader: 1.3-1
proxmox-widget-toolkit: 3.6.3
pve-cluster: 7.3-3
pve-container: 4.4-3
pve-docs: 7.4-2
pve-edk2-firmware: 3.20221111-1
pve-firewall: 4.3-1
pve-firmware: 3.6-4
pve-ha-manager: 3.6.0
pve-i18n: 2.11-1
pve-qemu-kvm: 7.2.0-8
pve-xtermjs: 4.16.0-1
qemu-server: 7.4-2
smartmontools: 7.2-pve3
spiceterm: 3.2-2
swtpm: 0.8.0~bpo11+3
vncterm: 1.7-1
zfsutils-linux: 2.1.9-pve1
```

Migrations between the other two nodes run without problems.
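Since migrations between the other two nodes work, one thing worth checking is whether the source node runs different package versions than cvh37. A minimal sketch, assuming the `pveversion -v` output of each node has been saved to a text file (the file names in the usage note are hypothetical):

```python
import sys


def parse_pveversion(text):
    """Parse "package: version" lines (pveversion -v style) into a dict.

    Only the first colon separates name and version, so entries such as
    "proxmox-ve: 7.4-1 (running kernel: 5.15.102-1-pve)" keep their full value.
    """
    versions = {}
    for line in text.splitlines():
        name, sep, rest = line.partition(":")
        if sep and name.strip() and rest.strip():
            versions[name.strip()] = rest.strip()
    return versions


def diff_versions(a, b):
    """Return {package: (version_in_a, version_in_b)} for every mismatch.

    A package present on only one side is reported with None on the other.
    """
    diff = {}
    for pkg in sorted(a.keys() | b.keys()):
        if a.get(pkg) != b.get(pkg):
            diff[pkg] = (a.get(pkg), b.get(pkg))
    return diff


def main(path_a, path_b):
    with open(path_a, encoding="utf-8") as fa, open(path_b, encoding="utf-8") as fb:
        a, b = parse_pveversion(fa.read()), parse_pveversion(fb.read())
    for pkg, (va, vb) in diff_versions(a, b).items():
        print(f"{pkg}: {va or '(missing)'} vs {vb or '(missing)'}")


if __name__ == "__main__":
    # Expects two files: the source node's and the target node's output.
    if len(sys.argv) == 3:
        main(sys.argv[1], sys.argv[2])
```

Usage, for example: `python3 compare_pveversion.py source-node.txt cvh37.txt`. An empty result would suggest the package versions are not the difference between the working and the failing migration path.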

regards

Bastian
