Hi

I have exactly the same problem. I have installed the latest version, PVE 8.2.2. The root filesystem is on an md0 RAID1 array; everything else is on ZFS.

The operating system boots inconsistently: on one restart it may hang for 2-4 minutes and then load, while on another it may hang for 5 minutes and fail to load. This happens completely at random.

The problem is observed specifically during boot-up. No issues have been observed during operation so far.

Key moments are captured in screenshots:

- GRUB menu at 1:16
- Immediately after GRUB at 1:19, the screen shows:

  `Booting Proxmox VE GNU/Linux`
  `Loading initial ramdisk...`

When the boot succeeds, it hangs at this screen for about 2 minutes and then continues. Normally, booting should start immediately after GRUB, or within 2 seconds at most. In this case, however, when the OS failed to load, it hung on this screen until 03:30, i.e., for 2 minutes and 20 seconds.

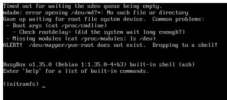

Here it shows: `Timed out waiting for the udev queue to be empty.`

It then hangs like this for almost 2 more minutes, until 05:14.

At 05:14 it shows: `Timed out waiting for the udev queue to be empty.` followed by `mdadm: error opening /dev/md?*: No such file or directory`.

The root filesystem is on exactly this RAID array.

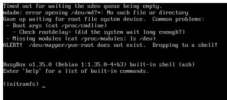

After another 3 seconds, it stops at this screen, where it responds to neither the keyboard nor Ctrl+Alt+Del.

After one or two more restarts, the system eventually boots, again with a long hang of 2-4 minutes.

For testing, all hardware components were replaced one by one from a spare-parts kit (motherboard, RAM, power supply, NVMe drives, SAS controllers). Only the CPU was not replaced, so hardware issues can almost certainly be ruled out.

An important observation: if all HDDs and SSDs are disconnected, leaving only the NVMe drives that hold the root filesystem, the system boots immediately without any delay.

It seems the bootloader does not like having so many drives in the server (18 in total).

If you need any additional data or command output, I'll send it.