Hi there, I'm new to the Proxmox forums.

First of all, thanks for developing Proxmox and let us experiment with this incredible piece of software. I am still in the "trial and error" phase but I am enjoying every second of it. I have searched the forums but maybe I was not using the correct keywords so I am posting this new thread.

I have a Debian 12 VM set up with two disks:

Disk 1: 32GB for the root filesystem created in "local-lvm" storage (LVM-Thin) on the OS drive which is a 2TB WD SA500 M.2 SATA SSD.

Disk 2: 2TB for the /home partition created in "timechain" storage (LVM-Thin) on a second drive which is a 2TB WD SA500 2.5" SATA SSD.

Both disks have SSD Emulation and Discard enabled.

I have noticed that there is a substantial discrepancy between the usage reported in the "timechain" thinpool and the actual usage of the /home folder in the VM.

If I run

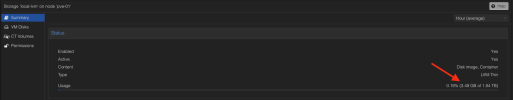

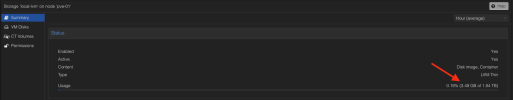

In the Proxmox web UI instead, I see that the usage for the "local-lvm" thinpool is 3.49GB (screenshot below for reference).

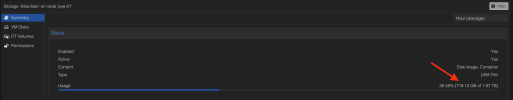

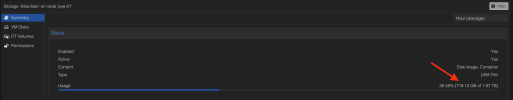

And the usage for the "timechain" thinpool is almost 720GB (screenshot below for reference).

There is most probably something I have misconfigured but I can't figure out what it is. I appreciate any input.

First of all, thanks for developing Proxmox and letting us experiment with this incredible piece of software. I am still in the "trial and error" phase, but I am enjoying every second of it. I have searched the forums, but maybe I was not using the correct keywords, so I am posting this new thread.

I have a Debian 12 VM set up with two disks:

Disk 1: 32GB for the root filesystem, created in "local-lvm" storage (LVM-Thin) on the OS drive, which is a 2TB WD SA500 M.2 SATA SSD.

Disk 2: 2TB for the /home partition, created in "timechain" storage (LVM-Thin) on a second drive, which is a 2TB WD SA500 2.5" SATA SSD.

Both disks have SSD Emulation and Discard enabled.

I have noticed that there is a substantial discrepancy between the usage reported in the "timechain" thinpool and the actual usage of the /home folder in the VM.

If I run `df -h` inside the VM, I get this:

Code:

/dev/sda1 32G 2.5G 28G 9% /
/dev/sdb1 1.8T 633G 1.1T 37% /home

In the Proxmox web UI instead, I see that the usage for the "local-lvm" thinpool is 3.49GB (screenshot below for reference).

And the usage for the "timechain" thinpool is almost 720GB (screenshot below for reference).
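
To put a number on the gap, here is a rough sketch of the arithmetic in Python. It assumes that the "G" in the `df -h` output means GiB (powers of 1024) and that the web UI figures are decimal GB; the second part is only my assumption, and the UI may well be reporting binary units instead. The function and variable names are made up for this example.

```python
# Figures copied from the post above; the unit interpretation is an assumption.
GIB = 1024 ** 3  # df -h reports sizes in powers of 1024
GB = 1000 ** 3   # assumed unit of the web UI figures (may actually be binary)


def gib_to_gb(gib: float) -> float:
    """Convert binary gibibytes to decimal gigabytes."""
    return gib * GIB / GB


def gap_gb(df_used_gib: float, pool_used_gb: float) -> float:
    """Thin pool usage minus filesystem usage, both expressed in decimal GB."""
    return pool_used_gb - gib_to_gb(df_used_gib)


if __name__ == "__main__":
    # (mount point, df "Used" in GiB, pool name, pool usage in GB)
    rows = [
        ("/", 2.5, "local-lvm", 3.49),
        ("/home", 633.0, "timechain", 720.0),
    ]
    for mount, df_used, pool, pool_used in rows:
        print(f"{mount}: df says {gib_to_gb(df_used):.2f} GB, "
              f"{pool} says {pool_used:.2f} GB, "
              f"gap {gap_gb(df_used, pool_used):.2f} GB")
```

If the unit assumption holds, the unit difference alone does not account for the gap on "timechain"; if the web UI is actually showing binary units, the gap is larger still.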

I have most probably misconfigured something, but I can't figure out what it is. I would appreciate any input.