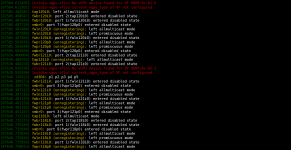

I am running PVE with an A16 (64G) and 256G of system memory, and have allocated 10 virtual machines, one of which was allocated 4G of video memory. When the virtual machines are started, 3-5 of them start normally, but after a few hours the other virtual machines cannot be started. The errors are:

[NVIDIA-vgpu-vfio] no vgpu device found for vf 0000:8b:01.7

[228095.276939] [NVIDIA-vgpu-vfio] current_vgpu_type of vf not configured

If the graphics card allocated to the virtual machine is removed, it starts normally, but my 64G of video memory has not been fully allocated. If the PVE server is restarted, all virtual machines can be started immediately after the restart. However, any machine that is not started at that point will no longer start a few hours later. I really don't understand. Please help me.

The vGPU virtual machine of A16 cannot be started.

- Thread starter yiyang5188

Hi, it looks like your Virtual Function (VF) for vGPU passthrough is not properly bound to VFIO at the time the VM starts.

Your VF (0000:8c:02.2) belongs to IOMMU group 221, but that group is not attached to VFIO yet.

Run:

Code:

lspci -nnk | grep -A 3 8c:02.2

and see what driver is bound.

If it’s not vfio-pci, rebind it manually:

Code:

echo "0000:8c:02.2" > /sys/bus/pci/devices/0000:8c:02.2/driver/unbind

echo "10de 2237" > /sys/bus/pci/drivers/vfio-pci/new_id

(Replace 10de 2237 with your actual vendor:device ID from lspci -nn.)

See if it works.
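As a side note to the check above, the bound driver can also be read directly from sysfs, which is handy when looping over many VFs. This is a minimal sketch, assuming the usual sysfs layout; the function name `pci_driver` and the `SYSFS_ROOT` override are hypothetical helpers introduced here for illustration, not part of any tool mentioned in this thread.

```shell
# Print the kernel driver bound to a PCI device, or "none" if it is unbound.
# SYSFS_ROOT is an assumed override so the function can be tried against a
# fake directory tree; on a real host it defaults to /sys.
pci_driver() {
    pd_dev="$1"
    pd_link="${SYSFS_ROOT:-/sys}/bus/pci/devices/$pd_dev/driver"
    if [ -L "$pd_link" ]; then
        # The "driver" entry is a symlink whose last component is the driver name.
        basename "$(readlink "$pd_link")"
    else
        echo none
    fi
}
```

For example, `pci_driver 0000:8c:02.2` prints the driver name for the VF discussed above.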

It's all NVIDIA.

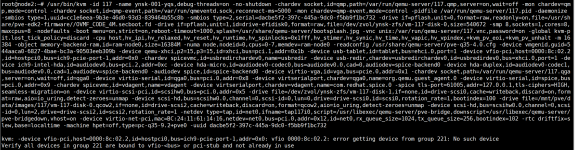

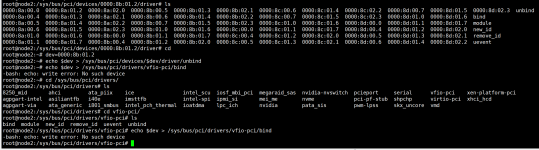

I ran the two commands, but it still didn't work.

From the screenshots we can see a few key points:

All of your A16 GPU VFs (Virtual Functions) are still bound to the nvidia driver, not vfio-pci.

That’s why your VM start command shows:

Code:

kvm: error getting device from group 221: No such device

Verify all devices in group 221 are bound to vfio or pci-stub

When nvidia is still controlling those VFs, Proxmox cannot attach them to a VM through VFIO.
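The error above asks to verify every device in group 221, so it can help to list the group members together with their current drivers. This is a rough sketch, assuming the standard `/sys/kernel/iommu_groups/<group>/devices/` layout; `iommu_group_drivers` and the `SYSFS_ROOT` override are hypothetical names used only for this example.

```shell
# List each device in an IOMMU group with the driver it is bound to.
# SYSFS_ROOT is an assumed override for trying this on a fake tree;
# on a real host it defaults to /sys.
iommu_group_drivers() {
    ig_group="$1"
    ig_root="${SYSFS_ROOT:-/sys}"
    for ig_entry in "$ig_root/kernel/iommu_groups/$ig_group/devices/"*; do
        # Skip the unexpanded glob when the group does not exist or is empty.
        [ -e "$ig_entry" ] || continue
        if [ -L "$ig_entry/driver" ]; then
            ig_drv=$(basename "$(readlink "$ig_entry/driver")")
        else
            ig_drv=none
        fi
        printf '%s %s\n' "$(basename "$ig_entry")" "$ig_drv"
    done
}
```

Running `iommu_group_drivers 221` prints one `address driver` pair per line, which makes it easy to spot members that are bound to something other than the expected driver.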

How can I bind to vfio or pci-stub?

You should bind the GPU VFs to vfio-pci (don’t use pci-stub unless you really must). Example for 0000:8c:00.6 (ID 10de:25b6):

To bind:

Code:

dev=0000:8c:00.6

echo $dev > /sys/bus/pci/devices/$dev/driver/unbind

echo $dev > /sys/bus/pci/drivers/vfio-pci/bind

lspci -nnk -s $dev # should show: Kernel driver in use: vfio-pci

Make sure IOMMU is enabled (e.g. intel_iommu=on iommu=pt or amd_iommu=on in the kernel cmdline).

If you prefer a tool: apt install driverctl, then driverctl set-override 0000:8c:00.6 vfio-pci.

Please try it.
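To check the IOMMU point above without rebooting into the bootloader menu, the running kernel's command line can be inspected. This is a small sketch that only looks for the three parameters named in the reply; the function name `iommu_params` and its optional file argument are assumptions made for this example, and a missing parameter does not by itself prove that the IOMMU is off.

```shell
# Print any of the IOMMU-related parameters named above that appear on the
# kernel command line. The optional argument is an assumed convenience for
# pointing at a saved copy; by default /proc/cmdline is read.
# Returns non-zero if none of the parameters is present.
iommu_params() {
    tr -s ' ' '\n' < "${1:-/proc/cmdline}" |
        grep -E -x '(intel_iommu|amd_iommu)=on|iommu=pt'
}
```

Calling `iommu_params` with no argument on the PVE host prints the matching parameters, one per line.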

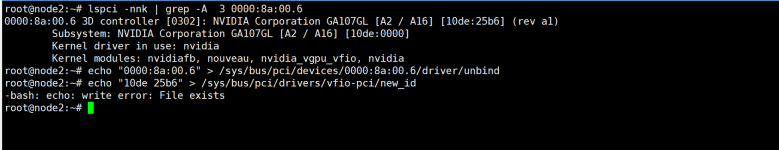

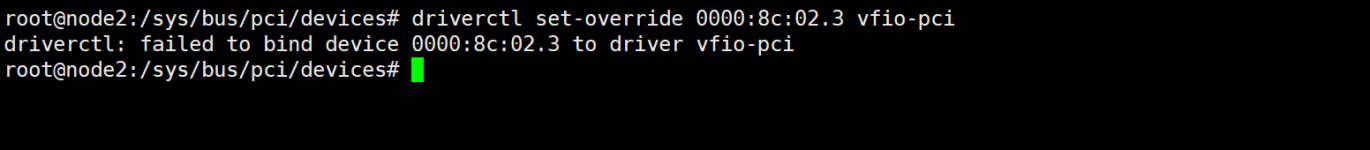

These operations failed.

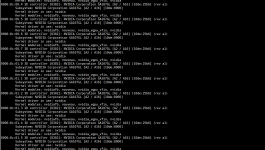

OK, from your latest screenshots, I now see that your setup is already running in vGPU mode: the nvidia-vgpu-vfio messages confirm that the vGPU Manager is active.

That means you shouldn’t bind those GPU addresses to vfio-pci; instead, create and assign mdev (vGPU) devices through Proxmox.

In short: you’re in shared vGPU mode, not full passthrough mode, so continue using the mdev method for your VMs.

Since it is in shared vGPU mode, you need to undo the vfio changes, reboot, then create vGPUs under /sys/class/mdev_bus/.../mdev_supported_types/ and attach them to VMs using Add → Mediated Device (mdev) in Proxmox.

Simple rule: Full GPU passthrough = vfio-pci, Shared GPU = mdev (vGPU Manager).

Hope this helps.
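Before creating vGPUs it may be useful to see which mdev types each parent device offers and how many instances are still available. The sketch below assumes the host exposes the mediated-device sysfs interface mentioned in the reply, with the `name` and `available_instances` files of the kernel's mdev interface; the function name `list_mdev_types` and the `SYSFS_ROOT` override are hypothetical.

```shell
# For every parent device under mdev_bus, print each supported mdev type
# with its remaining instance count and human-readable name.
# SYSFS_ROOT is an assumed override for trying this on a fake tree;
# on a real host it defaults to /sys.
list_mdev_types() {
    lm_root="${SYSFS_ROOT:-/sys}"
    for lm_type in "$lm_root"/class/mdev_bus/*/mdev_supported_types/*; do
        # Skip the unexpanded glob when no mdev-capable device is present.
        [ -d "$lm_type" ] || continue
        lm_parent=$(basename "$(dirname "$(dirname "$lm_type")")")
        lm_name=$(cat "$lm_type/name" 2>/dev/null || echo '?')
        lm_avail=$(cat "$lm_type/available_instances" 2>/dev/null || echo '?')
        printf '%s %s available=%s name=%s\n' \
            "$lm_parent" "$(basename "$lm_type")" "$lm_avail" "$lm_name"
    done
}
```

If this prints nothing on the host, no parent device is registered with the mdev bus, which is worth knowing before trying Add → Mediated Device (mdev) in Proxmox.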