I have a PVE 7.1-10 cluster with three nodes running Ceph 16.2.7, with two pools: hdd_pool and ssd pool.

The problem is that whenever I create or restore a VM with a single disk, the same image appears in both pools.

For example, I have a VM with ID 135 and only one disk, yet I see vm-135-disk0 in both hdd-pool and ssd-pool, both in the web UI and on the command line (e.g. `rbd ls -p ssd-pool | grep 135`, or the same command with hdd-pool).
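The command-line check above can be scripted as a small sketch. It assumes the `rbd` CLI is on PATH and that the pool names are `hdd-pool` and `ssd-pool` as in the command above; the helper names `list_images` and `common_images` are hypothetical. It only reports image names that show up in both listings, so on its own it cannot tell two separate copies apart from one image that is visible under both names.

```python
import subprocess
from typing import List, Optional


def list_images(pool: str) -> List[str]:
    """Return the RBD image names in `pool` by running `rbd ls -p <pool>`."""
    out = subprocess.run(
        ["rbd", "ls", "-p", pool],
        check=True, capture_output=True, text=True,
    ).stdout
    # One image name per line; skip blank lines.
    return [line.strip() for line in out.splitlines() if line.strip()]


def common_images(a: List[str], b: List[str], vmid: Optional[str] = None) -> List[str]:
    """Return names present in both lists, optionally only those for one VM ID."""
    shared = sorted(set(a) & set(b))
    if vmid is not None:
        # PVE image names embed the VM ID between dashes, e.g. vm-135-disk0.
        shared = [name for name in shared if f"-{vmid}-" in name]
    return shared
```

For example, `common_images(list_images("hdd-pool"), list_images("ssd-pool"), vmid="135")` would return the VM 135 image names that both pools list.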

If I delete one of them, both are deleted, whether I do it from the VM configuration in the web UI or with `rbd rm` on the command line.

Also, in the web UI the VM image lists of the two pools are identical.

So is there a bug in PVE 7.1-10 and/or Ceph 16.2.7? And how can I solve it?

Further information is in the screenshots below:

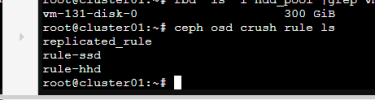

The two pools, with their two rules:

Rule details:

Some scripts used when creating this cluster:
