Hi,

We have a VM whose outgoing traffic runs at around 140 Mbps, which is much higher than our limits. We have set many different values for the network rate limit, such as 12.5, 5 and 0.5, but none of them seems to work. Can anyone advise on this? We tried different NIC models (VirtIO, Intel E1000), all of them.
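For reference when comparing the numbers: the Proxmox VE documentation describes the `rate` option on a network device as a limit in megabytes per second (MB/s), while traffic graphs are usually shown in megabits per second. The minimal Python sketch below converts the values we tried. It assumes the 140 figure is in megabits per second, and the helper names are hypothetical. Under that assumption, even `rate=12.5` should cap the NIC at about 100 Mbit/s, so seeing 140 Mbit/s suggests the limit is not being applied at all.

```python
# Sketch: convert Proxmox VE NIC `rate` values (documented as MB/s, megabytes
# per second) into Mbit/s so they can be compared with traffic graphs.
# Helper names are hypothetical, for illustration only.

BITS_PER_BYTE = 8


def rate_mbytes_to_mbits(rate_mb_per_s: float) -> float:
    """Convert a NIC `rate` value in MB/s to Mbit/s."""
    return rate_mb_per_s * BITS_PER_BYTE


def exceeds_limit(observed_mbit_per_s: float, rate_mb_per_s: float) -> bool:
    """Return True if observed traffic (Mbit/s) is above the configured limit."""
    return observed_mbit_per_s > rate_mbytes_to_mbits(rate_mb_per_s)


if __name__ == "__main__":
    observed = 140.0  # Mbit/s, assumed to be bits (not bytes) per second
    for rate in (12.5, 5, 0.5):
        limit = rate_mbytes_to_mbits(rate)
        print(f"rate={rate} -> {limit} Mbit/s, exceeded: {exceeds_limit(observed, rate)}")
```

With all three values the observed 140 Mbit/s is above the computed cap, which is why the limit looks like it is being ignored rather than merely set too high.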

++++

qm config 119

agent: 1

boot: c

bootdisk: scsi0

cipassword: **********

ciuser: root

cores: 1

ide0: local-lvm:vm-119-cloudinit,media=cdrom,size=4M

ide2: none,media=cdrom

ipconfig0: ip

memory: 1024

name: test.com

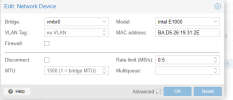

net0: e1000=BA 5:26:19:31:2E,bridge=vmbr0,rate=0.5

5:26:19:31:2E,bridge=vmbr0,rate=0.5

numa: 1

ostype: l26

parent: AsmCldo-new

scsi0: local-lvm:vm-119-disk-0,discard=on,size=20G

scsihw: virtio-scsi-pci

smbios1: uuid=7e077122-cb73-4d4d-9759-744cd8f6ff7c

sockets: 1

vmgenid: aae877db-03f9-46e7-a3a3-b1e8ed3487dc

Thank you