I have a few servers with consumer-grade SSDs. I used to run ZFS on them, but apparently, besides a good amount of RAM, ZFS also needs enterprise SSDs with some built-in cache / fault protection. So I built my next server with mdraid and LVM thin (for the snapshot feature).

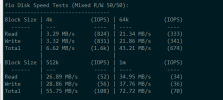

But my benchmarks for this software RAID10 setup are terrible.

Here are the results:

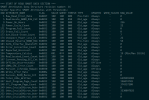

This is my RAID output, followed by the disk layout (the other two disks have the same layout) and the disk info:

Code:

    /mnt/remote-fs/dump# cat /proc/mdstat
    Personalities : [raid10] [linear] [multipath] [raid0] [raid1] [raid6] [raid5] [raid4]
    md127 : active raid10 sdb3[1] sdd3[3] sdc3[2] sda3[4]
          1952209920 blocks super 1.2 512K chunks 2 near-copies [4/4] [UUUU]
          bitmap: 6/15 pages [24KB], 65536KB chunk

    unused devices: <none>
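
As a rough cross-check of the mdstat line above (4 members, 2 near-copies, 512K chunk), the array geometry can be worked out by hand. The bash sketch below uses hypothetical helper functions, with values copied from the mdstat and lsblk output rather than read from the live system. The stripe-width formula assumes the default near layout with an even number of members.

```shell
#!/usr/bin/env bash
# Sanity-check the md RAID10 geometry shown in /proc/mdstat.
# Hypothetical helpers; all inputs are copied by hand from the mdstat/lsblk output.

# Usable size of a RAID10 "near" array: each block is stored on `copies`
# members, so usable = members * member_size / copies.
raid10_usable_gib() {
  local members=$1 member_gib=$2 copies=$3
  echo $(( members * member_gib / copies ))
}

# /proc/mdstat reports the array size in 1 KiB blocks; convert to whole GiB.
kib_to_gib() {
  echo $(( $1 / 1024 / 1024 ))
}

# Full-stripe width for the near layout (even member count assumed):
# one chunk on each of members/copies mirror sets.
raid10_stripe_kib() {
  local chunk_kib=$1 members=$2 copies=$3
  echo $(( chunk_kib * members / copies ))
}

echo "expected usable: $(raid10_usable_gib 4 931 2) GiB"   # 4 x 931G partitions, 2 near-copies
echo "reported size:   $(kib_to_gib 1952209920) GiB"        # 'blocks' field from mdstat
echo "stripe width:    $(raid10_stripe_kib 512 4 2) KiB"    # 512K chunk
```

Run as-is it prints 1862 GiB, 1861 GiB and 1024 KiB. The reported size sits slightly below the naive figure because md reserves space at the start of each member for metadata (superblock, internal bitmap) and lsblk rounds the partition size to 931G. With 2 near-copies every write lands on two SSDs, so in the idealized case sequential writes top out at roughly twice a single drive rather than four times; the 1024 KiB full stripe is what md normally advertises as the optimal I/O size to the LVM layer on top.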

Code:

    sdc 8:32 0 931.5G 0 disk
    ├─sdc1 8:33 0 1007K 0 part
    ├─sdc2 8:34 0 512M 0 part
    └─sdc3 8:35 0 931G 0 part
      └─md127 9:127 0 1.8T 0 raid10
        ├─pve-swap 253:0 0 8G 0 lvm [SWAP]
        ├─pve-root 253:1 0 96G 0 lvm /
        ├─pve-data_tmeta 253:2 0 15.8G 0 lvm
        │ └─pve-data-tpool 253:4 0 1.7T 0 lvm
        │   ├─pve-data 253:5 0 1.7T 1 lvm
        │   └─pve-vm--5001--disk--0 253:6 0 649.8G 0 lvm
        └─pve-data_tdata 253:3 0 1.7T 0 lvm
          └─pve-data-tpool 253:4 0 1.7T 0 lvm
            ├─pve-data 253:5 0 1.7T 1 lvm
            └─pve-vm--5001--disk--0 253:6 0 649.8G 0 lvm
    sdd 8:48 0 931.5G 0 disk
    ├─sdd1 8:49 0 1007K 0 part
    ├─sdd2 8:50 0 512M 0 part
    └─sdd3 8:51 0 931G 0 part
      └─md127 9:127 0 1.8T 0 raid10
        ├─pve-swap 253:0 0 8G 0 lvm [SWAP]
        ├─pve-root 253:1 0 96G 0 lvm /
        ├─pve-data_tmeta 253:2 0 15.8G 0 lvm
        │ └─pve-data-tpool 253:4 0 1.7T 0 lvm
        │   ├─pve-data 253:5 0 1.7T 1 lvm
        │   └─pve-vm--5001--disk--0 253:6 0 649.8G 0 lvm
        └─pve-data_tdata 253:3 0 1.7T 0 lvm
          └─pve-data-tpool 253:4 0 1.7T 0 lvm
            ├─pve-data 253:5 0 1.7T 1 lvm
            └─pve-vm--5001--disk--0 253:6 0 649.8G 0 lvm

Disk info:

Code:

    Model Family: Crucial/Micron Client SSDs
    Device Model: CT1000BX500SSD1
    Serial Number: 23BB9E6904DF
    LU WWN Device Id: 5 00a075 1e6904a0f
    Firmware Version: M6CR061
    User Capacity: 1,000,204,886,016 bytes [1.00 TB]
    Sector Size: 512 bytes logical/physical
    Rotation Rate: Solid State Device
    Form Factor: 2.5 inches
    TRIM Command: Available
    Device is: In smartctl database [for details use: -P show]
    ATA Version is: ACS-3 T13/2161-D revision 4
    SATA Version is: SATA 3.3, 6.0 Gb/s (current: 6.0 Gb/s)
    Local Time is: Mon Mar 27 21:00:44 2023 BST
    SMART support is: Available - device has SMART capability.
    SMART support is: Enabled

Code:

    pveversion -v
    proxmox-ve: 7.3-1 (running kernel: 5.15.102-1-pve)
    pve-manager: 7.4-3 (running version: 7.4-3/9002ab8a)
    pve-kernel-5.15: 7.3-3
    pve-kernel-helper: 7.2-14
    pve-kernel-5.15.102-1-pve: 5.15.102-1
    pve-kernel-5.15.74-1-pve: 5.15.74-1
    ceph-fuse: 15.2.17-pve1
    corosync: 3.1.7-pve1
    criu: 3.15-1+pve-1
    glusterfs-client: 9.2-1
    ifupdown2: 3.1.0-1+pmx3
    ksm-control-daemon: 1.4-1
    libjs-extjs: 7.0.0-1
    libknet1: 1.24-pve2
    libproxmox-acme-perl: 1.4.4
    libproxmox-backup-qemu0: 1.3.1-1
    libproxmox-rs-perl: 0.2.1
    libpve-access-control: 7.4-2
    libpve-apiclient-perl: 3.2-1
    libpve-common-perl: 7.3-3
    libpve-guest-common-perl: 4.2-4
    libpve-http-server-perl: 4.2-1
    libpve-rs-perl: 0.7.5
    libpve-storage-perl: 7.4-2
    libspice-server1: 0.14.3-2.1
    lvm2: 2.03.11-2.1
    lxc-pve: 5.0.2-2
    lxcfs: 5.0.3-pve1
    novnc-pve: 1.4.0-1
    proxmox-backup-client: 2.3.3-1
    proxmox-backup-file-restore: 2.3.3-1
    proxmox-mail-forward: 0.1.1-1
    proxmox-mini-journalreader: 1.3-1
    proxmox-widget-toolkit: 3.6.3
    pve-cluster: 7.3-3
    pve-container: 4.4-3
    pve-docs: 7.4-2
    pve-edk2-firmware: 3.20221111-2
    pve-firewall: 4.3-1
    pve-firmware: 3.6-4
    pve-ha-manager: 3.6.0
    pve-i18n: 2.11-1
    pve-qemu-kvm: 7.2.0-8
    pve-xtermjs: 4.16.0-1
    qemu-server: 7.4-2
    smartmontools: 7.2-pve3
    spiceterm: 3.2-2
    swtpm: 0.8.0~bpo11+3
    vncterm: 1.7-1
    zfsutils-linux: 2.1.9-pve1

Where am I going wrong?