I understand that LXC has less overhead than a VM, but the difference in disk performance is so large (about 2038 MiB/s vs. 62 MiB/s aggregate read bandwidth in the fio run below) that I suspect the VM is misconfigured. I'm hoping for help configuring the VM for better disk performance.

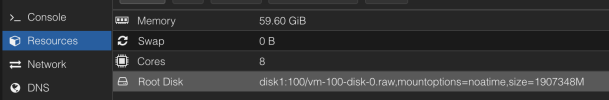

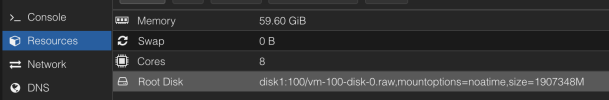

LXC specs:

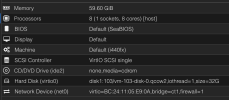

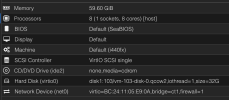

VM specs:

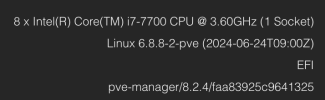

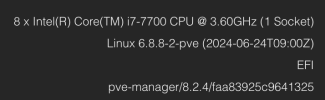

Host specs:

Using this fio configuration:


```ini
[global]
ioengine=libaio
direct=1
time_based
runtime=180
group_reporting
filename=/tmp/test_file
size=10G

[random-read]
description=Simulates reading pieces from different parts of files
rw=randread
bs=256K
iodepth=32
numjobs=4
percentage_random=100

[random-write]
description=Simulates writing newly downloaded pieces
rw=randwrite
bs=256K
iodepth=16
numjobs=2
percentage_random=100

[mixed-readwrite]
description=Simulates simultaneous reading and writing
rw=randrw
bs=128K
rwmixread=70
iodepth=24
numjobs=3
percentage_random=100

[sequential-read]
description=Simulates reading larger sequential chunks
rw=read
bs=1M
iodepth=8
numjobs=1
percentage_random=0

[sequential-write]
description=Simulates writing larger sequential chunks
rw=write
bs=1M
iodepth=8
numjobs=1
percentage_random=0
```

LXC result:

```text
random-read: (g=0): rw=randread, bs=(R) 256KiB-256KiB, (W) 256KiB-256KiB, (T) 256KiB-256KiB, ioengine=libaio, iodepth=32
...
random-write: (g=0): rw=randwrite, bs=(R) 256KiB-256KiB, (W) 256KiB-256KiB, (T) 256KiB-256KiB, ioengine=libaio, iodepth=16
...
mixed-readwrite: (g=0): rw=randrw, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=24
...
sequential-read: (g=0): rw=read, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=libaio, iodepth=8
sequential-write: (g=0): rw=write, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=libaio, iodepth=8
fio-3.37
Starting 11 processes
random-read: Laying out IO file (1 file / 10240MiB)

random-read: (groupid=0, jobs=11): err= 0: pid=697: Sat Jul 27 18:23:09 2024
  Description : [Simulates reading pieces from different parts of files]
  read: IOPS=7374, BW=2038MiB/s (2138MB/s)(358GiB/180010msec)
    slat (usec): min=4, max=916643, avg=885.67, stdev=4949.76
    clat (msec): min=4, max=1040, avg=24.35, stdev=31.35
     lat (msec): min=4, max=1040, avg=25.24, stdev=32.11
    clat percentiles (msec):
     | 1.00th=[ 9], 5.00th=[ 10], 10.00th=[ 13], 20.00th=[ 16],
     | 30.00th=[ 18], 40.00th=[ 20], 50.00th=[ 22], 60.00th=[ 23],
     | 70.00th=[ 25], 80.00th=[ 27], 90.00th=[ 29], 95.00th=[ 33],
     | 99.00th=[ 124], 99.50th=[ 201], 99.90th=[ 405], 99.95th=[ 760],
     | 99.99th=[ 944]
   bw ( MiB/s): min= 59, max= 2644, per=100.00%, avg=2052.03, stdev=106.38, samples=2856
   iops : min= 224, max= 9688, avg=7423.90, stdev=324.22, samples=2856
  write: IOPS=3544, BW=1269MiB/s (1331MB/s)(223GiB/180010msec); 0 zone resets
    slat (usec): min=6, max=915863, avg=922.73, stdev=5002.70
    clat (msec): min=3, max=1021, avg=16.53, stdev=24.19
     lat (msec): min=3, max=1022, avg=17.45, stdev=25.06
    clat percentiles (msec):
     | 1.00th=[ 9], 5.00th=[ 9], 10.00th=[ 10], 20.00th=[ 11],
     | 30.00th=[ 12], 40.00th=[ 13], 50.00th=[ 14], 60.00th=[ 16],
     | 70.00th=[ 17], 80.00th=[ 18], 90.00th=[ 21], 95.00th=[ 23],
     | 99.00th=[ 85], 99.50th=[ 140], 99.90th=[ 296], 99.95th=[ 451],
     | 99.99th=[ 860]
   bw ( MiB/s): min= 33, max= 1638, per=100.00%, avg=1276.91, stdev=107.54, samples=2142
   iops : min= 94, max= 4730, avg=3565.55, stdev=225.36, samples=2142
  lat (msec) : 4=0.01%, 10=10.32%, 20=46.63%, 50=40.48%, 100=1.42%
  lat (msec) : 250=0.88%, 500=0.20%, 750=0.02%, 1000=0.04%, 2000=0.01%
  cpu : usr=1.16%, sys=2.79%, ctx=2214429, majf=0, minf=10352
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=12.2%, 16=48.0%, 32=39.8%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.1%, 16=0.1%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=1327535,638073,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=8

Run status group 0 (all jobs):
   READ: bw=2038MiB/s (2138MB/s), 2038MiB/s-2038MiB/s (2138MB/s-2138MB/s), io=358GiB (385GB), run=180010-180010msec
  WRITE: bw=1269MiB/s (1331MB/s), 1269MiB/s-1269MiB/s (1331MB/s-1331MB/s), io=223GiB (240GB), run=180010-180010msec

Disk stats (read/write):
  loop0: ios=1445458/638070, sectors=751500496/467976536, merge=0/0, ticks=15383195/7059551, in_queue=22569810, util=100.00%
```

VM results:

```text
random-read: (g=0): rw=randread, bs=(R) 256KiB-256KiB, (W) 256KiB-256KiB, (T) 256KiB-256KiB, ioengine=libaio, iodepth=32
...
random-write: (g=0): rw=randwrite, bs=(R) 256KiB-256KiB, (W) 256KiB-256KiB, (T) 256KiB-256KiB, ioengine=libaio, iodepth=16
...
mixed-readwrite: (g=0): rw=randrw, bs=(R) 128KiB-128KiB, (W) 128KiB-128KiB, (T) 128KiB-128KiB, ioengine=libaio, iodepth=24
...
sequential-read: (g=0): rw=read, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=libaio, iodepth=8
sequential-write: (g=0): rw=write, bs=(R) 1024KiB-1024KiB, (W) 1024KiB-1024KiB, (T) 1024KiB-1024KiB, ioengine=libaio, iodepth=8
fio-3.37
Starting 11 processes
random-read: Laying out IO file (1 file / 10240MiB)

random-read: (groupid=0, jobs=11): err= 0: pid=5769: Sat Jul 27 18:14:39 2024
  Description : [Simulates reading pieces from different parts of files]
  read: IOPS=252, BW=62.1MiB/s (65.1MB/s)(10.9GiB/180570msec)
    slat (usec): min=4, max=1275.3k, avg=2148.51, stdev=36456.46
    clat (usec): min=176, max=3068.6k, avg=729867.16, stdev=356792.63
     lat (msec): min=11, max=3068, avg=732.02, stdev=354.75
    clat percentiles (msec):
     | 1.00th=[ 93], 5.00th=[ 211], 10.00th=[ 292], 20.00th=[ 414],
     | 30.00th=[ 514], 40.00th=[ 600], 50.00th=[ 693], 60.00th=[ 793],
     | 70.00th=[ 902], 80.00th=[ 1028], 90.00th=[ 1200], 95.00th=[ 1351],
     | 99.00th=[ 1670], 99.50th=[ 1838], 99.90th=[ 2265], 99.95th=[ 2433],
     | 99.99th=[ 2970]
   bw ( KiB/s): min= 4864, max=225452, per=100.00%, avg=71179.55, stdev=5200.61, samples=2616
   iops : min= 16, max= 766, avg=273.29, stdev=19.40, samples=2616
  write: IOPS=78, BW=22.4MiB/s (23.4MB/s)(4036MiB/180574msec); 0 zone resets
    slat (usec): min=7, max=1134.8k, avg=5045.61, stdev=54908.75
    clat (msec): min=16, max=2458, avg=795.13, stdev=365.74
     lat (msec): min=39, max=2458, avg=800.18, stdev=368.67
    clat percentiles (msec):
     | 1.00th=[ 126], 5.00th=[ 224], 10.00th=[ 317], 20.00th=[ 460],
     | 30.00th=[ 575], 40.00th=[ 684], 50.00th=[ 785], 60.00th=[ 885],
     | 70.00th=[ 978], 80.00th=[ 1099], 90.00th=[ 1267], 95.00th=[ 1418],
     | 99.00th=[ 1754], 99.50th=[ 1854], 99.90th=[ 2140], 99.95th=[ 2265],
     | 99.99th=[ 2400]
   bw ( KiB/s): min= 3840, max=78710, per=100.00%, avg=31710.32, stdev=2369.85, samples=1765
   iops : min= 12, max= 262, avg=95.45, stdev= 8.78, samples=1765
  lat (usec) : 250=0.01%, 500=0.11%, 750=0.08%, 1000=0.01%
  lat (msec) : 20=0.02%, 50=0.17%, 100=0.59%, 250=5.91%, 500=20.11%
  lat (msec) : 750=26.61%, 1000=22.61%, 2000=23.55%, >=2000=0.23%
  cpu : usr=0.02%, sys=0.06%, ctx=12126, majf=0, minf=10353
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=5.8%, 16=42.2%, 32=51.9%, >=64=0.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.1%, 16=0.1%, 32=0.1%, 64=0.0%, >=64=0.0%
     issued rwts: total=45594,14225,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=8

Run status group 0 (all jobs):
   READ: bw=62.1MiB/s (65.1MB/s), 62.1MiB/s-62.1MiB/s (65.1MB/s-65.1MB/s), io=10.9GiB (11.8GB), run=180570-180570msec
  WRITE: bw=22.4MiB/s (23.4MB/s), 22.4MiB/s-22.4MiB/s (23.4MB/s-23.4MB/s), io=4036MiB (4232MB), run=180574-180574msec

Disk stats (read/write):
  vda: ios=46281/14508, sectors=22949376/8270400, merge=0/158, ticks=32239266/11054196, in_queue=43356955, util=99.27%
```
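To put the two runs side by side, the sketch below applies Little's law (I/Os in flight ≈ IOPS × mean latency) to numbers copied from the outputs above: the `read:`/`write:` IOPS, the mean `lat` values, the `Run status` bandwidths, and the `numjobs`/`iodepth` pairs from the job file. It is only arithmetic on the posted figures, not a new measurement, and the dictionary and function names are made up for illustration.

```python
# Little's law check on the posted fio summaries: in-flight I/Os ~= IOPS * mean latency.
# Every number is copied from the outputs in this post; nothing here runs fio.

# (numjobs, iodepth) per job section, taken from the job file.
JOB_SECTIONS = {
    "random-read": (4, 32),
    "random-write": (2, 16),
    "mixed-readwrite": (3, 24),
    "sequential-read": (1, 8),
    "sequential-write": (1, 8),
}

# (read IOPS, read mean lat in ms, write IOPS, write mean lat in ms),
# from the "read:"/"write:" lines and their "lat (msec)" averages.
RESULTS = {
    "LXC": (7374, 25.24, 3544, 17.45),
    "VM": (252, 732.02, 78, 800.18),
}

# Aggregate bandwidth in MiB/s from the "Run status group 0" lines.
BANDWIDTH = {
    "LXC": {"read": 2038.0, "write": 1269.0},
    "VM": {"read": 62.1, "write": 22.4},
}


def configured_depth(sections):
    """Maximum outstanding I/Os the job file allows: sum of numjobs * iodepth."""
    return sum(jobs * depth for jobs, depth in sections.values())


def in_flight(read_iops, read_lat_ms, write_iops, write_lat_ms):
    """Little's law N = lambda * W for each direction, with latency converted to seconds."""
    return read_iops * read_lat_ms / 1000 + write_iops * write_lat_ms / 1000


if __name__ == "__main__":
    print(f"configured depth: {configured_depth(JOB_SECTIONS)}")
    for name, row in RESULTS.items():
        print(f"{name}: ~{in_flight(*row):.1f} I/Os in flight")
    for direction in ("read", "write"):
        ratio = BANDWIDTH["LXC"][direction] / BANDWIDTH["VM"][direction]
        print(f"{direction} bandwidth LXC/VM: {ratio:.1f}x")
```

Run as-is, it reports a configured depth of 248, about 248 I/Os in flight for LXC and about 247 for the VM, and bandwidth ratios of roughly 32.8x (read) and 56.7x (write). So fio kept the queue essentially full in both environments. The IOPS gap (about 29x for reads, 45x for writes) matches the gap in mean latency per I/O (732 ms vs. 25 ms for reads, 800 ms vs. 17 ms for writes); the numbers alone do not show where in the VM's disk path that latency is added. Also note that every section landed in the same group (`g=0`, "Starting 11 processes"), so all five workloads ran at once and each figure is an aggregate. Adding fio's `stonewall` option to each section after the first would run and report them one at a time.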