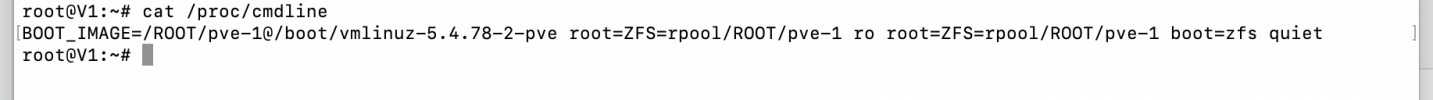

I'm assuming you have an Intel CPU, because the AMD IOMMU is enabled by default. Your /proc/cmdline does not contain intel_iommu=on, and that is probably why it is not working. Have a look at /etc/default/grub and /etc/kernel/cmdline to check whether intel_iommu=on is there. You are booting from ZFS, so you are likely using systemd-boot instead of GRUB. But your system also looks well behind on updates, so you could be booting with GRUB. Don't update now: that would only turn debugging into a moving target, and maybe it only looks old because of this issue.

Maybe all you need is to run update-grub or update-initramfs -u (which will also update systemd-boot).
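To make the check above concrete, here is a minimal sketch that looks for intel_iommu=on as an exact token in the running kernel's command line and in /etc/kernel/cmdline. The helper name has_kernel_param is made up for this example, and it only handles plain space-separated command-line files (not the quoted variables in /etc/default/grub).

```shell
#!/bin/sh
# has_kernel_param PARAM [FILE] (hypothetical helper, not a Proxmox tool):
# returns 0 if PARAM is an exact space-separated token in FILE
# (default: /proc/cmdline), 1 if it is absent, 2 if FILE is unreadable.
has_kernel_param() {
    param=$1
    file=${2:-/proc/cmdline}
    [ -r "$file" ] || return 2
    # Put each token on its own line, then require a whole-line fixed match.
    tr -s ' \t' '\n\n' < "$file" | grep -qxF -- "$param"
}

# Report on the running kernel and on the systemd-boot source file.
for f in /proc/cmdline /etc/kernel/cmdline; do
    rc=0
    has_kernel_param intel_iommu=on "$f" || rc=$?
    case $rc in
        0) echo "$f: intel_iommu=on present" ;;
        1) echo "$f: intel_iommu=on missing" ;;
        *) echo "$f: not readable" ;;
    esac
done
```

For /etc/default/grub a plain grep intel_iommu /etc/default/grub is enough, since the option would sit inside a quoted GRUB_CMDLINE_LINUX_DEFAULT or GRUB_CMDLINE_LINUX value.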

I ran the updates you suggested, no change.

/etc/kernel/cmdline looks like this:

root=ZFS=rpool/ROOT/pve-1 boot=zfs intel_iommu=on textonly video=astdrmfb video=efifb:off pcie_acs_override=downstream
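Since /etc/kernel/cmdline already lists intel_iommu=on while /proc/cmdline reportedly does not, it may help to list exactly which configured options never reached the running kernel. The sketch below does that; missing_params is a hypothetical helper name, and it assumes both files are plain space-separated command lines.

```shell
#!/bin/sh
# missing_params CONFIGURED RUNNING (hypothetical helper):
# print each token from CONFIGURED that is not an exact token in RUNNING.
missing_params() {
    tr -s ' \t' '\n\n' < "$1" | while IFS= read -r tok; do
        [ -n "$tok" ] || continue
        # Re-tokenise RUNNING and look for a whole-line fixed-string match.
        tr -s ' \t' '\n\n' < "$2" | grep -qxF -- "$tok" || printf '%s\n' "$tok"
    done
}

# Compare the configured command line with what the kernel actually booted with.
if [ -r /etc/kernel/cmdline ] && [ -r /proc/cmdline ]; then
    missing_params /etc/kernel/cmdline /proc/cmdline
fi
```

If this prints intel_iommu=on, the boot entries were probably not regenerated from /etc/kernel/cmdline, or the machine is not booting through systemd-boot at all. On Proxmox installs managed by proxmox-boot-tool, running proxmox-boot-tool refresh followed by a reboot is the usual way to rewrite them; whether that applies to this system is an assumption.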

/etc/default/grub looks like this:

# If you change this file, run 'update-grub' afterwards to update

# /boot/grub/grub.cfg.

# For full documentation of the options in this file, see:

# info -f grub -n 'Simple configuration'

GRUB_DEFAULT=0

GRUB_TIMEOUT=5

GRUB_DISTRIBUTOR="Proxmox Virtual Environment"

GRUB_CMDLINE_LINUX_DEFAULT="quiet"

GRUB_CMDLINE_LINUX="root=ZFS=rpool/ROOT/pve-1 boot=zfs"

# Disable os-prober, it might add menu entries for each guest

GRUB_DISABLE_OS_PROBER=true

# Uncomment to enable BadRAM filtering, modify to suit your needs

# This works with Linux (no patch required) and with any kernel that obtains

# the memory map information from GRUB (GNU Mach, kernel of FreeBSD ...)

#GRUB_BADRAM="0x01234567,0xfefefefe,0x89abcdef,0xefefefef"

# Uncomment to disable graphical terminal (grub-pc only)

#GRUB_TERMINAL=console

# The resolution used on graphical terminal

# note that you can use only modes which your graphic card supports via VBE

# you can see them in real GRUB with the command `vbeinfo'

#GRUB_GFXMODE=640x480

# Uncomment if you don't want GRUB to pass "root=UUID=xxx" parameter to Linux

#GRUB_DISABLE_LINUX_UUID=true

# Disable generation of recovery mode menu entries

GRUB_DISABLE_RECOVERY="true"

# Uncomment to get a beep at grub start

#GRUB_INIT_TUNE="480 440 1"
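The GRUB file above has no intel_iommu=on, so which of the two files matters depends on the bootloader actually in use. As a rough sketch: the kernel exposes /sys/firmware/efi only when it was booted via UEFI, and on a ZFS root Proxmox typically uses systemd-boot for UEFI and GRUB for legacy BIOS. That mapping is an assumption (newer installs with Secure Boot may use GRUB on UEFI too), and boot_mode is a made-up helper name.

```shell
#!/bin/sh
# boot_mode [SYSFS_ROOT] (hypothetical helper): print "uefi" if EFI firmware
# data is exposed under SYSFS_ROOT (default: /sys), otherwise "bios".
boot_mode() {
    if [ -d "${1:-/sys}/firmware/efi" ]; then
        echo uefi
    else
        echo bios
    fi
}

# Assumed mapping for a ZFS-root Proxmox install; verify on the actual system.
case $(boot_mode) in
    uefi) echo "UEFI boot: kernel options likely come from /etc/kernel/cmdline" ;;
    bios) echo "Legacy BIOS boot: kernel options likely come from /etc/default/grub" ;;
esac
```

Where available, proxmox-boot-tool status gives a more direct answer than this heuristic.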