Hello,

Yesterday I installed the latest updates from the pve-no-subscription repository.

After rebooting, I discovered that two systems using the PVSCSI controller no longer boot. Both systems use the pc-i440fx-7.0 machine type and OVMF (UEFI) firmware.

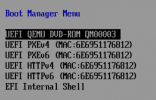

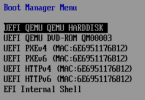

Checking the firmware setup, it seems that the system does not detect the hard disk.

The systems using the VirtIO SCSI controller are working without any problem.

We are currently running pve-qemu-kvm version 7.2.0-8.

I have tried downgrading to 7.1.0-4 without any success.

We have also tried creating new machines and attaching the existing hard drives, without success.

Only when I change the SCSI controller to VirtIO SCSI does the system attempt to boot, but it then fails due to the lack of drivers.

When selecting the LSI controllers, the BIOS also fails to recognize the hard drives.

Both systems were running the PVSCSI driver because they were migrated from the ESXi environment by an external party.

We are running four PVE servers, three of which have the updates applied. I have tried migrating the affected systems to each host, and they boot only on the server without the updates installed.

Currently the systems are running on the unpatched server, and we will try to replace the SCSI controller in the coming days.

However, it seems that something in the updates has caused this issue to appear.
