The full version of this content is published here, which includes an intro and more background details. I've tried to share the full steps/guide in this post, but I reached the post size limit, so you'll want to refer to the link provided for the full steps/guide.

Missing emojis: It looks like the forum supports a limited set of emoticons. I've used some to draw attention to key info, warnings and tips. These emojis are not present in the forum post. See the full version for those.

Inspiration: @whytf shared steps here for using proxmox-boot-tool with a USB stick to boot an existing proxmox ZFS installation.

Disclaimer

I’ve tried to include some guardrails and warnings in this content. Some of the steps are destructive. This content comes with no warranties or guarantees.

It is your responsibility to:

- Create a tested and verified backup and a rollback strategy in case something goes wrong.

- Check that the commands you execute do what you expect; prepending echo to a command will give you a preview of what your shell will interpret.

- Consider performing these steps on a lab/vm setup before performing them on a critical system.
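As a small illustration of the echo tip, here is a minimal sketch of previewing a command before running it. The drive path is a made-up placeholder, not one of the drives in this guide, and wipefs is only printed here, never executed.

```shell
# placeholder drive path for illustration only (hypothetical, not a real device)
drive=/dev/disk/by-id/ata-EXAMPLE_DRIVE

# prepending echo: the shell still expands the variable, but only prints the result
preview=$(echo wipefs --no-act --all "${drive}-part2")

# inspect what the shell would have executed
echo "$preview"
```

If the printed command is not what you expected, for example an empty variable leaving just "-part2", fix that before removing the echo.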

Background

At the time of writing, Proxmox VE is on release 8. For the last few major releases of Proxmox VE, the release notes have strongly suggested switching the bootloader from legacy boot to the proxmox-boot-tool. Sounds good in principle and the steps are well documented here. However, the steps do not cover the case where there is no space to create an ESP partition (as per the proxmox v4 installer partition scheme), so this documentation captures what I did to switch in the case where an ESP partition cannot be created on the existing rpool drives.

The system originated from a Proxmox v4 installer that used the legacy boot approach, where grub loaded the kernel and co from /boot on the ZFS rpool. This setup suffered from fragile compatibility between grub and ZFS. zpool upgrades were known to create unbootable systems with this setup. Read more.

Related to this is Fabian's quote, which comes from an indirectly related forum post:

“So this was a legacy system with grub without an ESP? That is known to be problematic (it's basically a timebomb that can explode at any write to /boot), which is why we switched to a different setup.” ~Fabian - Proxmox Staff Member [citation link].

Challenges switching to proxmox-boot-tool:

The proxmox v4 installer used a partition for the ZFS rpool instead of whole-disk mode. ZFS relies on the partition offset to locate ZFS objects and their blocks on the vdev children. So moving or shrinking the partition sector offsets of the rpool mirror to make space for a vfat/efi partition was not possible. i.e. creating a suitable vfat/efi partition was not possible without breaking ZFS.

This means the following approach would not work: https://pve.proxmox.com/wiki/ZFS:_Switch_Legacy-Boot_to_Proxmox_Boot_Tool

Problems:

- zpool upgrade on the rpool - high risk the system becomes unbootable with the legacy approach.

- Boot fragility when modifying /boot on the rpool e.g. kernel updates.

- proxmox-boot-tool could not be used without an ESP partition.

Objectives:

- Keep the rpool and existing proxmox install - avoid fresh install

- Resolve fragile boot issues - migrate /boot from ZFS to vfat partition

- Start using proxmox-boot-tool

- Be able to zpool upgrade the rpool without fear of breaking the system (boot)

- Lay the groundwork for later switching from legacy BIOS mode to UEFI mode and from grub to systemd-boot.

Infographic: visualisation of AS-IS and TARGET setup

Flow chart of the steps

I created the first revision of this flowchart to get my head around the steps prior to committing to the effort of actually doing the steps. What you see shared here is the latest revision, which reflects the actual flow and benefits from some tweaks made during the writing process.

You might wish to study this flowchart rather than reading the actual verbose steps to get an idea if any of this content can be useful to your scenario.

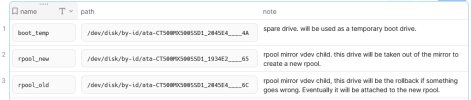

Drive overview

The name column in the following table is the name of the shell variable used in this guide.

The Steps

Prerequisites

As a prerequisite, I strongly recommend that you study the following two proxmox documents carefully and become familiar with them:

BIOS legacy OR UEFI? grub OR systemd-boot? Secure boot?

The system in my procedures was using BIOS in legacy mode, non-secure boot and grub for the bootloader.

It's important that you are aware of the following points and that you adjust your procedure accordingly:

- Is your BIOS set to legacy OR UEFI?

- Is your BIOS set to secure boot? [docs link]

- Is your bootloader grub OR systemd-boot?

Setting up the bootloader on the temporary boot drive

In this stage, the objective is to get the system booting via the proxmox-boot-tool and retire the legacy boot approach.

Bash:

# set the $boot_temp variable, the first drive we will work with

boot_temp=/dev/disk/by-id/ata-CT500MX500SSD1_2045E4____4A

# make a note and be cognizant of the logical and physical sector sizes of the drive

# ⚠ this may be different for your drive ⚠

gdisk -l $boot_temp |grep ^Sector

output:

Sector size (logical/physical): 512/4096 bytes

# check the blockdev report too

# ⚠ this may be different for your drive ⚠

blockdev --report $boot_temp

output:

RO RA SSZ BSZ StartSec Size Device

rw 256 512 4096 0 500107862016 /dev/disk/by-id/ata-CT500MX500SSD1_2045E4____4A

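# The --report shows SSZ = Sector Size, and BSZ = Block Size.

# The SSZ value matches the logical sector size reported by gdisk.

# The logical SSZ Sector Size: the sector size used by the operating system to address the device.

# The BSZ Block Size: the block size the kernel uses for the device. It does not describe the drive topology, although here it happens to equal the 4096-byte physical sector size reported by gdisk.

# The physical sector size is the sector size used by the drive firmware; blockdev --getpbsz $boot_temp reports it directly.

# The CT500MX drives in this guide are 512e: 512-byte logical sectors emulated on top of 4096-byte physical sectors (e for emulated, n for native).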

# gdisk partitions drives in logical sectors.

# The physical sector size is usually greater than or equal to the logical sector size.

# For illustration, the CT500MX drives used in this guide have 976,773,168 x 512 logical sectors = 500,107,862,016 bytes, when rounded = 466GiB = 500GB

# The drives have 122,096,646 4KiB physical sectors.

# FYI: some modern, typically enterprise drives use 4kn native sectors - 4k logical and physical sectors. You can read more about 4kn here, here and here.
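The sector arithmetic in the notes above can be reproduced with plain shell arithmetic. This is a minimal sketch using the numbers from my CT500MX drives; your drive will report different values, so substitute your own.

```shell
# values taken from the gdisk/blockdev output for the CT500MX drives in this guide
logical_sector=512          # bytes per logical sector
physical_sector=4096        # bytes per physical sector
logical_sectors=976773168   # drive size in logical sectors

# total capacity in bytes
drive_bytes=$(( logical_sectors * logical_sector ))

# number of physical sectors, and how many logical sectors share one physical sector
physical_sectors=$(( drive_bytes / physical_sector ))
ratio=$(( physical_sector / logical_sector ))

echo "bytes=$drive_bytes physical_sectors=$physical_sectors ratio=$ratio"
```

The ratio of 8 is why a partition start needs to be a multiple of 8 logical sectors at the very least on this kind of drive. The 2048-sector boundary used in this guide satisfies that.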

# make a note and be cognizant of the drive's alignment

# ⚠ this may be different for your drive/system ⚠

gdisk -l $boot_temp |grep aligned

output:

Partitions will be aligned on 2048-sector boundaries

# reference: 2048 * 512 = 1048576 bytes = 1 MiB sector boundaries

# set up the partitions on the new boot drive, paying attention to the block/sector alignment.

# ⚠ WARNING: misaligned partitions can adversely affect drive and system performance. In the case of SSDs, their lifespan may be reduced.

# Optimal partition alignment will mitigate performance issues and penalties such as Read/Modify/Write (RMW).

# more background on alignment here and here.

# a partition START and SIZE in BYTES should be divisible by the logical and physical sector size in BYTES

# partitions should only start on (be aligned with) the drive's sector boundaries, for the CT500MX drives this means 2048 sectors = 1 MiB

gdisk $boot_temp

# I created a +1M EF02 BIOS boot partition, starting at sector 2048.

# I created a +2G EF00 EFI system partition, following partition 1: start sector 4096.

# the $boot_temp drive doesn't technically need a 3rd partition, but since it's identical to the other CT500MX drives, I can create the 3rd partition now and simplify the steps required later.

# For ZFS rpool - I created a code:8300 Linux filesystem and left 1GiB of free space at the end of the drive.

# In my case the partition size was 970479616 sectors / 496885563392 bytes.

# here is $boot_temp drive and partition info in SECTORS

parted $boot_temp unit s print

output:

Model: ATA CT500MX500SSD1 (scsi)

Disk /dev/sdf: 976773168s # drive size in SECTORS

Sector size (logical/physical): 512B/4096B

Partition Table: gpt

Disk Flags:

Number  Start     End         Size        File system  Name                  Flags
 1      2048s     4095s       2048s       zfs          BIOS boot partition   bios_grub
 2      4096s     4194304s    4190209s    fat32        EFI system partition  boot, esp
 3      4196352s  974675967s  970479616s  zfs          Linux filesystem

# Notice that this drive is aligned on 2048-sector boundaries.

# Note that partition 1 starts at sector 2048, and that the delta between the END of partition 2 and the START of partition 3 is 2048 sectors. This keeps the start of partition 3 on a 1 MiB boundary.

# here is $boot_temp drive and partition info in BYTES

parted $boot_temp unit B print

output:

Model: ATA CT500MX500SSD1 (scsi)

Disk /dev/sdf: 500107862016B # drive size in BYTES

Sector size (logical/physical): 512B/4096B

Partition Table: gpt

Disk Flags:

Number  Start        End            Size           File system  Name                  Flags
 1      1048576B     2097151B       1048576B       zfs          BIOS boot partition   bios_grub
 2      2097152B     2147484159B    2145387008B    fat32        EFI system partition  boot, esp
 3      2148532224B  499034095615B  496885563392B  zfs          Linux filesystem

# check for optimal partition alignment with parted, assuming {1..3} partitions

for partition in {1..3}; do parted $boot_temp align-check optimal $partition; done

output:

1 aligned

2 aligned

3 aligned
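The parted result can also be cross-checked by hand from the partition start sectors. The following is a minimal sketch, assuming the 2048-sector boundary and the start sectors of my $boot_temp drive; is_aligned is a hypothetical helper name and it only checks the start sector, not the partition size.

```shell
# hypothetical helper: succeeds when a start sector sits on the given boundary
# $1 = start sector, $2 = boundary in sectors (defaults to 2048 = 1 MiB at 512 bytes)
is_aligned() {
    start_sector=$1
    boundary=${2:-2048}
    [ $(( start_sector % boundary )) -eq 0 ]
}

# start sectors of partitions 1 to 3 on the $boot_temp drive in this guide
for start in 2048 4096 4196352; do
    if is_aligned "$start"; then
        echo "$start aligned"
    else
        echo "$start NOT aligned"
    fi
done
```

This is not a replacement for parted align-check, just a quick way to sanity check numbers you typed into gdisk.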

# configure the bootloader

# 1. format the EFI partition

proxmox-boot-tool format ${boot_temp}-part2 --force

# 2. initialise the bootloader, read the output to check for info, warnings, errors

proxmox-boot-tool init ${boot_temp}-part2

# 3. check everything looks OK

proxmox-boot-tool status

# given this was the first time using proxmox-boot-tool, I made some sanity checks.

# ⚠ your boot UUIDs will be unique to your drive/system ⚠

esp_mount=/var/tmp/espmounts/52C0-4C16

mkdir -p $esp_mount

mount ${boot_temp}-part2 $esp_mount

# high level recursive diff

diff -rq /boot/grub/ ${esp_mount}/grub/

# inspect grub.cfg diff

vimdiff /boot/grub/grub.cfg ${esp_mount}/grub/grub.cfg

# now it is time to reboot and change the BIOS boot disk to $boot_temp drive which contains the 52C0-4C16 boot partition

shutdown -r now

# change BIOS boot disk to drive containing 52C0-4C16 partition ($boot_temp)

# save and boot

# the system should boot from the $boot_temp drive, all aspects of the system should be nominal.

Breaking the ZFS mirror on purpose

At this stage the system has started to use the proxmox-boot-tool and should have booted from the $boot_temp drive.

Now it is time to break the mirror and repurpose one of the child vdevs, $rpool_new, which will seed the new rpool.

For the next steps, it helps to have a USB hard drive attached to the system for backups and such (or a similar mountable storage device with free space).

The proxmox console installer does attempt to obtain a DHCP IP address should you wish to mount network storage.

Bash:

# take an rpool checkpoint in case we need a pool rollback point

zpool checkpoint rpool
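For reference, here is a hedged sketch of how a checkpoint can be inspected, rewound to, or discarded. The function names are hypothetical wrappers of my own; the zpool subcommands and options are the ones described in the zpool-checkpoint and zpool-import man pages. Rewinding throws away everything written after the checkpoint, and when working from the installer shell the import will likely need extra options (such as -f or -R) that are not shown here.

```shell
# hypothetical wrappers around the zpool checkpoint commands; $1 = pool name

# show how much space the checkpoint currently holds
checkpoint_status() {
    zpool get checkpoint "$1"
}

# ⚠ destructive: rewind the pool to the checkpoint, discarding all later changes
rewind_to_checkpoint() {
    zpool export "$1" && zpool import --rewind-to-checkpoint "$1"
}

# discard the checkpoint once you are satisfied the pool is healthy
discard_checkpoint() {
    zpool checkpoint --discard "$1"
}
```

As far as I understand the man page, some operations (vdev attach/detach/removal and mirror splitting) are refused while a checkpoint exists, so remember to discard it before changing the pool layout.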

# reboot and boot from the proxmox installer, choose console mode

shutdown -r now

# after console mode has loaded, press CTRL+ALT+F3 for root shell

# .oOo.oOo.oOo.oOo.oOo.oOo.oOo.oOo.oOo.oOo.oOo.oOo.oOo.oOo.oOo.

# console quality of life improvements, misc prerequisites and install required packages

apt update; apt install vim zstd pv mbuffer

# keyboard layout

vim /etc/default/keyboard # set to "us" or your preferred layout

# set up the console with the keyboard change

setupcon

# mount storage for backups and such to /mnt/<dir>

backup_path=/mnt/usb5TB

mkdir $backup_path; mount /path/to/device/partition $backup_path

# enable process substitution https://askubuntu.com/a/1250789

ln -s /proc/self/fd /dev/fd

# dirs for backups and mounting the rpool old and new

mkdir -p ${backup_path}/backup /mnt/rpool-old /mnt/rpool-new

# set variable for the drive we will work on

rpool_new=/dev/disk/by-id/ata-CT500MX500SSD1_1934E2____65
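Because the following steps are destructive, it may be worth confirming that the variable really points at the drive you think it does. This is a minimal sketch of such a guard; require_block_device is a hypothetical helper name, not part of any tool used in this guide.

```shell
# hypothetical guard: succeeds only if the path resolves to a block device,
# and prints which kernel device node the by-id path points at
require_block_device() {
    if [ -b "$1" ]; then
        echo "$1 -> $(readlink -f "$1")"
    else
        echo "not a block device: $1" >&2
        return 1
    fi
}
```

Usage would be something like: require_block_device "$rpool_new" || echo "STOP and check the variable". An empty or mistyped variable then fails loudly instead of being passed to dd or wipefs.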

# for the next steps, I considered using zpool split or zpool detach, but decided against it. I wanted to test the resilience of the rpool when wiping one of the ZFS mirror vdev child drives. For my scenario I didn't see a benefit of the split or detach approach.

# full backup of $rpool_new drive, includes partition table and boot sector

dd iflag=direct if=$rpool_new bs=128k | pv | zstdmt --stdout > ${backup_path}/backup/rpool-old.dd.zst ; sync
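One way to gain confidence in the dd image before anything is wiped is to compare it byte for byte with the drive it came from. This is a sketch under my own assumptions: verify_image is a hypothetical helper, it assumes zstdcat is available (it ships with the zstd package installed earlier), and it must run before any command writes to the drive. Expect it to take roughly as long as the backup itself.

```shell
# hypothetical helper: compare a compressed image against its source, byte for byte
# $1 = image file, $2 = source device or file, $3 = decompressor (defaults to zstdcat)
verify_image() {
    image=$1
    source_dev=$2
    decompress=${3:-zstdcat}
    # cmp reads the decompressed stream on stdin ("-") and exits non-zero on any difference
    "$decompress" "$image" | cmp - "$source_dev"
}
```

Usage would be: verify_image ${backup_path}/backup/rpool-old.dd.zst $rpool_new. A quicker but weaker check is zstdmt --test on the image, which only confirms that the compressed file itself is intact.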

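# backup the signatures of the $rpool_new drive and its partitions, saved to $HOME

# ⚠ note: without --no-act, wipefs --all erases the signatures as well as backing them up, so these commands are already destructive ⚠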

wipefs --all --backup ${rpool_new}-part2

wipefs --all --backup ${rpool_new}-part1

wipefs --all --backup $rpool_new

# move the backup files to ${backup_path}/backup

mv -iv ~/wipefs* ${backup_path}/backup

# secondary partition backup, I wanted to compare wipefs output vs. sgdisk output

# AFAIK, from my quick checks the sgdisk backup only contains the partition table but not the filesystem data, at least for the ZFS partition ${rpool_new}-part2

sgdisk -b=${backup_path}/sgdisk-rpool-old.bin $rpool_new

# recap: at this stage you should have

# 1. partition table and filesystem signature backups from the wipefs --all --backup commands

# 2. full $rpool_new drive backup via dd including boot sector, partition table, filesystems and filesystem data

# 3. the $rpool_old drive should be operational and the system should boot from it

# it doesn't hurt to test your dd backup by restoring it somewhere, even to $rpool_new drive

# ⚠ data destruction warning ⚠

# ⚠ the following set of commands are destructive, make sure you have a tested backup

# wipe the boot sector of $rpool_new

dd if=/dev/zero of=$rpool_new bs=512 count=1

# DRY RUN: wipe the partition table

wipefs --no-act --all ${rpool_new}-part2

wipefs --no-act --all ${rpool_new}-part1

wipefs --no-act --all ${rpool_new}

# study the output of the previous commands carefully to ensure that the following operation will do what you expect

# wipe the partition table

wipefs --all ${rpool_new}-part2

wipefs --all ${rpool_new}-part1

wipefs --all ${rpool_new}

# consider saving ~/.bash_history to ${backup_path}/backup, for example:

history -w ; cp -i ~/.bash_history ${backup_path}/backup ; sync

# reboot and verify the system is operational - rpool mirror will be degraded

I reached the post size limit of ~16k characters. The rest of the steps are available here.
