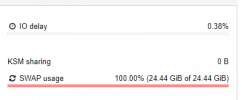

SWAP usage 100%

- Thread starter Exner

- Start date

Hi,

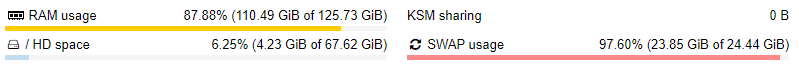

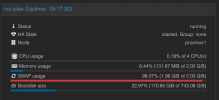

Can you also show your RAM usage and give some more details, such as how many VMs/CTs are running and how much memory they use? Also, do you use ZFS?

Generally, you could try to reduce "swappiness". This makes the kernel use swap space less aggressively. You can do this with the `sysctl -w vm.swappiness=10` command. More information about the swappiness values and their significance is available in the manual [1].

[1]: https://pve.proxmox.com/pve-docs/pve-admin-guide.html#zfs_swap
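Note that `sysctl -w` only changes the running kernel, so the value reverts at the next reboot. As a minimal sketch (not part of the original reply), the Python below reads the current value and builds the line one would typically place in a file under `/etc/sysctl.d/` to make the setting persistent. The helper names and the file name `99-swappiness.conf` are assumptions made for illustration.

```python
from pathlib import Path

# Standard procfs location of the swappiness tunable on Linux.
SWAPPINESS_PATH = Path("/proc/sys/vm/swappiness")


def parse_swappiness(text: str) -> int:
    """Parse the content of /proc/sys/vm/swappiness (a single integer)."""
    return int(text.strip())


def persistent_sysctl_line(value: int) -> str:
    """Return a sysctl.d-style line for the given swappiness value."""
    if value < 0:
        raise ValueError("swappiness cannot be negative")
    return f"vm.swappiness = {value}"


if __name__ == "__main__":
    try:
        current = parse_swappiness(SWAPPINESS_PATH.read_text())
        print(f"current vm.swappiness: {current}")
    except (OSError, ValueError):
        print("could not read vm.swappiness (not a Linux host?)")
    # Hypothetical target file: /etc/sysctl.d/99-swappiness.conf
    print(persistent_sysctl_line(10))
```

The script only prints the line; writing it to disk and reloading the settings is left to the administrator.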

I use 93% of my RAM and run 10 VMs.

I am new to Proxmox. I don't think I use ZFS.

I don't have much experience. If I do what you suggest, will there be lag in the VMs?

> Is there a solution to this?

This depends on what you want:

- buy more RAM

- configure more RAM

- run fewer VMs, or give each one less RAM.

I can't buy more RAM. I already have 128 GB.

Someone told me I should enable zram. Is that right?

> I can't buy more RAM. I already have 128 GB.

Why not? Is this an entry-level Xeon with a maximum of 128 GB? Then go and buy mid-tier server hardware. There, the maximum amount of RAM is 4-8 TB.

> Someone told me I should enable zram. Is that right?

That will help, of course. But in the end, your machine is not big enough to run your workload. Increasing swap will not increase performance.

As @LnxBil has pointed out, the best way forward here would be to increase the amount of RAM. If you cannot do that, consider decreasing the workload.

If you absolutely cannot move forward with either of these two options, you can enable zram, but that will only help to a degree, as it basically just compresses data that would otherwise be swapped out. Compression can only go so far, so don't expect too much. Note that there have also been reports of soft lockups when using zram with Proxmox VE. You can read more on that in the wiki [1].

[1]: https://pve.proxmox.com/wiki/Zram

> Would it help to enable KSM sharing?
>
> It will take a hit on the CPU, but it will also "free" some RAM and swap.

I think this will only buy the author of this thread some time.

If it is too much, it is too much.

If the swap is already at 100%, then I think there is not much to do other than increase resources or reduce load.

> All my nodes swap, even with tons of RAM available.

I usually set the swappiness to 0. Then it won't swap at all unless it would otherwise need to kill guests. So there is no swap performance penalty and no additional SSD wear, but still OOM protection.

> Would it help to enable KSM sharing?
>
> It will take a hit on the CPU, but it will also "free" some RAM and swap.

That also really depends on the use case. When you can't trust the guests, it's recommended to disable KSM, as KSM weakens the isolation between them. How much KSM saves also really depends on the running guests. Without ZFS (where the host's ARC and the guests' page caches often hold the same data in RAM), or with a lot of VMs running totally different services or OSs, the RAM savings might not be that high.

But yes, here it works fine and is saving me 9-11 GB of 64 GB RAM.
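To check how much KSM is saving on a particular host, one rough approach is to read the kernel's KSM counters under `/sys/kernel/mm/ksm/`. The sketch below assumes the `pages_sharing` counter approximates the number of pages saved and multiplies it by the page size. The function names are hypothetical, and the result is only an estimate.

```python
import os
from pathlib import Path

# Standard sysfs directory for KSM counters on Linux.
KSM_DIR = Path("/sys/kernel/mm/ksm")


def ksm_saved_bytes(pages_sharing: int, page_size: int) -> int:
    """Estimate memory saved by KSM as pages_sharing * page_size."""
    return pages_sharing * page_size


def read_ksm_counter(name: str, base: Path = KSM_DIR):
    """Return a KSM counter as int, or None if it cannot be read."""
    try:
        return int((base / name).read_text().strip())
    except (OSError, ValueError):
        return None


if __name__ == "__main__":
    sharing = read_ksm_counter("pages_sharing")
    if sharing is None:
        print("KSM counters not available on this host")
    else:
        page_size = os.sysconf("SC_PAGE_SIZE")
        saved_gib = ksm_saved_bytes(sharing, page_size) / 1024**3
        print(f"KSM is saving roughly {saved_gib:.1f} GiB")
```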

> I usually set the swappiness to 0. Then it won't swap at all unless it would otherwise need to kill guests. So there is no swap performance penalty and no additional SSD wear, but still OOM protection.

I set it to 10 on my nodes.

Are you using ZFS?

The ARC usage does not show up in the UI, so you might have less RAM available than is apparent.

I am, and I have the ARC capped at 16G max.

> The ARC usage does not show up in the UI, so you might have less RAM available than is apparent.

I always thought that the ARC was unfortunately counted as used memory, in contrast to the normal Linux filesystem cache.

> I am, and I have the ARC capped at 16G max.

That can easily be checked with `arc_summary`, in which case I don't have an answer for your observation. Unless the limit is not being honored correctly (I had this once...).
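Besides `arc_summary`, the raw ARC counters can be read from `/proc/spl/kstat/zfs/arcstats` on hosts with ZFS loaded. The following is a minimal sketch that parses that file and compares the current ARC size (`size`) against the configured maximum (`c_max`). The sample text and its numbers are invented for illustration and are not taken from this thread.

```python
from pathlib import Path

ARCSTATS_PATH = Path("/proc/spl/kstat/zfs/arcstats")

# Invented sample in the kstat layout: a header, then "name type data" rows.
SAMPLE = """\
9 1 0x01 123 33456 123456789 987654321
name                            type data
size                            4    12884901888
c_max                           4    17179869184
"""


def parse_arcstats(text: str) -> dict:
    """Map each kstat name to its integer value, skipping header lines."""
    stats = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) == 3 and parts[2].isdigit():
            stats[parts[0]] = int(parts[2])
    return stats


if __name__ == "__main__":
    try:
        text = ARCSTATS_PATH.read_text()
    except OSError:
        text = SAMPLE  # fall back to the invented sample on non-ZFS hosts
    stats = parse_arcstats(text)
    gib = 1024**3
    print(f"ARC size: {stats['size'] / gib:.1f} GiB")
    print(f"ARC max:  {stats['c_max'] / gib:.1f} GiB")
```

If `size` sits well above `c_max` for a long time, that would be consistent with the limit not being honored.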

About 2% of your memory is in swap and IO delay is low, so maybe there is no problem? Some memory has been swapped out, but your system does not appear to be reading heavily from swap. Therefore it looks like swap just gave you 8 GB more to use for other things. Are you experiencing problems (besides the red color of the 100% swap usage)?
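The "percent of memory in swap" figure is simple arithmetic on `/proc/meminfo`: used swap (`SwapTotal - SwapFree`) divided by `MemTotal`. A minimal sketch follows. The sample numbers are made up and do not describe any machine in this thread, and the function names are hypothetical.

```python
from pathlib import Path

MEMINFO_PATH = Path("/proc/meminfo")

# Made-up sample: 64 GiB RAM, 8 GiB swap, swap completely full.
SAMPLE = """\
MemTotal:       67108864 kB
SwapTotal:       8388608 kB
SwapFree:              0 kB
"""


def parse_meminfo(text: str) -> dict:
    """Map each /proc/meminfo key to its numeric value (kB for most keys)."""
    values = {}
    for line in text.splitlines():
        key, sep, rest = line.partition(":")
        fields = rest.split()
        if sep and fields and fields[0].isdigit():
            values[key.strip()] = int(fields[0])
    return values


def swap_share_of_ram(meminfo: dict) -> float:
    """Used swap as a percentage of total RAM."""
    used = meminfo["SwapTotal"] - meminfo["SwapFree"]
    return 100.0 * used / meminfo["MemTotal"]


if __name__ == "__main__":
    try:
        text = MEMINFO_PATH.read_text()
    except OSError:
        text = SAMPLE  # fall back to the made-up sample off Linux
    print(f"swap in use equals {swap_share_of_ram(parse_meminfo(text)):.1f}% of RAM")
```

A full swap device can therefore still be a small share of total memory, which is the point made in the post above.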

arc_summary:

Code:

ARC size (current): < 0.1 % 17.5 KiB

Target size (adaptive): 73.8 % 11.8 GiB

Min size (hard limit): 50.0 % 8.0 GiB

Max size (high water): 2:1 16.0 GiB

No problems yet. I just can't explain why it is happening when it shouldn't be.

Also keep in mind that you will swap

- if you're running containers and their assigned RAM is not enough, even if the host has enough RAM free.

- if you're requesting large memory segments and, due to memory fragmentation, do not have enough contiguous memory available (see /proc/buddyinfo). Swapping is the only automatic method to get smaller segments out of the way.
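To make the fragmentation point concrete: each column in `/proc/buddyinfo` is the number of free blocks of a given order, where order n means 2^n contiguous pages. The sketch below parses that file and counts the free blocks at or above a chosen order. The sample line is invented, and the function names are hypothetical.

```python
from pathlib import Path

BUDDYINFO_PATH = Path("/proc/buddyinfo")

# Invented sample line with 11 columns (orders 0 through 10).
SAMPLE = "Node 0, zone   Normal   4381   2532   1091    320     45      3      0      0      0      0      0\n"


def parse_buddyinfo(text: str) -> dict:
    """Map (node, zone) to the list of free block counts, indexed by order."""
    zones = {}
    for line in text.splitlines():
        parts = line.split()
        # Expected shape: Node <n>, zone <name> <order 0> <order 1> ...
        if len(parts) < 5 or parts[0] != "Node" or parts[2] != "zone":
            continue
        node = int(parts[1].rstrip(","))
        zones[(node, parts[3])] = [int(count) for count in parts[4:]]
    return zones


def free_blocks_at_or_above(counts: list, order: int) -> int:
    """Number of free blocks that hold at least 2**order contiguous pages."""
    return sum(counts[order:])


if __name__ == "__main__":
    try:
        text = BUDDYINFO_PATH.read_text()
    except OSError:
        text = SAMPLE  # fall back to the invented sample off Linux
    for (node, zone), counts in parse_buddyinfo(text).items():
        big = free_blocks_at_or_above(counts, 4)
        print(f"node {node} zone {zone}: {big} free blocks of order >= 4")
```

Many free pages in the low orders combined with zeros in the high orders is the fragmented state described in the second bullet.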

> No problems yet. I just can't explain why it is happening when it shouldn't be.

How much it swaps is a complicated interaction between the processes and VMs that are running, their memory allocations, file I/O, and probably other factors (some of which can be tuned).

It is normal for the Linux kernel to swap out allocated but not actively used memory, which is good because it leaves more memory for your VMs. You can disable this behavior, or double your swap space several times until it is at a percentage that makes you happy.