Hi @pfornara ,

do you also use ZFS for the datastore or something else? What disk layout?

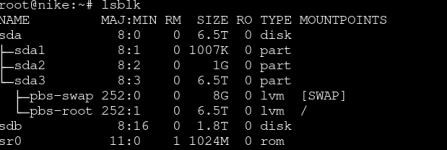

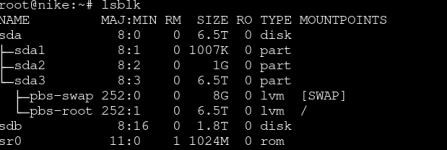

No ZFS, just LVM:

Hardware RAID on an HP ProLiant ML350p Gen8 with a Smart Array P420i controller (firmware 3.42) and 8 disks.

Hi @pfornara ,

do you also use ZFS for the datastore or something else? What disk layout?

The ZFS kernel module is the same version, 2.3.4+pve1, in both kernel 6.14 and 6.17, so the likely cause of the issue lies in the rest of the kernel code. Unfortunately, the difference between 6.14 and 6.17 is very large. If anybody is not using ZFS and is still affected by this issue, you could test mainline builds to help narrow it down:

https://kernel.ubuntu.com/mainline/v6.15/

https://kernel.ubuntu.com/mainline/v6.16/

(the amd64/linux-image... and amd64/linux-modules... packages need to be installed).

Hi,

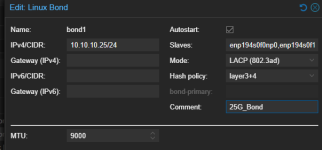

I think it's not a storage backend issue; it looks network related. Today, with kernel 6.14, the backup of one VM was slow, but it did not stall: it finished, just slowly. I'm using network bonds with MTU 9000 on both sides, PVE and PBS. Is anyone else using network bonds with jumbo frames?

Best

Knuut

Does the issue also show up independently of PBS, e.g. when running a fio benchmark on the PBS host booted into the affected kernel version and comparing the results to those obtained with an older, unaffected one?

You could check by running the following test from a directory on the filesystem backing the datastore (make sure you have enough free space).

fio --ioengine=libaio --direct=1 --sync=1 --rw=write --bs=4M --numjobs=4 --iodepth=64 --runtime=600 --time_based --name write_4M --filename=write_test.fio --size=100G

Hi Chris,

attached you'll find two fio benchmarks executed on kernel 6.14 and 6.17. I don't see any obvious differences between the two tests.
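For anyone who wants to compare the two runs numerically rather than by eye, here is a minimal sketch. It assumes both fio runs were repeated with `--output-format=json --output=<file>` added to the command above, and it relies on fio's JSON layout of a top-level `jobs` list where each job has a `write.bw` value in KiB/s. The function names are made up for illustration.

```python
import json


def total_write_bw_kib(fio_result: dict) -> float:
    """Sum the write bandwidth (KiB/s) over all jobs of one fio JSON result."""
    return sum(job["write"]["bw"] for job in fio_result["jobs"])


def relative_change(baseline: float, candidate: float) -> float:
    """Percent change of candidate relative to baseline (negative = slower)."""
    return (candidate - baseline) / baseline * 100.0


def compare_runs(baseline_json: str, candidate_json: str) -> float:
    """Compare two fio JSON documents given as strings.

    baseline_json: output of the run on the older kernel (e.g. 6.14)
    candidate_json: output of the run on the newer kernel (e.g. 6.17)
    """
    base = total_write_bw_kib(json.loads(baseline_json))
    cand = total_write_bw_kib(json.loads(candidate_json))
    return relative_change(base, cand)
```

Calling `compare_runs(open("fio-6.14.json").read(), open("fio-6.17.json").read())` (file names are placeholders) returns the percent difference in aggregate write bandwidth. A value close to zero would support the observation that local storage performance is not what changed between the kernels.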

Thanks! Can you also share your network hardware, the driver in use, and the configuration (e.g. MTU, bond config, etc.)?

Hi,

Same problem here: backups are very slow and freeze, and VM disks get corrupted (several Windows VMs), with PVE 8.4 and PBS 4.1.

So I have upgraded the cluster (6 nodes, Ceph) to 9.1.1 and will now wait, but:

- I am in the middle of the upgrade, so 4 nodes are on 9.1.1 and the other 2 nodes are still on the latest 8.4.

- If I restore from a 9.1 node, it is really slow (2 hours for 1% of a 700 GB drive that is 25% full).

- If I restore from an 8.4 node, the full restore takes less than 1 hour.

Now I need to suspend all backups, because the VMs get frozen or corrupted by them.

PS: everything is Dell hardware: R730xd with 12 SSDs + 12 SAS drives, and 4x10 Gbit in a single bond with different VLANs for Ceph and management (and VMs using SDN). PBS has 2x10 Gbit and 12x8 TB SAS drives for the backup pool.

Welcome to the party

Do not hold off on finishing the upgrade of your PVE cluster. PBS is the problem here, so either do not upgrade PBS or 'downgrade' PBS to the 6.14 kernel. The PVE machines are not the issue here and seem to be safe to upgrade to 9.

For right now in our env I have:

- Disabled all automated backups, and will manually start them tonight.

- Downgraded the PBS kernel to 6.14 and the PBS version to 4.0.19; see the comments on page 3 of this thread.

I have a slowness problem with restores too, and I can confirm that with an 8.4 host the problem disappears.

When restoring with a 9.1.1 host, after a couple of minutes I see only one chunk per minute (or less); right now, for example, the restore process is frozen at 35% (128 GB drive).

PBS is booted with the 6.14.11-4 kernel.

Very strange...

- 8 PVEs on 8.4.14: no issues restoring from PBS 4.1.0 with the 6.14.11 kernel

- 1 test PVE on 9.1.1: no issues restoring from PBS 4.1.0 with the 6.14.11 kernel

Maybe this is something unrelated to the PBS kernel issue seen with backups?

On a 9.1.1 node:

uname -a

Linux nodo4-cluster1 6.17.2-2-pve #1 SMP PREEMPT_DYNAMIC PMX 6.17.2-2 (2025-11-26T12:33Z) x86_64 GNU/Linux

I use the same hardware for PBS and PVE (Dell R730), both with a bond of onboard 10 Gbit Ethernet ports (2 or 4), Broadcom NetXtreme II. If the kernel problem is related to this chipset, do I need to downgrade the kernel on PVE as well?
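Since several reports here involve bonds and jumbo frames, it may help if everyone posts the same details. Below is a small sketch for collecting them. It assumes the usual Linux locations `/proc/net/bonding/<bond>` and `/sys/class/net/<iface>/mtu`; the function names and the bond name `bond0` are only examples and not anything specific to PVE or PBS.

```python
from pathlib import Path


def parse_bonding(text: str) -> dict:
    """Extract bond mode and per-slave MII status from /proc/net/bonding text."""
    info = {"mode": None, "slaves": {}}
    current = None  # slave section we are currently inside, if any
    for line in text.splitlines():
        key, sep, value = line.partition(":")
        if not sep:
            continue  # skip blank lines and lines without "key: value"
        key, value = key.strip(), value.strip()
        if key == "Bonding Mode":
            info["mode"] = value
        elif key == "Slave Interface":
            current = value
            info["slaves"][current] = None
        elif key == "MII Status" and current is not None:
            # Keep only the first status line seen inside a slave section;
            # the bond-level status before any slave is ignored.
            if info["slaves"][current] is None:
                info["slaves"][current] = value
    return info


def read_mtu(iface: str, sys_root: str = "/sys/class/net") -> int:
    """Read the configured MTU of an interface from sysfs."""
    return int(Path(sys_root, iface, "mtu").read_text().strip())
```

On a host, `parse_bonding(Path("/proc/net/bonding/bond0").read_text())` together with `read_mtu("bond0")` gives the bond mode, the link state of each slave, and the MTU. The driver and firmware version of each slave can be added from `ethtool -i <iface>`.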
