Hello everyone,

Since yesterday, after updating to PVE 9.1.1 and PBS 4.0.20 (both from the no-subscription repository), backups have become super slow.

Obviously, after the updates, I restarted both the nodes and the VMs.

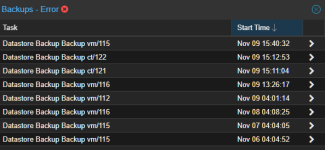

I found my daily backup job still running hours later.

Some VMs had even frozen. I had to kill all backup processes, both on the PVE nodes and on the PBS.

After killing them, I also had to unlock the VMs that were being backed up and force them to stop.

Once normal operation was restored, I started a manual backup, but for some VMs it is still very slow, and in some cases it even aborts with a timeout.

For example:

```
INFO: starting new backup job: vzdump 103 --storage BackupRepository --notes-template '{{guestname}}' --node pve03 --mode snapshot --notification-mode notification-system --remove 0
INFO: Starting Backup of VM 103 (qemu)
INFO: Backup started at 2025-11-21 07:09:48
INFO: status = running
INFO: VM Name: HomeSrvWin01
INFO: include disk 'scsi0' 'CephRBD:vm-103-disk-0' 90G
INFO: backup mode: snapshot
INFO: ionice priority: 7
INFO: creating Proxmox Backup Server archive 'vm/103/2025-11-21T13:09:48Z'
INFO: issuing guest-agent 'fs-freeze' command
INFO: issuing guest-agent 'fs-thaw' command
INFO: started backup task '798f2410-2ddf-4552-81a9-df71b384288f'
INFO: resuming VM again
INFO: scsi0: dirty-bitmap status: created new
INFO: 0% (700.0 MiB of 90.0 GiB) in 3s, read: 233.3 MiB/s, write: 141.3 MiB/s
INFO: 1% (1.0 GiB of 90.0 GiB) in 6s, read: 112.0 MiB/s, write: 30.7 MiB/s
INFO: 2% (1.8 GiB of 90.0 GiB) in 13s, read: 115.4 MiB/s, write: 29.1 MiB/s
INFO: 3% (2.8 GiB of 90.0 GiB) in 20s, read: 144.0 MiB/s, write: 17.1 MiB/s
INFO: 4% (3.6 GiB of 90.0 GiB) in 54s, read: 25.4 MiB/s, write: 9.5 MiB/s
ERROR: VM 103 qmp command 'query-backup' failed - got timeout
INFO: aborting backup job
ERROR: VM 103 qmp command 'backup-cancel' failed - unable to connect to VM 103 qmp socket - timeout after 5973 retries
INFO: resuming VM again
ERROR: Backup of VM 103 failed - VM 103 qmp command 'cont' failed - unable to connect to VM 103 qmp socket - timeout after 449 retries
INFO: Failed at 2025-11-21 07:35:47
INFO: Backup job finished with errors
INFO: notified via target `HeracleosMailServer`
TASK ERROR: job errors
```

When this happens, the VM is completely frozen.

The VM console is inaccessible, and the Summary page shows that even the QEMU guest agent does not appear to be running.

The only way to unblock it is to force a stop.
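The log lines `unable to connect to VM 103 qmp socket` suggest that the QEMU process itself stopped answering its monitor, not just the guest agent (which talks over a separate channel). A minimal Python sketch for checking that directly: it connects to the VM's QMP socket, does the `qmp_capabilities` handshake and sends `query-status`, failing if any step takes longer than a timeout. It assumes the default Proxmox VE socket path `/var/run/qemu-server/<vmid>.qmp`; QEMU serves one QMP client at a time on that socket, so keep the probe short and avoid running it while vzdump is actively issuing commands.

```python
import json
import socket
import sys


def qmp_probe(socket_path, timeout=3.0):
    """Return the run state reported by QMP 'query-status'.

    Raises OSError (including socket.timeout) if the monitor does not
    answer within `timeout` seconds at any step.
    """
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.settimeout(timeout)
        sock.connect(socket_path)
        with sock.makefile("r", encoding="utf-8") as reader:

            def read_reply():
                # Skip asynchronous {"event": ...} messages until a reply arrives.
                while True:
                    line = reader.readline()
                    if not line:
                        raise ConnectionError("QMP socket closed by QEMU")
                    message = json.loads(line)
                    if "event" not in message:
                        return message

            def execute(command):
                sock.sendall((json.dumps({"execute": command}) + "\n").encode())
                reply = read_reply()
                if "error" in reply:
                    raise RuntimeError(f"{command} failed: {reply['error']}")
                return reply["return"]

            read_reply()                 # greeting: {"QMP": {...}}
            execute("qmp_capabilities")  # leave capabilities-negotiation mode
            return execute("query-status")["status"]


if __name__ == "__main__" and len(sys.argv) > 1:
    vmid = sys.argv[1]
    # Assumed default Proxmox VE location of the per-VM QMP socket.
    path = f"/var/run/qemu-server/{vmid}.qmp"
    try:
        print(f"VM {vmid}: {qmp_probe(path)}")
    except OSError as exc:
        print(f"VM {vmid}: QMP not responding ({exc!r})")
```

Run as root on the node hosting the VM, e.g. with `103` as the argument. A prompt `running` or `paused` means QEMU's main loop still answers even if the guest looks frozen; a timeout points at QEMU itself being blocked, for example on I/O to the backup target.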

All VM virtual disks are stored on Ceph RBD storage spanning the 3 PVE nodes.

PBS runs as a VM, but its disks (including the backup repository) are qcow2 files on an NFS share hosted on a Synology NAS.
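With this layout, every datastore write passes through several storage layers (the PBS guest filesystem, the qcow2 image and the NFS mount) before reaching the NAS. A rough way to check that path from inside the PBS VM is to time a series of fsync'd 4 MiB writes into the datastore directory. 4 MiB matches the fixed chunk size PBS uses for VM disk images (before compression), but the pattern here (one new file per write, fsync after each) is a pessimistic approximation, not how PBS actually writes. A minimal Python sketch; the target directory is passed on the command line (no datastore path is assumed), and the test files are deleted afterwards.

```python
import os
import sys
import tempfile
import time


def write_throughput(directory, count=64, chunk_mib=4):
    """Write `count` files of `chunk_mib` MiB into `directory`, fsync each one,
    delete them again, and return the average throughput in MiB/s."""
    payload = os.urandom(chunk_mib * 1024 * 1024)  # incompressible test data
    paths = []
    try:
        start = time.monotonic()
        for _ in range(count):
            fd, path = tempfile.mkstemp(dir=directory, prefix="probe-")
            paths.append(path)
            with os.fdopen(fd, "wb") as f:
                f.write(payload)
                f.flush()
                os.fsync(f.fileno())  # force the data through every layer
        elapsed = time.monotonic() - start
    finally:
        for path in paths:
            os.unlink(path)
    return count * chunk_mib / max(elapsed, 1e-9)


if __name__ == "__main__" and len(sys.argv) > 1:
    target = sys.argv[1]
    print(f"{target}: {write_throughput(target):.1f} MiB/s")
```

Running it against the datastore directory and, for comparison, against local storage on one of the PVE nodes gives a baseline for how much the qcow2-on-NFS path costs.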

This is the first time this has happened since I installed the cluster months ago.

What steps can I take to diagnose the problem?
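As a first step, the progress lines in the vzdump log above can be turned into per-interval throughput to see exactly where the transfer stalled. A minimal Python sketch, assuming the progress-line format shown in the log; the handling of elapsed times such as `1m 15s` is an assumption about how longer jobs are printed.

```python
import re

# Size units as printed by vzdump, converted to MiB.
UNIT_TO_MIB = {"KiB": 1 / 1024, "MiB": 1.0, "GiB": 1024.0, "TiB": 1024.0 ** 2}

# Matches progress lines such as
#   INFO: 4% (3.6 GiB of 90.0 GiB) in 54s, read: 25.4 MiB/s, write: 9.5 MiB/s
# Assumption: longer jobs print elapsed time as e.g. "1m 15s" or "1h 2m 3s".
PROGRESS_RE = re.compile(
    r"INFO: +\d+% \(([\d.]+) (KiB|MiB|GiB|TiB) of [\d.]+ \w+\) "
    r"in ((?:\d+h )?(?:\d+m )?\d+s)"
)

# The five progress lines from the failed backup of VM 103.
SAMPLE_LOG = """\
INFO: 0% (700.0 MiB of 90.0 GiB) in 3s, read: 233.3 MiB/s, write: 141.3 MiB/s
INFO: 1% (1.0 GiB of 90.0 GiB) in 6s, read: 112.0 MiB/s, write: 30.7 MiB/s
INFO: 2% (1.8 GiB of 90.0 GiB) in 13s, read: 115.4 MiB/s, write: 29.1 MiB/s
INFO: 3% (2.8 GiB of 90.0 GiB) in 20s, read: 144.0 MiB/s, write: 17.1 MiB/s
INFO: 4% (3.6 GiB of 90.0 GiB) in 54s, read: 25.4 MiB/s, write: 9.5 MiB/s
"""


def parse_duration(text):
    """Convert '54s', '1m 15s' or '1h 2m 3s' to seconds."""
    factors = {"h": 3600, "m": 60, "s": 1}
    return sum(int(n) * factors[u] for n, u in re.findall(r"(\d+)([hms])", text))


def progress_points(log_text):
    """Return (elapsed_seconds, processed_mib) for every progress line."""
    return [
        (parse_duration(m.group(3)), float(m.group(1)) * UNIT_TO_MIB[m.group(2)])
        for m in PROGRESS_RE.finditer(log_text)
    ]


def interval_rates(points):
    """Average MiB/s processed between consecutive progress lines."""
    return [
        (t0, t1, (d1 - d0) / (t1 - t0))
        for (t0, d0), (t1, d1) in zip(points, points[1:])
        if t1 > t0
    ]


def find_slowdowns(rates, factor=0.5):
    """Intervals slower than `factor` times the first interval's rate."""
    if not rates:
        return []
    baseline = rates[0][2]
    return [(t0, t1, r) for t0, t1, r in rates if r < baseline * factor]


if __name__ == "__main__":
    rates = interval_rates(progress_points(SAMPLE_LOG))
    for t0, t1, rate in rates:
        print(f"{t0:>4}s -> {t1:>4}s: {rate:6.1f} MiB/s")
    print("slow intervals:", [(t0, t1) for t0, t1, _ in find_slowdowns(rates)])
```

On these five lines the first three intervals average about 108, 117 and 146 MiB/s, while the interval from 20 s to 54 s drops to about 24 MiB/s; no further progress line appears before the `query-backup` timeout. The figures differ slightly from vzdump's own `read:` values, likely because the sizes in the log are rounded to 0.1 GiB.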

Thank you for your suggestions.
