Hi,

I recently updated to 8.3 (and with it Ceph to 18.2.4).

My hardware: Intel N100, a 1 TB NVMe (local storage) and a SATA Samsung 870 QVO for Ceph.

I also messed around with microcode updates (which I'm currently trying to find out how to revert) and the CPU power-saving governor (which I have already undone), both from the tteck (RIP) helper scripts.

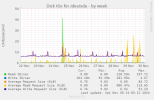

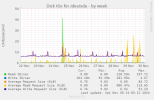

Now I've noticed high IO wait within the guests (around 800-1000 ms); before it was about 40 ms.

I found it because everything was lagging, so I started to investigate.

When this started, I also found that on one of my 3 Ceph nodes the IO wait of sdb (the Samsung SSD) had increased from about 5 ms to 50 ms and the utilisation of the SSD had gone to 100%. About 30 hours later the two other nodes joined the club.
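For anyone who wants to reproduce the per-disk numbers, here is a minimal sketch of how await and utilisation can be derived from two snapshots of `/proc/diskstats`. It assumes a Linux host and that the Ceph SSD is `sdb` as on my nodes; the function names (`parse_diskstats`, `compute_metrics`, `sample`) are made up for illustration and not part of any tool.

```python
import time
from typing import Dict


def parse_diskstats(text: str, device: str) -> Dict[str, int]:
    """Pull the counters needed for await/utilisation out of /proc/diskstats text."""
    for line in text.splitlines():
        fields = line.split()
        # Fields: major, minor, name, then the per-device counters.
        if len(fields) >= 14 and fields[2] == device:
            return {
                "reads": int(fields[3]),      # reads completed
                "read_ms": int(fields[6]),    # ms spent reading
                "writes": int(fields[7]),     # writes completed
                "write_ms": int(fields[10]),  # ms spent writing
                "io_ms": int(fields[12]),     # ms the device was busy
            }
    raise ValueError(f"device {device!r} not found")


def compute_metrics(before: Dict[str, int], after: Dict[str, int],
                    interval_s: float) -> Dict[str, float]:
    """Average wait per IO (ms) and busy percentage between two snapshots."""
    ios = (after["reads"] - before["reads"]) + (after["writes"] - before["writes"])
    wait_ms = (after["read_ms"] - before["read_ms"]) + (after["write_ms"] - before["write_ms"])
    await_ms = wait_ms / ios if ios else 0.0
    util_pct = 100.0 * (after["io_ms"] - before["io_ms"]) / (interval_s * 1000.0)
    return {"await_ms": await_ms, "util_pct": min(util_pct, 100.0)}


def sample(device: str = "sdb", interval_s: float = 5.0) -> Dict[str, float]:
    """Take two snapshots of /proc/diskstats and compute the metrics in between."""
    with open("/proc/diskstats") as f:
        before = parse_diskstats(f.read(), device)
    time.sleep(interval_s)
    with open("/proc/diskstats") as f:
        after = parse_diskstats(f.read(), device)
    return compute_metrics(before, after, interval_s)
```

Running `sample("sdb")` on each node at the same time should show whether all three SSDs are saturated in the same way or whether one node stands out.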

Some people on Reddit have already mentioned that consumer SSDs are not the best idea, but anyway, this is how I have it here at home.

At first I thought it had to do with the microcode update and the power-saving stuff, but the power-saving change has been undone and it's no better. The microcode is not yet reverted, as I don't know how to do it. Timing-wise it could fit for the one node, but I made these changes on all nodes within a short time, not with the 30-hour delay after which the two other nodes joined the club.

So now the question is: how can I hunt this down? I'm new to Proxmox and Ceph and I want to learn more, but I'm stuck here right now.

All hints are welcome!

Regards
