My previously rock solid system has become nearly unusable and I'm not even remotely sure where to look. I understand my Proxmox version is in need of upgrading, but unfortunately there are some issues with me doing so at this time. Please help?!

Last night around 2300 hours the system became completely unresponsive. After pressing the reset button, I managed to log in and found that something had filled up the root (/) partition. I was able to clear it out and get my CTs/VMs started again. Ever since then, the system continues to show >70% I/O wait and becomes unresponsive. Checking top along with iotop, nothing shows any actual load on the disks, yet the I/O wait remains very high and the system becomes unresponsive for long periods of time. For example, running "pct list" takes minutes to display anything.

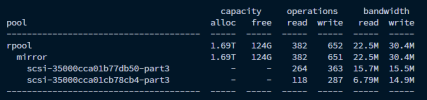

Proxmox is installed bare metal on a ZFS mirror vdev of 2x HGST HUS72302CLAR2000 7200 rpm 2 TB disks. A ZFS scrub hasn't found any issues with the disks, nor has smartctl (as far as I can decipher it, anyway). Disk1 and Disk2 smartctl details.

As another test, I shut down all CTs but one and all VMs but two to see if anything changed, and it did not. The three I left running are necessary for my network/internet functionality and have very little overhead. With nothing running but those three, I still see this:

Once again, iotop/htop/top show nothing accessing the disks. Running 'pveversion -v' at the console took almost 45 seconds to display its output.

```
root@pve2 ~# pveversion -v
proxmox-ve: 6.4-1 (running kernel: 5.4.203-1-pve)
pve-manager: 6.4-15 (running version: 6.4-15/af7986e6)
pve-kernel-5.4: 6.4-20
pve-kernel-helper: 6.4-20
pve-kernel-5.3: 6.1-6
pve-kernel-5.4.203-1-pve: 5.4.203-1
pve-kernel-5.4.195-1-pve: 5.4.195-1
pve-kernel-5.4.140-1-pve: 5.4.140-1
pve-kernel-5.3.18-3-pve: 5.3.18-3
pve-kernel-5.3.10-1-pve: 5.3.10-1
ceph-fuse: 12.2.11+dfsg1-2.1+deb10u1
corosync: 3.1.5-pve2~bpo10+1
criu: 3.11-3
glusterfs-client: 5.5-3
ifupdown: residual config
ifupdown2: 3.0.0-1+pve4~bpo10
ksm-control-daemon: 1.3-1
libjs-extjs: 6.0.1-10
libknet1: 1.22-pve2~bpo10+1
libproxmox-acme-perl: 1.1.0
libproxmox-backup-qemu0: 1.1.0-1
libpve-access-control: 6.4-3
libpve-apiclient-perl: 3.1-3
libpve-common-perl: 6.4-5
libpve-guest-common-perl: 3.1-5
libpve-http-server-perl: 3.2-5
libpve-storage-perl: 6.4-1
libqb0: 1.0.5-1
libspice-server1: 0.14.2-4~pve6+1
lvm2: 2.03.02-pve4
lxc-pve: 4.0.6-2
lxcfs: 4.0.6-pve1
novnc-pve: 1.1.0-1
proxmox-backup-client: 1.1.14-1
proxmox-mini-journalreader: 1.1-1
proxmox-widget-toolkit: 2.6-2
pve-cluster: 6.4-1
pve-container: 3.3-6
pve-docs: 6.4-2
pve-edk2-firmware: 2.20200531-1
pve-firewall: 4.1-4
pve-firmware: 3.3-2
pve-ha-manager: 3.1-1
pve-i18n: 2.3-1
pve-qemu-kvm: 5.2.0-8
pve-xtermjs: 4.7.0-3
qemu-server: 6.4-2
smartmontools: 7.2-pve2
spiceterm: 3.1-1
vncterm: 1.6-2
zfsutils-linux: 2.0.7-pve1
```