Hi all,

I set up an LXC with a subvolume but have since switched to using a bind mount.

The bind mount works perfectly and everything is running as expected. I removed the subvol configs, but for some reason, every time I restart the LXC, this empty folder keeps getting re-created.

Is there a setting in the LXC that causes this folder to be re-created at boot?

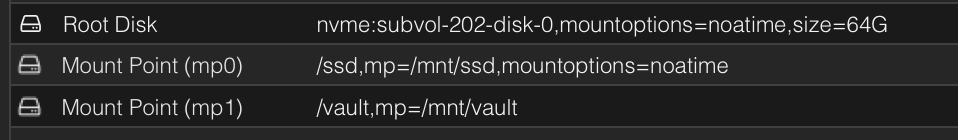

```
root@pve-namek:/vault# ls
media  subvol-201-disk-0
```

Contents of `201.conf` (`/etc/pve/lxc/201.conf`):

```
mp0: /ssd,mp=/mnt/ssd,mountoptions=noatime
mp1: /vault,mp=/mnt/vault
rootfs: nvme:subvol-201-disk-0,mountoptions=noatime,replicate=0,size=64G
```
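For anyone comparing their own config: below is a minimal sketch that reads the current (non-snapshot) part of an LXC config and labels each `rootfs`/`mpN` entry as a host bind mount or a storage-backed volume. The helper name `classify_mount_entries` is hypothetical, not a Proxmox tool, and it assumes that a source starting with `/` is a host path (bind mount) while a `storage:volume` source is a storage volume. It only classifies the config lines; it does not explain where the empty folder comes from.

```python
def classify_mount_entries(conf_text: str) -> dict[str, str]:
    """Label rootfs/mpN entries as 'bind mount' or 'storage volume'.

    Hypothetical helper. Assumes: host paths start with '/', storage
    volumes look like 'storage:volume', and snapshot sections begin
    with a '[name]' line after the current config.
    """
    result: dict[str, str] = {}
    for raw in conf_text.splitlines():
        line = raw.strip()
        if line.startswith("["):
            break  # snapshot sections follow the current config; ignore them
        if not line or line.startswith("#") or ":" not in line:
            continue  # skip blanks, description comments, malformed lines
        key, value = line.split(":", 1)
        key = key.strip()
        if key != "rootfs" and not (key.startswith("mp") and key[2:].isdigit()):
            continue  # only rootfs and mp0, mp1, ... are of interest here
        # The volume or host path is the first comma-separated field.
        source = value.strip().split(",", 1)[0]
        if source.startswith("volume="):
            source = source[len("volume="):]  # tolerate the explicit key form
        result[key] = "bind mount" if source.startswith("/") else "storage volume"
    return result


CONF = """\
mp0: /ssd,mp=/mnt/ssd,mountoptions=noatime
mp1: /vault,mp=/mnt/vault
rootfs: nvme:subvol-201-disk-0,mountoptions=noatime,replicate=0,size=64G
"""

if __name__ == "__main__":
    for key, kind in classify_mount_entries(CONF).items():
        print(f"{key}: {kind}")
```

Run against the config above, it reports `mp0` and `mp1` as bind mounts and `rootfs` as a storage volume, which matches the setup described here.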

The one on `nvme` is fine, since that's the container's rootfs (boot and so on), which I want there.

It's only the folder being created on `/vault` that I no longer want.

Looking again, I can see it being created on `/ssd` as well...