Hi all.

I have been struggling with my new cluster setup. This is my first attempt and I am having some issues.

I have 2 Proxmox servers and a small Intel box, also running Proxmox, used as the quorum node.

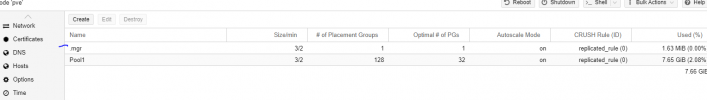

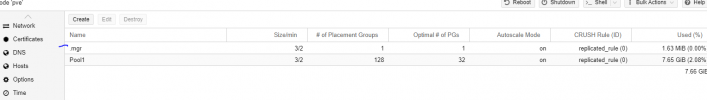

I set up the cluster nodes and quorum, then added the Ceph disks and pool. The pool shows all 3 disks online, although I do have a warning:

Degraded data redundancy: 1056/3168 objects degraded (33.333%), 129 pgs degraded, 129 pgs undersized
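As a sanity check on the numbers in that warning, here is a minimal sketch of the arithmetic. The figures are copied from the warning; the pool size of 3 is an assumption (it is not shown anywhere in this post), and the function name is made up for illustration. If the pool really does keep 3 copies, a degraded share of exactly one third would be consistent with one copy of every object having nowhere to be placed, but that is only one possible reading.

```python
# Figures copied from the Ceph health warning above.
degraded_objects = 1056
total_object_copies = 3168


def degraded_fraction(degraded: int, total: int) -> float:
    """Return the share of object copies that are currently degraded."""
    return degraded / total


fraction = degraded_fraction(degraded_objects, total_object_copies)
print(f"{fraction:.3%} of object copies are degraded")

# Assumption (not confirmed in the post): the pool keeps 3 copies per object.
assumed_pool_size = 3
missing_copies_per_object = round(fraction * assumed_pool_size)
print(f"roughly {missing_copies_per_object} of {assumed_pool_size} copies missing per object")
```

The actual pool size and where the copies are placed would need to be checked on the cluster itself before drawing any conclusion from this.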

The CTs will migrate if I select migrate.

All CTs were in the HA list and started.

I attempted to pull the plug on node 1. Nothing happened: the CTs did not fail over to the 2nd node. When I tried to restart node 1, they all went into a 'freeze' state.

I had to select ignore to restart them.


pvesm status:

    Pool1     rbd   active   193342076     4090444   189251632    2.12%
    local     dir   active   222683160    50444004   162575880   22.65%
    proxmox   cifs  active  3907000316  2542908876  1364091440   65.09%
    proxmox2  cifs  active  3907000316  2542908876  1364091440   65.09%

    root@pve:~# pvecm status
    Cluster information
    -------------------
    Name:             pve-cluster
    Config Version:   4
    Transport:        knet
    Secure auth:      on

    Quorum information
    ------------------
    Date:             Sun Nov 27 10:58:25 2022
    Quorum provider:  corosync_votequorum
    Nodes:            3
    Node ID:          0x00000001
    Ring ID:          1.d8
    Quorate:          Yes

    Votequorum information
    ----------------------
    Expected votes:   4
    Highest expected: 4
    Total votes:      4
    Quorum:           3
    Flags:            Quorate Qdevice

    Membership information
    ----------------------
        Nodeid      Votes    Qdevice Name
    0x00000001          1    A,V,NMW 192.168.1.218 (local)
    0x00000002          1    A,V,NMW 192.168.1.93
    0x00000003          1         NR 192.168.1.43
    0x00000000          1            Qdevice
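To make the vote counts in that output easier to reason about, here is a small sketch of the majority rule they appear to follow: with 4 expected votes, the threshold of 3 matches "more than half". The function name is hypothetical, and the scenario in the second half assumes that when node 1 is down every other voter, including the Qdevice, still casts its vote, which this post does not confirm.

```python
def majority_threshold(expected_votes: int) -> int:
    """Simple majority: strictly more than half of the expected votes."""
    return expected_votes // 2 + 1


expected_votes = 4  # "Expected votes: 4" in the pvecm output (3 nodes plus the Qdevice)
threshold = majority_threshold(expected_votes)

# Assumption: only node 1 is lost and all remaining voters keep voting.
votes_after_node1_down = expected_votes - 1
still_quorate = votes_after_node1_down >= threshold

print(f"threshold={threshold}, remaining votes={votes_after_node1_down}, quorate={still_quorate}")
```

This only covers the corosync cluster vote count. Whether the Ceph pool stays available with one node down is a separate matter and is not modelled here.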

Also, when node 1 went down, I saw that the Ceph pool went offline on the other nodes. Is this normal? I was assuming not.

What is this first pool named .mgr? It was there before I created my Pool1.


It's a learning curve so please be gentle! Let me know what other information I need to provide.

Many thanks