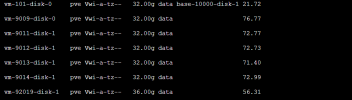

Yes, that's related to the case I was mentioning - moving them to a different volume will delink them, and can also make them fat (fully allocated rather than thin).

HOWEVER, in that case, having discard enabled on the disk and running `fstrim` inside the guest will thin them out again. Enabling the qemu-guest-agent can be helpful for periodically requesting `fstrim` from the host.
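A rough sketch of that trim step, in case it helps. It assumes a Linux guest with util-linux `fstrim` and a Proxmox VE host (the `qm` tool); the hypervisor isn't named above, so treat the `qm` lines as an assumption. VM ID `100` is a placeholder, and `DRY_RUN` is just a convenience variable in this script, not a feature of either tool.

```shell
#!/bin/sh
# Sketch only. Assumes a Proxmox VE host (the `qm` tool) and a Linux guest.
# VMID 100 is a placeholder. With DRY_RUN=1 (the default here) commands are
# printed instead of executed.
VMID="${VMID:-100}"
DRY_RUN="${DRY_RUN:-1}"

# Print the command in dry-run mode, otherwise execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Host side: turn on the guest agent option for the VM, then ask the agent
# running inside the guest to trim its filesystems.
host_trim() {
    run qm set "$VMID" --agent enabled=1
    run qm guest cmd "$VMID" fstrim
}

# Guest side: trim every mounted filesystem that supports discard.
guest_trim() {
    run fstrim --all --verbose
}
```

Run `guest_trim` inside the VM, or `host_trim` on the host, with `DRY_RUN=0` once the printed commands look right. The host-side route only works if qemu-guest-agent is installed and running in the guest, and either way the virtual disk needs discard enabled for the freed blocks to reach the storage.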

You can't relink them. But unless your base image is quite large, or the changes you make on top of it are very small (which is the ideal case for a linked clone, for sure), the discard and trim operations will still save you significant space.

If `discard` + `fstrim` doesn't make a difference, and you really need the linked clone savings, you could do some trickery: moving the template, creating a new clone from it, manually mounting both the old and new volumes, and copying the data over with `rsync -avhP /mnt/{old-vm-disk}/ /mnt/{new-vm-disk}/` (or doing so via ssh with both VMs booted).
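For the copy step, a hedged sketch of how I'd wrap that rsync call. The mount points are hypothetical stand-ins for the `{old-vm-disk}` and `{new-vm-disk}` placeholders above, and the extra flags (`-H`, `-A`, `-X`, `--numeric-ids`) are my additions to preserve hard links, ACLs, extended attributes, and raw UID/GID numbers; drop them if you don't need them.

```shell
#!/bin/sh
# Sketch only: copy the contents of a mounted old disk onto a mounted new disk.
# OLD and NEW are hypothetical mount points; adjust them to your layout.
# With DRY_RUN=1 (the default here) the command is printed, not executed.
OLD="${OLD:-/mnt/old-vm-disk}"
NEW="${NEW:-/mnt/new-vm-disk}"
DRY_RUN="${DRY_RUN:-1}"

copy_disk() {
    # -a archive, -v verbose, -h human-readable sizes,
    # -P show progress and keep partially transferred files
    # -H hard links, -A ACLs, -X extended attributes (beyond the original command)
    # --numeric-ids keeps UID/GID numbers instead of mapping them by name
    # The trailing slashes copy the contents of OLD into NEW.
    set -- rsync -avhP -HAX --numeric-ids "$OLD/" "$NEW/"
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        "$@"
    fi
}
```

Call `copy_disk` once to check the printed command, then again with `DRY_RUN=0` to do the real copy. Both volumes should be mounted and not in use by a running VM while this runs.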