Dear community,

I have about 20 years of experience with small enterprise-class VMware deployments using SAN storage. The first thing I had to learn about Proxmox is that it uses entirely different concepts for the design and use of storage, so I need some advice for my planning.

Observed differences from VMware:

#1 VMware uses its cluster-aware filesystem VMFS, so you can use any volume for any purpose.

#2 Usually you share volumes across all servers in the cluster and avoid local storage.

#3 Backup and replication of VMs are usually done with third-party tools (like Veeam or Nakivo).

#4 In most cases you use raw LUNs, provided by dedicated storage systems over iSCSI or Fibre Channel.

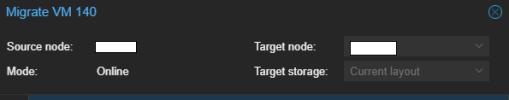

#5 You can either migrate the VM's compute to another host while leaving its storage in place (as it is usually shared), or migrate the VM to another datastore for space or performance reasons. In VMware this always works with the VM shut down, and in many cases with the VM running. In Proxmox it appears to be the other way round.

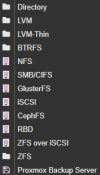

What I'd like to find out is which model, filesystem and technique are best to use when storage systems are already in place. My NAS devices can provide storage via NFS/SMB or iSCSI.

How should I plan where to store ISOs, backups and VMs? (We don't use containers right now.)

How can I asynchronously replicate VMs offsite for disaster recovery purposes? (Offsite meaning a different town and datacenter.)

When should I "publish" storage assigned to one node to the whole cluster?

In the end, I plan to run VMs on 2 or 3 nodes, with one storage device for production and another one for backups and maybe replicas.

Is there any guide or manual that recommends when to use Ceph, Gluster, ZFS or LVM, and what hardware components are needed to build such an environment?

For my taste, the storage section of the Proxmox manual raises more questions than it answers as a first step.

Thanks in advance for helping me out of this confusion.
