Thanks, I created a vmbr2 in the same IP range and everything went smoothly.

Then I tried rebooting the host, and that broke everything.

I have to run the "/sbin/zpool import -N 'rpool'" command on boot. I've tried:

- adding rootdelay=10 to the kernel command line in GRUB

- setting ZFS_INITRD_PRE_MOUNTROOT_SLEEP='5' and ZFS_INITRD_POST_MODPROBE_SLEEP='5', then running update-initramfs -u

- apt-get install --reinstall zfsutils-linux

- setting "ZPOOL_IMPORT_PATH" in /etc/default/zfs to "/dev/disk/by-vdev:/dev/disk/by-id" and regenerating the initramfs with "update-initramfs -u" to force the import to use disk IDs

- booting the previous kernel
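For reference, the configuration-based attempts above can be sketched roughly as follows. This is a minimal sketch of what was tried, not a confirmed fix. The helper name `set_default` is hypothetical, and the sketch assumes that all three variables live in /etc/default/zfs and that GNU sed is available (as on a stock Debian-based host). The commands that touch the real system are left commented out.

```shell
#!/bin/sh
# Hypothetical helper: set KEY='VALUE' in a shell-style defaults file.
# An existing assignment (commented out or not) is replaced in place;
# otherwise the assignment is appended. Values containing '|' or '&'
# are not handled by this sketch.
set_default() {
    file=$1 key=$2 value=$3
    if grep -Eq "^#?[[:space:]]*${key}=" "$file"; then
        sed -i -E "s|^#?[[:space:]]*${key}=.*|${key}='${value}'|" "$file"
    else
        printf "%s='%s'\n" "$key" "$value" >> "$file"
    fi
}

# Example usage (as root), commented out so that sourcing this file changes nothing:
# set_default /etc/default/zfs ZFS_INITRD_PRE_MOUNTROOT_SLEEP 5
# set_default /etc/default/zfs ZFS_INITRD_POST_MODPROBE_SLEEP 5
# set_default /etc/default/zfs ZPOOL_IMPORT_PATH /dev/disk/by-vdev:/dev/disk/by-id
# update-initramfs -u   # rebuild the initramfs so the settings apply at boot

# The rootdelay attempt assumes GRUB is the bootloader in use:
# add rootdelay=10 to GRUB_CMDLINE_LINUX_DEFAULT in /etc/default/grub, then:
# update-grub
```

Running the helper against a copy of the file first makes it easy to diff the result before touching the real configuration.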

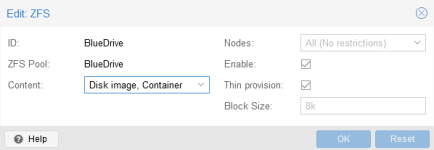

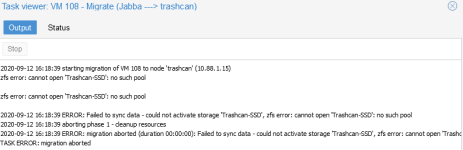

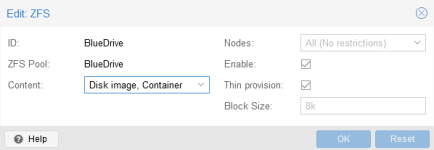

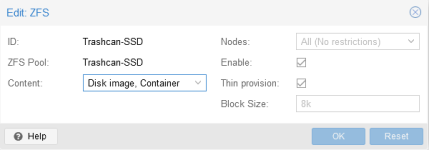

The SSD where my VMs are stored (which is called "BlueDrive") isn't available anymore.

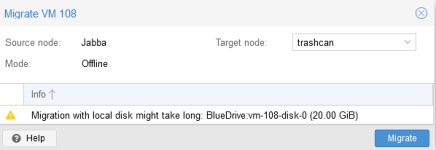

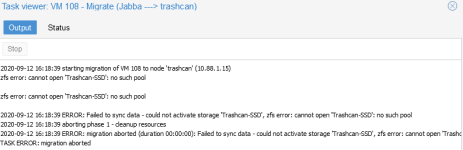

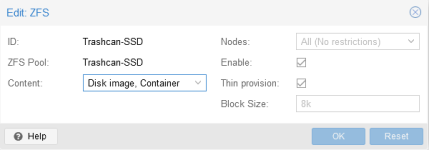

Above are some screenshots of my configuration.

Here is the output of "pveversion -v":

Code:

proxmox-ve: 6.2-1 (running kernel: 5.4.60-1-pve)

pve-manager: 6.2-11 (running version: 6.2-11/22fb4983)

pve-kernel-5.4: 6.2-6

pve-kernel-helper: 6.2-6

pve-kernel-5.3: 6.1-6

pve-kernel-5.4.60-1-pve: 5.4.60-2

pve-kernel-5.4.44-2-pve: 5.4.44-2

pve-kernel-4.15: 5.4-6

pve-kernel-5.3.18-3-pve: 5.3.18-3

pve-kernel-4.15.18-18-pve: 4.15.18-44

pve-kernel-4.15.18-12-pve: 4.15.18-36

ceph-fuse: 12.2.11+dfsg1-2.1+b1

corosync: 3.0.4-pve1

criu: 3.11-3

glusterfs-client: 5.5-3

ifupdown: residual config

ifupdown2: 3.0.0-1+pve2

ksm-control-daemon: 1.3-1

libjs-extjs: 6.0.1-10

libknet1: 1.16-pve1

libproxmox-acme-perl: 1.0.5

libpve-access-control: 6.1-2

libpve-apiclient-perl: 3.0-3

libpve-common-perl: 6.2-2

libpve-guest-common-perl: 3.1-3

libpve-http-server-perl: 3.0-6

libpve-storage-perl: 6.2-6

libqb0: 1.0.5-1

libspice-server1: 0.14.2-4~pve6+1

lvm2: 2.03.02-pve4

lxc-pve: 4.0.3-1

lxcfs: 4.0.3-pve3

novnc-pve: 1.1.0-1

proxmox-mini-journalreader: 1.1-1

proxmox-widget-toolkit: 2.2-12

pve-cluster: 6.1-8

pve-container: 3.2-1

pve-docs: 6.2-5

pve-edk2-firmware: 2.20200531-1

pve-firewall: 4.1-2

pve-firmware: 3.1-3

pve-ha-manager: 3.1-1

pve-i18n: 2.2-1

pve-qemu-kvm: 5.1.0-1

pve-xtermjs: 4.7.0-2

qemu-server: 6.2-14

smartmontools: 7.1-pve2

spiceterm: 3.1-1

vncterm: 1.6-2

zfsutils-linux: 0.8.4-pve1

I've already spent a few hours on this, so it might be time to ask for help...

Thanks