trying to start a vm with a pcie passthrough device, specifically an nvidia vGPU device, but starting the vm from the gui times out. when i try to start it from the shell with qm start instead, i get the error shown below.

this seems like a pve issue. troubleshooting steps i tried:

- shut down an already running vGPU vm and started it again with qm start: same error.
- started a previously running vGPU vm from the gui: the start works.

there are already 8 vms with the 2B vGPU profile running on this rtx a5000, and the nvidia docs say it can run 12. the host itself still has about 100GB of free ram for this vm, yet it apparently has a problem allocating the 16GB of memory the vm needs. at this point i'm honestly not sure whether this is an nvidia driver issue or a pve issue.
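to double-check the "100GB free" figure against the 16GB this vm needs, here's a minimal sketch that reads the kernel's MemAvailable value. `mem_fits` and its optional file argument are hypothetical names i made up for illustration; on the host it just reads /proc/meminfo. MemAvailable is only the kernel's estimate, so passing this check doesn't guarantee the allocation at vm start will succeed.

```shell
# mem_fits <requested_GiB> [meminfo_file]
# Hypothetical helper: prints MemAvailable in whole GiB and returns 0 if the
# requested amount fits, 1 if it does not, 2 if MemAvailable can't be read.
# The meminfo_file argument defaults to /proc/meminfo (assumption: standard Linux host).
mem_fits() {
    local requested_gib=$1
    local meminfo=${2:-/proc/meminfo}
    local avail_kib avail_gib
    # /proc/meminfo reports MemAvailable in kB (KiB)
    avail_kib=$(awk '/^MemAvailable:/ {print $2}' "$meminfo")
    if [ -z "$avail_kib" ]; then
        echo "MemAvailable not found in $meminfo" >&2
        return 2
    fi
    # KiB -> GiB, rounded down
    avail_gib=$(( avail_kib / 1024 / 1024 ))
    echo "$avail_gib"
    # compare in KiB so no precision is lost
    [ $(( requested_gib * 1024 * 1024 )) -le "$avail_kib" ]
}
```

e.g. `mem_fits 16 && echo "16 GiB should fit"` on the host.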

Code:

qm start vmid --timeout 300

Code:

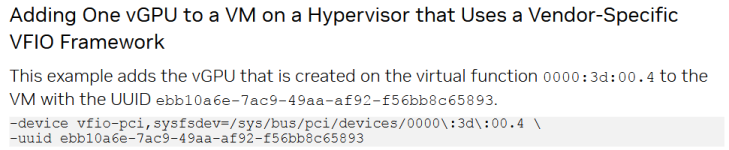

kvm: -device vfio-pci,sysfsdev=sys/bus/pci/devices/0000:16:01.3: vfio sys/bus/pci/devices/0000:16:01.3: no such host device: No such file or directory

start failed: QEMU exited with code 1
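the error itself says qemu couldn't find the sysfs node for 0000:16:01.3 ("no such host device"). a minimal sketch for checking whether that node actually exists right before running qm start, assuming the standard /sys/bus/pci/devices layout; `pci_dev_present` and its optional sysfs-root argument are hypothetical names for illustration only.

```shell
# pci_dev_present <pci_address> [sysfs_root]
# Hypothetical helper: returns 0 if <sysfs_root>/bus/pci/devices/<pci_address>
# exists, 1 otherwise. sysfs_root defaults to /sys (assumption: standard layout).
pci_dev_present() {
    local addr=$1
    local sysfs=${2:-/sys}
    local path="$sysfs/bus/pci/devices/$addr"
    if [ -e "$path" ]; then
        # device node exists, report it on stdout
        echo "present: $path"
        return 0
    fi
    # device node missing, report it on stderr
    echo "missing: $path" >&2
    return 1
}
```

e.g. `pci_dev_present 0000:16:01.3 && qm start vmid`, so the start is only attempted when the device node is there.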
