Hello,

I'd like to put my guest VMs in a different subnet from my Proxmox host.

I have the following setup:

192.168.4.1 (dedicated OpenWrt router) ---------- single Ethernet cable / vmbr0 ---------- 192.168.4.2 (Proxmox host)

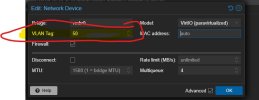

I've made vmbr0 VLAN-aware and tagged the traffic of VM 1 and VM 2:

192.168.10.1 <----------VLAN 1------------------------ Guest VM 1

192.168.20.1 <----------VLAN 2------------------------ Guest VM 2
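For reference, a VLAN-aware bridge on the Proxmox side is usually declared in `/etc/network/interfaces` along the lines of the sketch below. This is not a copy of my actual file: the physical NIC name `eno1`, the `/24` prefix length, and the `bridge-vids` range are assumptions for illustration. Only the two addresses come from the setup described above.

```
auto lo
iface lo inet loopback

# Physical NIC; "eno1" is a placeholder name
iface eno1 inet manual

auto vmbr0
iface vmbr0 inet static
    # Host address on the untagged network (prefix length assumed)
    address 192.168.4.2/24
    gateway 192.168.4.1
    bridge-ports eno1
    bridge-stp off
    bridge-fd 0
    # Let the bridge pass tagged frames from the guests
    bridge-vlan-aware yes
    # VLAN IDs allowed on the bridge (assumed range)
    bridge-vids 2-4094
```

Each guest's VLAN tag is then set on its virtual network device, and the router is expected to receive those frames tagged on the single cable.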

On the OpenWrt side, I've configured a VLAN on the port that connects to the Proxmox host.

I can see some traffic going through the VLAN interface, but the guest VMs cannot get an IP address via DHCP.

Is this the correct approach for putting guest VMs in a different subnet from the Proxmox host?

I'd like to manage as much of the networking as possible directly in OpenWrt.

Can you recommend a guide or any documentation that would help me understand how to achieve something like this?

Thanks for your help!
