I have a VM stuck in a hard-locked state. The VM had two disks on NAS storage and was set to migrate under HA. As a short-term fix, I moved one of the two disks to the local ZFS storage on the node, but I did NOT configure a replication job (an oversight). A power event caused the node to drop, and the VMs migrated. Now the VM can't start because it can't reach that disk, I can't migrate or replicate the disk, and when I try to migrate the VM back, it fails with the command output "no volumes specified".

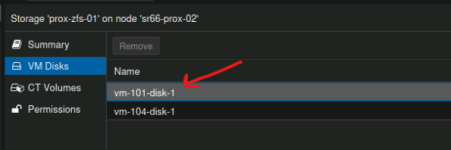

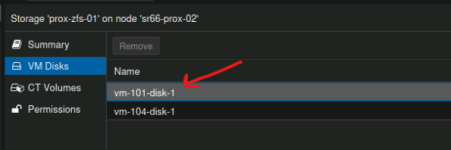

I either need to get the VM to migrate back to node 2 so the disk shows up again, or I need to replicate the disk from node 2 to node 1 with some sort of ZFS send, but I'm not sure of the best way to do that. I do have backups, but I would prefer not to fall back on them if I can recover the disk; I can still see the disk when I look at the ZFS LUN on node 2.
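To make the ZFS send option concrete, this is roughly what I have in mind, as a sketch only. The dataset name `rpool/data/vm-101-disk-0`, the snapshot name `recover`, and the use of the hostname `sr66-prox-01` as the SSH target are all assumptions I have not confirmed; the script only prints the commands unless `DRY_RUN=0` is set, and it would be run on node 2, where the disk still lives.

```shell
#!/bin/sh
# Sketch only: copy one zvol from the node that still holds it (node 2)
# to the node the VM now lives on (node 1). Every name below is an assumption.
SRC_DATASET="${SRC_DATASET:-rpool/data/vm-101-disk-0}"  # assumed zvol name on node 2
DST_DATASET="${DST_DATASET:-rpool/data/vm-101-disk-0}"  # assumed target name on node 1
DST_HOST="${DST_HOST:-root@sr66-prox-01}"               # assumed SSH target for node 1
SNAP="${SNAP:-recover}"                                 # hypothetical snapshot name
DRY_RUN="${DRY_RUN:-1}"                                 # 1 = print commands, do not run them

# Print the command in dry-run mode, otherwise execute it through sh.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        echo "$*"
    else
        sh -c "$*"
    fi
}

# 1. Confirm the zvol exists on this node.
run "zfs list -t volume $SRC_DATASET"

# 2. Snapshot it so there is a consistent point to send from.
run "zfs snapshot $SRC_DATASET@$SNAP"

# 3. Stream the snapshot to the other node over SSH.
run "zfs send $SRC_DATASET@$SNAP | ssh $DST_HOST zfs receive $DST_DATASET"
```

If that is the right direction, I assume I would then need something like `qm rescan --vmid 101` on node 1 so the VM config picks the volume up again, and the storage ID in the config would have to point at a pool that actually exists on node 1.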

Code:

root@sr66-prox-01:~# /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=sr66-prox-02' -o 'UserKnownHostsFile=/etc/pve/nodes/sr66-prox-02/ssh_known_hosts' -o 'GlobalKnownHostsFile=none' root@10.10.2.10 -- pvesr prepare-local-job 101-0 --last_sync 0

no volumes specified