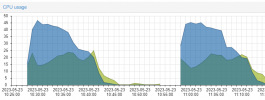

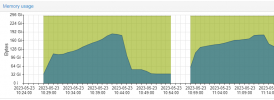

I upgraded to PVE 7.4-3 and enabled persistent L2ARC and IO threads. With the disk IO bottleneck gone, I could finally see meaningful CPU utilization. Now that L2ARC is persistent, I had to run every test twice to make sure the results were repeatable. All 10 VMs finished booting and benchmarking after about 5 minutes, so I averaged the node's CPU usage over the first 10 minutes. Tests 1 & 2 had ballooning enabled. Tests 3 & 4 had ballooning disabled. For Tests 5 & 6, I upgraded to the latest drivers from virtio-win-0.1.229.iso.

- Test 1 & 2 Average % CPU usage: 11.30%
  - Balloon Driver Date: 8/30/2021
  - Balloon Driver Version: 100.85.104.20800
- Test 3 & 4 Average % CPU usage: 10.23%
  - VM Memory -> Balloon=0
  - VirtIO Balloon Device disabled
  - BalloonService disabled
- Test 5 & 6 Average % CPU usage: 11.21%
  - Balloon Driver Date: 11/15/2022
  - Balloon Driver Version: 100.92.104.22900
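The "average over the first 10 minutes" step can be scripted. This is a minimal sketch, assuming the node's CPU samples have already been exported as plain text with one `epoch_seconds cpu_percent` pair per line (a hypothetical format; how the samples are collected is not covered here). The function name `avg_first_window` is also made up for illustration.

```bash
#!/usr/bin/env bash
# avg_first_window (hypothetical helper): average CPU % over the first N seconds
# of a sample stream.
# Input (ASSUMED format, one sample per line on stdin):
#   <epoch_seconds> <cpu_percent>
avg_first_window() {
  local window="${1:-600}"   # default window: first 10 minutes
  awk -v window="$window" '
    NF < 2 { next }                          # skip blank or malformed lines
    !started { start = $1; started = 1 }     # first timestamp marks t = 0
    $1 - start < window { sum += $2; n++ }   # keep samples inside the window
    END {
      if (n == 0) { print "no samples in window" > "/dev/stderr"; exit 1 }
      printf "%.2f\n", sum / n
    }'
}
```

Usage would look like `avg_first_window 600 < node_cpu.txt`, where `node_cpu.txt` is a placeholder name. Anchoring the window to the first sample, rather than to wall-clock time, keeps runs comparable even if they start at different times.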

Later, I discovered that the `qm guest exec` command (which goes through the QEMU guest agent) can toggle the balloon driver on and off in real time, with no restart required! I disabled both the VirtIO Balloon Device and the "BalloonService" Windows service to be safe, but disabling either one causes the VM's memory usage to spike.

Disable Ballooning on VMID 123:

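```bash
# Disable the VirtIO balloon PCI device (instance ID as seen in this guest; yours may differ)
qm guest exec 123 "pnputil.exe" "/disable-device" "PCI\VEN_1AF4&DEV_1002&SUBSYS_00051AF4&REV_00\3&267A616A&1&18"
# Stop the balloon service inside the Windows guest
qm guest exec 123 "net" "stop" "balloonservice"
```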

Enable Ballooning on VMID 123:

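```bash
# Re-enable the VirtIO balloon PCI device (instance ID as seen in this guest; yours may differ)
qm guest exec 123 "pnputil.exe" "/enable-device" "PCI\VEN_1AF4&DEV_1002&SUBSYS_00051AF4&REV_00\3&267A616A&1&18"
# Start the balloon service inside the Windows guest again
qm guest exec 123 "net" "start" "balloonservice"
```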
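If you toggle ballooning often or across several VMs, the two command pairs for VMID 123 can be wrapped in one helper. This is a minimal sketch: `balloon_toggle` is a hypothetical name, and it assumes you pass in the device instance ID yourself, since that ID may differ between guests (in the guest, Device Manager shows it under the device's Properties, Details tab, "Device instance path").

```bash
#!/usr/bin/env bash
# balloon_toggle (hypothetical helper): enable or disable Windows ballooning in a
# running VM via the QEMU guest agent, using the same pnputil and net commands
# as the manual steps for VMID 123.
# ASSUMPTION: the caller supplies the balloon device's instance ID for that guest.
balloon_toggle() {
  local vmid="$1" state="$2" instance_id="$3"
  case "$state" in
    on)
      qm guest exec "$vmid" "pnputil.exe" "/enable-device" "$instance_id"
      qm guest exec "$vmid" "net" "start" "balloonservice"
      ;;
    off)
      qm guest exec "$vmid" "pnputil.exe" "/disable-device" "$instance_id"
      qm guest exec "$vmid" "net" "stop" "balloonservice"
      ;;
    *)
      echo "usage: balloon_toggle <vmid> on|off <device-instance-id>" >&2
      return 2
      ;;
  esac
}
```

For example, `balloon_toggle 123 off 'PCI\VEN_1AF4&DEV_1002&SUBSYS_00051AF4&REV_00\3&267A616A&1&18'`. Single quotes keep the backslashes and ampersands in the instance ID literal.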

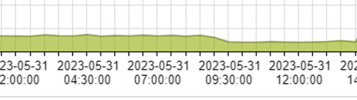

Next, I looked at individual VM CPU usage while idle. On each of 4 VMs, I left the balloon driver on for 5 hours and off for 5 hours.

With the balloon device enabled, average idle CPU usage increased by 1.12%.
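The on/off comparison comes down to averaging the samples for each state and subtracting. This is a minimal sketch, assuming CPU samples were logged as `state cpu_percent` lines, where `state` is `on` or `off` (a hypothetical log format, not something Proxmox produces directly), and that samples from all VMs are simply pooled. The function name `balloon_delta` is made up for illustration.

```bash
#!/usr/bin/env bash
# balloon_delta (hypothetical helper): average CPU % with ballooning on and off,
# then report the difference.
# Input (ASSUMED format, one sample per line on stdin):
#   on  <cpu_percent>
#   off <cpu_percent>
balloon_delta() {
  awk '
    $1 == "on"  { on_sum  += $2; on_n++ }
    $1 == "off" { off_sum += $2; off_n++ }
    END {
      if (on_n == 0 || off_n == 0) {
        print "need samples for both states" > "/dev/stderr"; exit 1
      }
      on_avg  = on_sum / on_n
      off_avg = off_sum / off_n
      # Difference in percentage points (on minus off)
      printf "on=%.2f off=%.2f delta=%.2f\n", on_avg, off_avg, on_avg - off_avg
    }'
}
```

Pooling treats every sample equally, so a VM that logged more samples weighs more. Averaging per VM first and then across VMs would be an equally reasonable choice.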

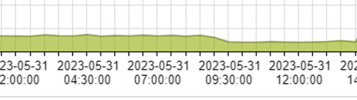

Finally, I looked at VM CPU usage under load. I used PowerShell to max out a single vCPU while repeatedly allocating and releasing about 1.5 GB of RAM. This produced a fairly consistent 61% to 62% load. This test was smaller: it ran on only 2 VMs over 7 hours (3.5 hours on, 3.5 hours off).

With the balloon device enabled, average CPU usage under load increased by 0.35%.

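Here's the PowerShell one-liner that I used:

```powershell
# Double a string until it holds 512Mi chars (~1 GiB as UTF-16), release it, and repeat forever
do{$str = "01234567";while(($str.length/1024) -lt (512*1024)){$str+=$str};Clear-Variable -Name "str"}while($true)
```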

My conclusion is that the VirtIO balloon device has a negligible performance impact (about 1% CPU utilization on a 2.3 GHz Xeon), while letting me save 2-3 GB of RAM per VM. Caveats: I only tested Windows 10 VMs, not Windows Server, and I'm not using PCIe passthrough, which is known to conflict with the balloon device.