Snapshot causes VM to become unresponsive.

- Thread starter nivek1612



Hi,

I have the same issue, but not with snapshots: it happens when doing heavy I/O work.

Every night I dump an external MariaDB database (around 11 GB) to this VM, then make a Home Assistant internal backup, and finally copy this 3.5 GB file to a Samba share.

This was no problem in the past, but for a few weeks now the VM has been becoming unresponsive during these operations, and I need to restart it manually.


Hi

@fiona, all my VMs are using VirtIO SCSI single and IO Thread by default. Yesterday morning I had another Debian 12 VM become unresponsive (without a snapshot this time), freezing at 1 AM for no apparent reason. I had a partial local console connection but no prompt, and had to force a reboot to regain control over the VM. If I take a snapshot with memory, the same problem may occur.

Regards,

It seems like you are using the (default in the backend)Hi

@fiona , all my VMs are usingVirtIO SCSI singleandIO Threadby default, yesterday morning i had another Debian12 VM unresponsive (without snapshot this time) freezing at 01 Am for no apparent reason, i had a partial local console connection but no prompt, had too reboot (force reboot) to regain control over the VM. If i snapshot with Memory the same problem may occur.

Regards,

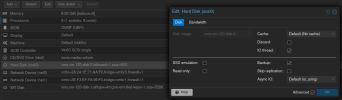

View attachment 76667View attachment 76668

kvm64 Processor/CPU type, which is not recommended anymore nowadays. Can you try switching to x86-64-v2-AES or something close to your physical model: https://pve.proxmox.com/pve-docs/chapter-qm.html#_cpu_typeWould also be interesting to know, what is your physical CPU model? Do you have latest microcode installed: https://pve.proxmox.com/pve-docs/chapter-sysadmin.html#sysadmin_firmware_cpu ?
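The two checks suggested above can be sketched as follows, assuming a Bash shell on the PVE host. The function names `show_cpu_info` and `set_cpu_type` are hypothetical helpers, not part of PVE. `qm set <vmid> --cpu <type>` is the standard CLI for changing the CPU type. As the posters in this thread did, the VM has to be fully stopped and started again before the new type applies. By default the helper only prints the command (`DRY_RUN=1`), which is a safety choice for production VMs.

```bash
#!/bin/bash
# Hypothetical helpers (not part of PVE) for the CPU-type advice above.

# Print the host CPU model and the loaded microcode revision as reported
# by the kernel in /proc/cpuinfo (first matching line of each only).
show_cpu_info() {
  awk -F': ' '/^model name/ && !m { print "model:     " $2; m = 1 }
              /^microcode/  && !u { print "microcode: " $2; u = 1 }' /proc/cpuinfo
}

# Change the guest CPU type of a VM (default: x86-64-v2-AES).
# Dry run by default: only prints the qm command; DRY_RUN=0 runs it.
set_cpu_type() {
  local vmid="$1" cputype="${2:-x86-64-v2-AES}"
  local cmd=(qm set "$vmid" --cpu "$cputype")
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `set_cpu_type 111` prints `qm set 111 --cpu x86-64-v2-AES`. `DRY_RUN=0 set_cpu_type 111 host` applies the change, followed by `qm shutdown 111 && qm start 111`. On a Debian-based PVE 8 host, the microcode update described in the linked docs comes from the `intel-microcode` package in the non-free-firmware component.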

Hi,


My physical CPUs are 2x Intel(R) Xeon(R) Silver 4316 CPU @ 2.30GHz

I don't have the latest microcode installed manually.

I tried x86-64-v2-AES on my production VM (after a shutdown). After 2 snapshots with RAM => same problem: the VM began to stall, with this dmesg/journalctl warning:

oct. 23 15:33:10 xxxxx kernel: clocksource: Long readout interval, skipping watchdog check: cs_nsec: 1000034688 wd_nsec: 1000034116

Then I halted the VM, changed the CPU type to host, and booted it again, because this VM is in production.

I made the same change on my second Proxmox server (same cluster, same hardware configuration) and did some more tests on another Debian 12 VM (2 CPUs, 1 GB RAM).

I took 2 snapshots without issue. Then I decided to simulate CPU and I/O load on this VM, using stress and dd like this in a screen session:

simple-stress.sh

Bash:

#!/bin/bash
# Start 1 CPU stress worker for 1 second, pause 0.5 s, repeat forever
while true; do
    stress -c 1 --timeout 1
    sleep 0.5
done

and a dd random write in a loop:

Bash:

# Print a timestamp, then write 1000 MiB of random data to /tmp, forever
while true; do date; dd if=/dev/random of=/tmp/FILE-BIG-1G bs=1M count=1000; done

The dd result is OK:

1048576000 octets (1,0 GB, 1000 MiB) copiés, 3,3899 s, 309 MB/s

After that, I used top and btop to watch the processes.

Here are the results of a second run (the first one gave nearly the same results), in which I changed the guest CPU type from kvm64 to host.

First snapshot under stress => failure:

saving VM state and RAM using storage 'vms'
4.02 MiB in 0s
1.12 GiB in 1s
1.49 GiB in 2s
1.80 GiB in 3s
2.05 GiB in 4s
2.36 GiB in 5s
snapshot create failed: starting cleanup
TASK ERROR: unable to save VM state and RAM - qemu_savevm_state_complete_precopy error -5

dd is not so good after that:

1048576000 octets (1,0 GB, 1000 MiB) copiés, 4,84992 s, 216 MB/s

The next snapshot is OK, but dd performance is down:

saving VM state and RAM using storage 'vms'
1.51 MiB in 0s
882.54 MiB in 1s
1008.48 MiB in 2s
1.22 GiB in 3s
1.39 GiB in 5s
1.56 GiB in 6s
1.69 GiB in 7s
1.84 GiB in 8s
1.95 GiB in 9s
2.10 GiB in 10s
2.18 GiB in 11s
2.19 GiB in 12s
completed saving the VM state in 13s, saved 2.44 GiB
snapshotting 'drive-scsi0' (vms:vm-111-disk-0)
snapshotting 'drive-efidisk0' (vms:vm-111-disk-1)
TASK OK

dd =>

1048576000 octets (1,0 GB, 1000 MiB) copiés, 11,4522 s, 91,6 MB/s

I continued taking snapshots until stalling/saturation; each time, dd throughput went down further:

1048576000 octets (1,0 GB, 1000 MiB) copiés, 17,3274 s, 60,5 MB/s
1048576000 octets (1,0 GB, 1000 MiB) copiés, 35,1212 s, 29,9 MB/s
1048576000 octets (1,0 GB, 1000 MiB) copiés, 253,325 s, 4,1 MB/s

dmesg/journalctl message:

oct. 23 17:32:15 debian12 kernel: clocksource: Long readout interval, skipping watchdog check: cs_nsec: 1076691946 wd_nsec: 1076691530

Time to reboot the VM...

PS: Sorry for my English, it's not my native language.
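Tracking the throughput drop described above (309 MB/s down to 4,1 MB/s across successive snapshots) is easier if each dd run logs only a timestamp and a rate. Here is a minimal sketch, assuming Bash and GNU dd. `dd_rate` and `dd_bench_loop` are hypothetical helper names. `iflag=fullblock` is a GNU dd option added here so that short reads from /dev/random don't shrink a run below 1000 MiB.

```bash
#!/bin/bash
# Hypothetical helpers for logging dd throughput across snapshots.

# Extract the throughput (the last ", "-separated field) from a dd summary
# line; works for both the French-locale output above and English output.
dd_rate() {
  awk -F', ' '{ print $NF }' <<<"$1"
}

# Variant of the dd loop above that prints one "timestamp rate" line per run.
# dd writes its report to stderr, hence 2>&1; tail keeps only the summary line.
dd_bench_loop() {
  local target="${1:-/tmp/FILE-BIG-1G}" summary
  while true; do
    summary=$(dd if=/dev/random of="$target" bs=1M count=1000 iflag=fullblock 2>&1 | tail -n 1)
    echo "$(date '+%F %T') $(dd_rate "$summary")"
  done
}
```

Running `dd_bench_loop` inside screen while taking snapshots gives one short line per run, which can be lined up against the snapshot task times.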

Hi,

@derjoerg, are you using the IO Thread setting for the VM disks? If not, you might want to turn it on and see if that helps. Turning it on also requires selecting the VirtIO SCSI single controller.

It might also be worth a try for other people in this thread.
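The controller and disk change suggested above can be made from the CLI with `qm set`. This is a minimal sketch, assuming Bash. `enable_iothread` is a hypothetical helper, and the volume ID `vms:vm-111-disk-0` is taken from the task log earlier in this thread. The disk is re-specified with its existing volume ID plus `iothread=1`. Any other options it already has (see `qm config <vmid>`) likely need to be repeated in the same string, or they may be dropped. The change takes effect after a full stop/start of the VM.

```bash
#!/bin/bash
# Hypothetical helper: switch a VM to the VirtIO SCSI single controller and
# enable IO Thread on one SCSI disk. Dry run by default; DRY_RUN=0 applies it.
enable_iothread() {
  local vmid="$1" disk="$2" volume="$3"
  local cmd=(qm set "$vmid" --scsihw virtio-scsi-single "--$disk" "$volume,iothread=1")
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

For example, `enable_iothread 111 scsi0 vms:vm-111-disk-0` prints the command for review before running it with `DRY_RUN=0`.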

Hi @fiona,

I made the modifications as requested, but as soon as the VM-internal backup job runs (high network load and high I/O), the VM becomes unresponsive.

So, we've been dealing with this for 3-4 months now. I have half a dozen single-node PVE instances on ZFS with this issue. Every one of them uses the no-subscription repository. Does this issue also affect systems on the subscription repository? Is there really no fix?

Some of the affected systems were installed as PVE 6 and upgraded in place (6 to 7, then 7 to 8) to the current version, with VirtIO drivers and Q35 machine versions also upgraded to current. I also have servers that were installed as PVE 8 on ZFS with UEFI AND SECURE BOOT just last month that do this. The VMs range from Windows Server 2016-2022 and Ubuntu 20.04 to Oracle Linux 9.

The weirdest part: as an MSP builder who prefers Proxmox, I have my own cluster at home, running on some very old gaming hardware: an i7-2600K with local ZFS storage and ZFS over iSCSI to TrueNAS Scale (i5-2570K), clustered with an i7-9700K using local ZFS on a single NVMe disk and no ZFS over iSCSI access. There, snapshots of running VMs, Linux or Windows, DO NOT CRASH VMs. I've tried as hard as I can to replicate the issue in the lab, be it with old OSes or old VirtIO driver/Q35 versions, and can't replicate it on either node.

The way I see it, the issue has to be related to the hypervisor hardware: the ancient Intel K-series hardware running the same software doesn't have the issue, while a wide variety of Xeon chips with ECC RAM do.


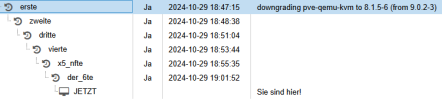

Unfortunately, I still wasn't able to reproduce the issue. Can anyone test whether the issue is a regression in QEMU by downgrading with the following command?

apt install pve-qemu-kvm=8.1.5-6

A VM needs to be shut down and started again to run with the newly installed QEMU binary. The Reboot button in the web interface also works; a reboot inside the VM is not enough!

I have the same problem, and downgrading pve-qemu-kvm to 8.1.5-6 (from 9.0.2-2) solved it. Going back to 9.0.2-2 brings the problem back. I noticed that 9.0.2-3 was available, so I tried it, but it didn't fix the issue. After installing all available updates, it's still broken.

Host CPU is i5-12400F.

In case it's significant: I noticed that the snapshot under 8.1.5-6 was a lot faster, taking 1 or 2 seconds to save the RAM, compared to about 20 seconds with 9.0.2-2/3.
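The downgrade test described above can be scripted as a few steps, assuming Bash and root on the PVE host. The helper names are hypothetical, and the package versions are the ones named in this thread. `apt-mark hold` is added here so that a routine upgrade doesn't silently reinstall the newer build during testing. Running `apt-mark unhold pve-qemu-kvm` reverses it. The restart helper does a full shutdown and start, because a reboot inside the guest keeps the old QEMU process.

```bash
#!/bin/bash
# Sketch of the QEMU downgrade test from this thread (run as root on the host).

# Print the installed pve-qemu-kvm package version.
qemu_pkg_version() {
  dpkg-query -W -f='${Version}\n' pve-qemu-kvm
}

# Install a specific pve-qemu-kvm version, then hold the package so that
# later upgrades don't replace it until "apt-mark unhold pve-qemu-kvm".
pin_qemu_version() {
  local version="$1"
  if [ -z "$version" ]; then
    echo "usage: pin_qemu_version <version>" >&2
    return 2
  fi
  apt install "pve-qemu-kvm=$version" && apt-mark hold pve-qemu-kvm
}

# Restart the VM's QEMU process so it runs the newly installed binary;
# a reboot from inside the guest is not enough.
restart_vm() {
  local vmid="$1"
  if [ -z "$vmid" ]; then
    echo "usage: restart_vm <vmid>" >&2
    return 2
  fi
  qm shutdown "$vmid" && qm start "$vmid"
}
```

A typical sequence would be `qemu_pkg_version`, `pin_qemu_version 8.1.5-6`, then `restart_vm <vmid>` for each VM under test.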


I am having the same issue. I took a snapshot with RAM, as I had already done several times before, and after the snapshot the VM was unresponsive. This is the output of qm status <ID> --verbose:

balloon: 4294967296

ballooninfo:

actual: 4294967296

free_mem: 363380736

last_update: 1727510890

major_page_faults: 11611

max_mem: 4294967296

mem_swapped_in: 0

mem_swapped_out: 8192

minor_page_faults: 1266619200

total_mem: 4105109504
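The balloon statistics above can be turned into a quick free-memory figure. This is a small sketch, assuming Bash and awk. `balloon_free` is a hypothetical helper that reads `qm status <vmid> --verbose` output on stdin and uses the `free_mem` and `total_mem` fields shown above. For the values in this post, it works out to roughly 347 MiB free out of about 3915 MiB, about 8.9%.

```bash
#!/bin/bash
# Hypothetical helper: read "qm status <vmid> --verbose" output on stdin and
# report the guest's free memory from the balloon driver statistics.
balloon_free() {
  awk '$1 == "free_mem:"  { free = $2 }
       $1 == "total_mem:" { total = $2 }
       END {
         if (total > 0)
           printf "free %.0f MiB of %.0f MiB (%.1f%%)\n",
                  free / 1048576, total / 1048576, free * 100 / total
       }'
}
```

Usage: `qm status <vmid> --verbose | balloon_free`.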

Just a wild stab in the dark here, but have you tried disabling memory ballooning while you troubleshoot the issue?
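Disabling ballooning, as suggested above, corresponds to setting the VM's `balloon` option to 0 with `qm set`. This is a minimal sketch, assuming Bash. `disable_ballooning` is a hypothetical helper that prints the command by default. The change likely only takes effect after a full stop/start of the VM.

```bash
#!/bin/bash
# Hypothetical helper: disable the balloon device for a VM (balloon: 0).
# Dry run by default: prints the qm command; DRY_RUN=0 applies it.
disable_ballooning() {
  local vmid="$1"
  local cmd=(qm set "$vmid" --balloon 0)
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```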

To gather debug information, you can run

apt install pve-qemu-kvm-dbgsym gdb

and then, once the VM is sluggish or stuck after the snapshot, run

Code:

gdb --batch --ex 't a a bt' -p $(cat /var/run/qemu-server/100.pid)

replacing 100 with the actual ID of the VM.

Hello again,

Today, after 3 snapshots (one every day at 8:33), the VM crashed.

I use cv4pve-autosnap in crontab. Does anyone know how to disable the RAM snapshot? (--help doesn't help.)

What's the downside of snapshotting without RAM?

Normally, the memory is not saved.

For help, run: cv4pve-autosnap snap --help
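On the downside question above: a snapshot without RAM contains only the disk contents. Rolling back to it boots the VM from those disks as if it had lost power, instead of resuming its running processes. Saving the RAM state is what a "with RAM" snapshot adds. With plain PVE tooling, `qm snapshot` saves no RAM unless `--vmstate 1` is given. Here is a minimal cron-friendly sketch, assuming Bash. `snap_name` and `disk_only_snapshot` are hypothetical helpers. Snapshot names must start with a letter, hence the "auto" prefix.

```bash
#!/bin/bash
# Sketch: daily disk-only snapshot as an alternative to a RAM snapshot.

# Snapshot name such as auto202410300833 (must start with a letter).
snap_name() {
  date '+auto%Y%m%d%H%M'
}

# Without --vmstate, qm snapshot saves the disks only, not the RAM.
# Dry run by default: prints the qm command; DRY_RUN=0 takes the snapshot.
disk_only_snapshot() {
  local vmid="$1"
  local cmd=(qm snapshot "$vmid" "$(snap_name)")
  if [ "${DRY_RUN:-1}" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}
```

Pruning old snapshots is not covered here. That part is what cv4pve-autosnap handles.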

There is a preliminary patch on the mailing list now that might solve the issue for snapshot with RAM: https://lore.proxmox.com/pve-devel/20241030095240.11452-1-f.ebner@proxmox.com/T/#u

It's not yet in any released package.


The GDB output can help to check whether it is the same issue.
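To collect the GDB output mentioned above for a given VM, the pid-file lookup and gdb call from this thread can be wrapped so the backtrace lands in a timestamped file. This is a minimal sketch, assuming Bash, root on the host, and the packages from `apt install pve-qemu-kvm-dbgsym gdb`. `qemu_pidfile` and `dump_qemu_backtrace` are hypothetical helper names. The gdb invocation and pid-file path are the ones given earlier in the thread.

```bash
#!/bin/bash
# Hypothetical wrapper around the gdb command from this thread.
# Requires: apt install pve-qemu-kvm-dbgsym gdb (run as root).

# Path of the PID file of a running VM's QEMU process.
qemu_pidfile() {
  echo "/var/run/qemu-server/$1.pid"
}

# Dump backtraces of all QEMU threads for a VM into a timestamped file.
dump_qemu_backtrace() {
  local vmid="$1" pidfile out
  pidfile=$(qemu_pidfile "$vmid")
  if [ ! -r "$pidfile" ]; then
    echo "no readable PID file for VM $vmid: $pidfile" >&2
    return 1
  fi
  out="qemu-$vmid-backtrace-$(date +%Y%m%d-%H%M%S).txt"
  gdb --batch --ex 't a a bt' -p "$(cat "$pidfile")" >"$out" 2>&1
  echo "backtrace written to $out"
}
```

Run `dump_qemu_backtrace <vmid>` while the VM is stuck, then attach the resulting file to a post.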

When will this patch be released?
