Hi,

This post is part solution and part question to the developers/community.

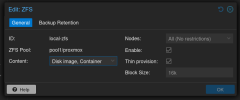

We have some small servers with ZFS. The setup is simple: two SSDs in a ZFS mirror for OS and VM data.

Code:

    zpool status
      pool: rpool
     state: ONLINE
      scan: scrub repaired 0B in 3h58m with 0 errors on Sun Feb 10 04:22:39 2019
    config:

        NAME        STATE     READ WRITE CKSUM
        rpool       ONLINE       0     0     0
          mirror-0  ONLINE       0     0     0
            sda2    ONLINE       0     0     0
            sdb2    ONLINE       0     0     0

The problem was really slow performance and high IO delay; the fix was to disable "sync".

Code:

    zfs set sync=disabled rpool

Now performance is almost 10x better. Since we have a UPS, this shouldn't be a problem. Nevertheless, my question is: why is "sync=standard" so slow? I would understand some performance boost, but 10x? Some more details:

Code:

    pveversion -v
    proxmox-ve: 5.2-2 (running kernel: 4.15.18-8-pve)
    pve-manager: 5.2-10 (running version: 5.2-10/6f892b40)
    pve-kernel-4.15: 5.2-11
    pve-kernel-4.15.18-8-pve: 4.15.18-28
    pve-kernel-4.15.18-7-pve: 4.15.18-27
    pve-kernel-4.15.17-1-pve: 4.15.17-9
    corosync: 2.4.2-pve5
    criu: 2.11.1-1~bpo90
    glusterfs-client: 3.8.8-1
    ksm-control-daemon: 1.2-2
    libjs-extjs: 6.0.1-2
    libpve-access-control: 5.0-8
    libpve-apiclient-perl: 2.0-5
    libpve-common-perl: 5.0-41
    libpve-guest-common-perl: 2.0-18
    libpve-http-server-perl: 2.0-11
    libpve-storage-perl: 5.0-30
    libqb0: 1.0.1-1
    lvm2: 2.02.168-pve6
    lxc-pve: 3.0.2+pve1-3
    lxcfs: 3.0.2-2
    novnc-pve: 1.0.0-2
    proxmox-widget-toolkit: 1.0-20
    pve-cluster: 5.0-30
    pve-container: 2.0-29
    pve-docs: 5.2-9
    pve-firewall: 3.0-14
    pve-firmware: 2.0-6
    pve-ha-manager: 2.0-5
    pve-i18n: 1.0-6
    pve-libspice-server1: 0.14.1-1
    pve-qemu-kvm: 2.12.1-1
    pve-xtermjs: 1.0-5
    qemu-server: 5.0-38
    smartmontools: 6.5+svn4324-1
    spiceterm: 3.0-5
    vncterm: 1.5-3
    zfsutils-linux: 0.7.11-pve2~bpo1

We use Micron 1100 and Crucial MX200 SSDs. Below are some performance tests with "dbench" in a VM using virtio. On the Proxmox host itself the picture is similar, just somewhat faster (1000 MB/s vs. 100 MB/s).

With sync=standard

Code:

    Throughput 40.5139 MB/sec 2 clients 2 procs max_latency=138.780 ms

With sync=disabled

Code:

    Throughput 408.308 MB/sec 2 clients 2 procs max_latency=30.781 ms
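To put a number on the "almost 10x", here is a small sketch that parses the two dbench summary lines above and computes the throughput and max-latency ratios. The helper names (`parse_summary`, `compare`) and the regular expression are my own and only assume the summary format shown in this post; this is not part of dbench.

```python
import re

# Summary lines copied verbatim from the two dbench runs in this post.
SYNC_STANDARD = "Throughput 40.5139 MB/sec 2 clients 2 procs max_latency=138.780 ms"
SYNC_DISABLED = "Throughput 408.308 MB/sec 2 clients 2 procs max_latency=30.781 ms"

# Assumed pattern for a dbench summary line (hypothetical, based on the lines above).
SUMMARY_RE = re.compile(
    r"Throughput\s+(?P<mbps>[\d.]+)\s+MB/sec.*?max_latency=(?P<lat>[\d.]+)\s*ms"
)


def parse_summary(line):
    """Return (throughput in MB/s, max latency in ms) from one summary line."""
    match = SUMMARY_RE.search(line)
    if match is None:
        raise ValueError(f"not a dbench summary line: {line!r}")
    return float(match.group("mbps")), float(match.group("lat"))


def compare(standard_line, disabled_line):
    """Return (throughput gain, max-latency reduction) of disabled vs. standard."""
    std_mbps, std_lat = parse_summary(standard_line)
    dis_mbps, dis_lat = parse_summary(disabled_line)
    return dis_mbps / std_mbps, std_lat / dis_lat


if __name__ == "__main__":
    speedup, latency_drop = compare(SYNC_STANDARD, SYNC_DISABLED)
    print(f"throughput:  {speedup:.2f}x higher with sync=disabled")
    print(f"max latency: {latency_drop:.2f}x lower with sync=disabled")
```

For the two runs above this works out to roughly 10x the throughput and about 4.5x lower maximum latency with sync=disabled.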