We currently run Proxmox 8.2 and PBS 3.2. A backup of 1.65TiB takes around 1 hour (which is pretty fast).

But the restore took us 14 hours, which is very long.
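To put those two durations side by side, here is a rough sketch of the effective throughput they imply. It assumes the full 1.65TiB is moved in both directions, which may not hold for the backup (deduplication or incremental transfer could mean far less data is actually sent), and `rate_mib_s` is just a helper name made up for this example.

```shell
#!/bin/sh
# Effective throughput = size / elapsed time, using the figures from this post.
size_tib=1.65      # reported backup size
backup_hours=1     # reported backup duration
restore_hours=14   # reported restore duration

# Hypothetical helper: convert "TiB moved in N hours" into MiB/s.
rate_mib_s() {
    awk -v tib="$1" -v hours="$2" \
        'BEGIN { printf "%.1f", tib * 1024 * 1024 / (hours * 3600) }'
}

backup_rate=$(rate_mib_s "$size_tib" "$backup_hours")
restore_rate=$(rate_mib_s "$size_tib" "$restore_hours")

echo "backup:  ${backup_rate} MiB/s"   # 480.6 MiB/s
echo "restore: ${restore_rate} MiB/s"  # 34.3 MiB/s
```

So the restore averages roughly 34 MiB/s over the whole run, about 14 times slower than the backup on paper.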

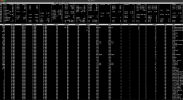

You can find an attached image of a PBS benchmark:

Machine specs:

i9-10940X CPU @ 3.30GHz

128GB DDR4 3000MT/s

4TiB P2 NVMe PCIe SSD

Upload speed is ~60MB/s

Below you can find a fio random read benchmark:

```
sudo fio --filename=/dev/nvme0n1 --direct=1 --rw=randread --bs=4k --ioengine=libaio --iodepth=256 --runtime=120 --numjobs=4 --time_based --group_reporting --name=iops-test-job --eta-newline=1 --readonly
...
iops-test-job: (groupid=0, jobs=4): err= 0: pid=9063: Mon Jun 3 10:59:15 2024
  read: IOPS=214k, BW=835MiB/s (875MB/s)(97.8GiB/120008msec)
    slat (nsec): min=1038, max=266104, avg=1849.36, stdev=703.19
    clat (usec): min=783, max=14359, avg=4788.89, stdev=1264.40
     lat (usec): min=785, max=14360, avg=4790.74, stdev=1264.49
    clat percentiles (usec):
     | 1.00th=[ 2507], 5.00th=[ 3228], 10.00th=[ 3425], 20.00th=[ 3687],
     | 30.00th=[ 4047], 40.00th=[ 4490], 50.00th=[ 4621], 60.00th=[ 4817],
     | 70.00th=[ 5014], 80.00th=[ 5342], 90.00th=[ 7046], 95.00th=[ 7439],
     | 99.00th=[ 7963], 99.50th=[ 8160], 99.90th=[ 8979], 99.95th=[ 9372],
     | 99.99th=[10290]
   bw ( KiB/s): min=566744, max=1359152, per=100.00%, avg=855669.28, stdev=34033.89, samples=956
   iops : min=141686, max=339788, avg=213917.33, stdev=8508.48, samples=956
  lat (usec) : 1000=0.01%
  lat (msec) : 2=0.17%, 4=28.91%, 10=70.91%, 20=0.02%
  cpu : usr=6.05%, sys=14.03%, ctx=15253024, majf=0, minf=1065
  IO depths : 1=0.1%, 2=0.1%, 4=0.1%, 8=0.1%, 16=0.1%, 32=0.1%, >=64=100.0%
     submit : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.0%
     complete : 0=0.0%, 4=100.0%, 8=0.0%, 16=0.0%, 32=0.0%, 64=0.0%, >=64=0.1%
     issued rwts: total=25645666,0,0,0 short=0,0,0,0 dropped=0,0,0,0
     latency : target=0, window=0, percentile=100.00%, depth=256

Run status group 0 (all jobs):
   READ: bw=835MiB/s (875MB/s), 835MiB/s-835MiB/s (875MB/s-875MB/s), io=97.8GiB (105GB), run=120008-120008msec

Disk stats (read/write):
  nvme0n1: ios=25611281/202, merge=0/118, ticks=122611533/491, in_queue=122612180, util=99.97%
```

Remote proxmox has specs like in the picture:

When running iotop, upload speed is around 6MB/s and disk read tops out at 28MB/s at best. What's the issue here?
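For comparison, a small sketch that cross-checks the fio summary (bandwidth = completed reads × block size / runtime) and relates it to the 28MB/s seen in iotop. All inputs are copied from the fio output and the iotop reading in this post. It assumes iotop's figure is in decimal MB/s, and the variable names are only illustrative.

```shell
#!/bin/sh
# Inputs taken from the fio output and the iotop observation in this post.
total_reads=25645666   # "issued rwts: total="
runtime_ms=120008      # "run=" in the READ summary line
block_bytes=4096       # --bs=4k
observed_mb_s=28       # best disk read seen in iotop (assumed decimal MB/s)

# IOPS = reads / seconds
fio_iops=$(awk -v n="$total_reads" -v ms="$runtime_ms" \
    'BEGIN { printf "%.0f", n / (ms / 1000) }')

# Bandwidth in MiB/s = reads * block size / seconds / 2^20
fio_mib_s=$(awk -v n="$total_reads" -v ms="$runtime_ms" -v bs="$block_bytes" \
    'BEGIN { printf "%.0f", n * bs / (ms / 1000) / 1048576 }')

# Observed read rate as a percentage of the benchmarked rate (both in MB/s)
pct_of_fio=$(awk -v n="$total_reads" -v ms="$runtime_ms" -v bs="$block_bytes" -v obs="$observed_mb_s" \
    'BEGIN { printf "%.1f", 100 * obs / (n * bs / (ms / 1000) / 1000000) }')

echo "fio: ${fio_iops} IOPS, ${fio_mib_s} MiB/s"
echo "iotop read rate is ${pct_of_fio}% of the fio result"
```

The numbers agree with fio's own summary (about 214k IOPS and 835MiB/s), so the disk read rate observed here is only around 3% of what the 4k random read benchmark reached on this NVMe.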