Hi all,

We are going to build a production 3-node cluster using a Lenovo DE iSCSI 10 Gb SAN.

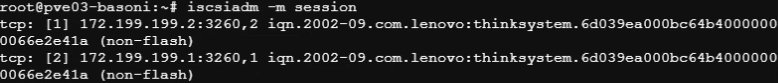

We are just getting started: the first node is configured with two 10 Gb network cards and a single LUN on the SAN. I am attaching the multipath configuration, the active paths, and the performance I get. Is anyone in the same situation, or can anyone give me some advice? All MTUs are set to 1500; changing them to 9000 has no effect. Thank you.

Here is my multipath.conf:

    blacklist {
        wwid .*
    }

    blacklist_exceptions {
        wwid "36d039ea000bc680e000000ff66fd6ed2"
    }

    multipaths {
        multipath {
            wwid "36d039ea000bc680e000000ff66fd6ed2"
            alias mpath0
        }
    }

    defaults {
        polling_interval 2
        path_selector "round-robin 0"
        path_grouping_policy multibus
        uid_attribute ID_SERIAL
        rr_min_io 100
        failback immediate
        no_path_retry queue
        user_friendly_names yes
    }

    devices {
        device {
            vendor "LENOVO"
            product "DE_Series"
            product_blacklist "Universal Xport"
            path_grouping_policy "group_by_prio"
            path_checker "rdac"
            features "2 pg_init_retries 50"
            hardware_handler "1 rdac"
            prio "rdac"
            failback immediate
            rr_weight "uniform"
            no_path_retry 30
            retain_attached_hw_handler yes
            detect_prio yes
        }
    }
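
On the MTU point: one way to check whether 9000 is really in effect end to end (NIC, switch ports, and SAN ports) is a ping with the "don't fragment" flag and the largest payload that fits in one frame. The sketch below only builds and prints that command. The address `192.0.2.10` is a placeholder, not one of our real iSCSI portals, and the helper names are made up for illustration. It assumes IPv4 and the Linux iputils `ping`.

```shell
# Largest ICMP payload that fits in a single unfragmented IPv4 packet:
# MTU minus 20 bytes (IPv4 header) minus 8 bytes (ICMP header).
icmp_payload_for_mtu() {
    echo $(( $1 - 28 ))
}

# Build (but do not run) the ping command for a given target and MTU.
# $1 = target IP (placeholder here), $2 = MTU to verify.
# -M do sets the don't-fragment flag, so an undersized hop makes the ping fail.
jumbo_ping_cmd() {
    echo "ping -M do -c 3 -s $(icmp_payload_for_mtu "$2") $1"
}

# Placeholder portal address; substitute a real iSCSI portal IP.
jumbo_ping_cmd 192.0.2.10 9000
```

If the printed command fails against a portal while the same command with the payload for MTU 1500 succeeds, some hop on that path is probably still at 1500, which would be consistent with the MTU change having no visible effect.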
