Hello, I need help with an old install of Proxmox 5.4-14.

Configuration :

HP DL380 G8, 2 x 8-core Xeon E5-2670

64 GB RAM

RAID: HP Smart Array 800

Disks:

1 x RAID1 of 2 x 300 GB SAS for Proxmox

4 x single-disk RAID0, one per 1 TB SSD (prosumer HP sd700), forming a ZFS raidz1 pool

Network: HP 570, 2 x 10 Gb fiber connected to an Aruba 1930 with SFP+

With large files, the transfer rate from a PC to the Samba share is about 100 MB/s (Windows copy).

With small files the performance is unacceptable: it is not constant and varies from 10 to 40 MB/s (Windows copy).
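
To make these numbers reproducible without relying on the Windows copy dialog, something like the following could be run from the PC against the share. This is only a rough sketch: the function name `write_throughput`, the file counts and the sizes are my own assumptions, and the demo writes to a temporary directory that would need to be replaced with the mapped Samba path.

```python
import os
import tempfile
import time


def write_throughput(target_dir, file_count, file_size):
    """Write file_count files of file_size bytes each and return MB/s."""
    # Random data, so ZFS compression (if enabled) does not flatter the result.
    payload = os.urandom(file_size)
    start = time.perf_counter()
    for i in range(file_count):
        path = os.path.join(target_dir, f"bench_{i:06d}.bin")
        with open(path, "wb") as fh:
            fh.write(payload)
            fh.flush()
            os.fsync(fh.fileno())  # ask the OS to push the data out
    elapsed = time.perf_counter() - start
    return (file_count * file_size / 1_000_000) / elapsed


if __name__ == "__main__":
    # Replace the temporary directory with the share path to test the server.
    with tempfile.TemporaryDirectory() as target:
        small = write_throughput(target, 200, 16 * 1024)
        large = write_throughput(target, 1, 32 * 1024 * 1024)
        print(f"small files: {small:.1f} MB/s")
        print(f"large file:  {large:.1f} MB/s")
```

Running the same script once against the share and once against a local disk should show whether the small-file slowdown comes from the network/Samba side or from the storage.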

The zpool is OK.

I'm going to review the server.

From what I have read in the support material, the problem seems to be in the storage area.

I am also aware of the boot problem (booting from USB) when the controller is set to HBA mode.

What do you advise for better performance?

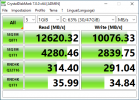

Attached is a test with a Win10 virtual machine.
