Well, depending on what the autoscaler now determines to be the optimal PG num for the pool nvmePool, it will adjust the PG num automatically, step by step.

You should see the PG num of the pool increase slowly over time. Until then, the warning may show up, but once the optimal number of PGs is reached, the PG num should be back at a power of 2.
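Why a PG num that is not a power of 2 should only be a transitional state can be sketched in a few lines. The sketch assumes Ceph's "stable mod" placement (modeled on the `ceph_stable_mod` helper in the Ceph source) and uses random 32-bit numbers in place of real object hashes. The function names `pg_mask`, `stable_mod` and `pg_sizes` are hypothetical.

```python
import random
from collections import Counter


def pg_mask(pg_num: int) -> int:
    """Smallest 2**k - 1 mask that covers every PG id below pg_num."""
    return (1 << (pg_num - 1).bit_length()) - 1


def stable_mod(x: int, b: int, bmask: int) -> int:
    """Map hash x to a PG id in [0, b), modeled on Ceph's ceph_stable_mod."""
    if (x & bmask) < b:
        return x & bmask
    # The hash points at a PG that does not exist yet: use its unsplit parent.
    return x & (bmask >> 1)


def pg_sizes(pg_num: int, n_objects: int = 200_000, seed: int = 1) -> Counter:
    """Count how many simulated objects land in each PG."""
    rng = random.Random(seed)
    mask = pg_mask(pg_num)
    return Counter(
        stable_mod(rng.getrandbits(32), pg_num, mask) for _ in range(n_objects)
    )


if __name__ == "__main__":
    sizes = pg_sizes(1536)  # halfway between 1024 and 2048
    split = sum(sizes[p] for p in range(0, 512)) / 512
    unsplit = sum(sizes[p] for p in range(512, 1024)) / 512
    print(f"avg objects in split PGs:   {split:.0f}")
    print(f"avg objects in unsplit PGs: {unsplit:.0f}")
```

With 1536 PGs, PGs 512 to 1023 have not been split yet and hold roughly twice as many objects as the others. At 1024 or 2048 PGs, all PGs end up about the same size, which is why a power of 2 is the desired end state.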

Is this the setting you mentioned in your answer? Do I have to set the min PG?

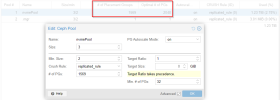

Please leave the min PG setting at the default of 32. With a target ratio, and with just the one pool, the autoscaler will know that you expect this pool to take up all the available space in the cluster and will determine the correct PG num.
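As a rough sketch of what the autoscaler computes for a single pool with a target ratio: the pool's share of the cluster (1.0 here) times the number of OSDs times a per-OSD PG target, divided by the replica size, rounded to the nearest power of 2 and never below the min PG setting. This is a simplified model of Ceph's `pg_autoscaler` module, assuming the default `mon_target_pg_per_osd` of 100, a change threshold of 3.0 and a hypothetical 48 OSDs. The real module also handles several pools sharing a CRUSH root, the `bulk` flag and bias, which are left out here.

```python
import math


def nearest_power_of_two(n: float) -> int:
    """Round n to the closer of the two surrounding powers of 2 (ties go up)."""
    if n <= 1:
        return 1
    upper = 1 << (math.ceil(n) - 1).bit_length()
    lower = upper >> 1
    return lower if (upper - n) > (n - lower) else upper


def autoscale_target(num_osds: int, size: int, target_ratio: float = 1.0,
                     target_pg_per_osd: int = 100, pg_num_min: int = 32) -> int:
    """Simplified autoscaler target for one pool owning target_ratio of the root."""
    raw = target_ratio * num_osds * target_pg_per_osd / size
    return max(pg_num_min, nearest_power_of_two(raw))


def would_adjust(current_pg_num: int, target: int, threshold: float = 3.0) -> bool:
    """Only act when the target differs from the current value by more than threshold x."""
    return target > current_pg_num * threshold or target < current_pg_num / threshold


if __name__ == "__main__":
    target = autoscale_target(num_osds=48, size=3)  # hypothetical 48 OSDs, size 3
    print(target, would_adjust(32, target))
```

With the hypothetical 48 OSDs and size 3, the raw value is 1600, which rounds to 2048, and a pool still at 32 PGs would be adjusted. The min PG value only matters for pools whose computed target would fall below it.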

Can I increase the PG num on an existing pool? It fails in the GUI. Or do I have to create a new pool?

On a replicated pool (erasure-coded pools are another possibility), you can change the PG num on a running cluster. Ceph will then rebalance the data and either split or merge PGs.
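What a split means for the data can be illustrated with a "stable mod" placement modeled on Ceph's `ceph_stable_mod`. Random numbers stand in for object hashes, and all names are hypothetical. When the PG num doubles from a power of 2, each object either stays in its PG or moves to the new child PG with id `old_id + old_pg_num`, so roughly half of the objects are moved.

```python
import random


def pg_mask(pg_num: int) -> int:
    """Smallest 2**k - 1 mask that covers every PG id below pg_num."""
    return (1 << (pg_num - 1).bit_length()) - 1


def stable_mod(x: int, b: int, bmask: int) -> int:
    """Map hash x to a PG id in [0, b), modeled on Ceph's ceph_stable_mod."""
    if (x & bmask) < b:
        return x & bmask
    return x & (bmask >> 1)


def simulate_split(old_pg_num: int, new_pg_num: int,
                   n_objects: int = 100_000, seed: int = 7) -> tuple[float, bool]:
    """Return (fraction of objects that change PG, whether every moved object
    went to PG old_id + old_pg_num). The second value is expected to be True
    when old_pg_num is a power of 2 and the PG num at most doubles."""
    rng = random.Random(seed)
    old_mask, new_mask = pg_mask(old_pg_num), pg_mask(new_pg_num)
    moved = 0
    children_as_expected = True
    for _ in range(n_objects):
        h = rng.getrandbits(32)
        before = stable_mod(h, old_pg_num, old_mask)
        after = stable_mod(h, new_pg_num, new_mask)
        if after != before:
            moved += 1
            if after != before + old_pg_num:
                children_as_expected = False
    return moved / n_objects, children_as_expected


if __name__ == "__main__":
    fraction, ok = simulate_split(1024, 2048)
    print(f"moved: {fraction:.1%}, all into child PGs: {ok}")
```

Going from 1024 to 2048 PGs moves about half of the objects, and none of them end up anywhere except in the freshly split child PGs, which keeps the rebalancing local to each parent PG.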

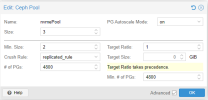

Did you get an error when trying to set it to 4800 PGs? It might be a bit too much or not a power of 2. The

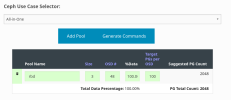

PG Calculator, thinks 2048 PGs are what works best for that number of OSDs. Therefore, the autoscaler should come to the same conclusion.
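A quick sanity check for a requested PG num could look like this. It assumes a single pool with size 3 and a hypothetical 48 OSDs (the actual OSD count is not stated in this thread) and compares the resulting PG replicas per OSD against `mon_max_pg_per_osd`, the monitor limit that defaults to 250 in recent Ceph releases. Other pools on the same OSDs count toward that limit as well, which this sketch ignores.

```python
def pgs_per_osd(pg_num: int, size: int, num_osds: int) -> float:
    """Average number of PG replicas each OSD has to carry for one pool."""
    return pg_num * size / num_osds


def check_pg_num(pg_num: int, size: int, num_osds: int,
                 max_pg_per_osd: int = 250) -> list[str]:
    """Return a list of problems with the requested pg_num (empty if none)."""
    problems = []
    if pg_num < 1 or pg_num & (pg_num - 1):
        problems.append("not a power of 2")
    per_osd = pgs_per_osd(pg_num, size, num_osds)
    if per_osd > max_pg_per_osd:
        problems.append(
            f"{per_osd:.0f} PG replicas per OSD exceeds the limit of {max_pg_per_osd}"
        )
    return problems


if __name__ == "__main__":
    for requested in (4800, 2048):  # hypothetical cluster: 48 OSDs, size 3
        print(requested, check_pg_num(requested, size=3, num_osds=48) or "ok")
```

Under these assumptions, 4800 PGs would mean 300 PG replicas per OSD, above the default limit, while 2048 PGs land at 128 per OSD.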

Yes, the hosts are overprovisioned for Ceph alone, but the same hosts also serve as virtualization hosts for around 100 VMs.

To avoid any misunderstanding here: by overprovisioned, I do not mean that the CPU is sized too large, but rather that there is too much load for the CPU, i.e. more is expected to run on it than it can handle.

You already see some CPU pressure, which means that some processes have to wait until they get CPU time. Adding more guests to the hosts will most likely only worsen the situation. That's why, when you plan a hyperconverged setup, you need to size your hosts considerably larger, with not just additional memory but also additional CPU cores.

Ignoring the threads, as hyperthreading only helps in some situations, you have 12 OSDs per node plus 1 MON, each of which should get a core, plus at least one core for Proxmox VE and its services, better two.

That means that of the 24 available cores, you are already spending 15 just to keep the cluster working. If you then hand out additional cores to VMs, the situation will get worse, depending, of course, on how CPU-intensive your guests are. If they idle almost all the time, you can overprovision the hosts quite a bit (configure more CPU cores than are physically available), but if the guests run CPU-intensive tasks, you will see the CPU pressure go up. The result can be worse performance and, in the worst case, an unstable Ceph cluster once OSDs and other services have to wait too long for CPU time.
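The core arithmetic above can be written down as a small budget. It assumes one physical core per OSD and MON daemon and two for Proxmox VE, as described. The vCPU count in the example is hypothetical, as is expressing overprovisioning as a simple ratio of configured vCPUs to the cores left over for guests.

```python
def core_budget(physical_cores: int = 24, osds: int = 12, mons: int = 1,
                pve_cores: int = 2, cores_per_daemon: int = 1) -> tuple[int, int]:
    """Return (cores reserved for Ceph and Proxmox VE, cores left for guests)."""
    reserved = (osds + mons) * cores_per_daemon + pve_cores
    return reserved, physical_cores - reserved


def vcpu_overcommit(vcpus_assigned: int, free_cores: int) -> float:
    """Ratio of vCPUs handed to guests vs. physical cores actually left for them."""
    if free_cores <= 0:
        raise ValueError("no physical cores left for guests")
    return vcpus_assigned / free_cores


if __name__ == "__main__":
    reserved, free = core_budget()
    print(f"reserved: {reserved}, left for VMs: {free}")
    # Hypothetical: 36 vCPUs configured for the guests on this node.
    print(f"overcommit: {vcpu_overcommit(36, free):.1f}x")
```

This prints 15 reserved cores and 9 left for VMs, so 36 configured vCPUs would already be a 4x overcommit on the remaining cores. Whether that is acceptable depends, as said, on how busy the guests are.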