Hey Guys,

Sorry for my English...

I'm seeing some strange behaviour here... I reused an SSD that was previously part of a ZFS RAID, formatted it, "wiped" it via the PVE GUI, and wanted to use it as LVM-Thin storage.

"Create ThinPool" via the GUI doesn't work for me: it seems to do nothing, and after a few minutes all VMs are greyed out. After that, only logging in via SSH is still possible, and only a reboot gets things working again, but the pool is not initialized correctly. So I wiped the disk again and created the pool via the console:

pvcreate /dev/sda

vgcreate vgrp1 /dev/sda

lvcreate -l 100%FREE --thinpool thpl1 vgrp1 -Zn

After that, adding the storage via the GUI works.
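
For anyone who wants to reproduce the console steps, they can be wrapped in a small dry-run script. This is only a sketch under assumptions: the helper names `run` and `create_thinpool` and the `DRY_RUN` switch are illustrative and not part of PVE or LVM. Only `pvcreate`, `vgcreate` and `lvcreate` with the exact arguments from above are real. With `DRY_RUN=1` the commands are printed, not executed.

```shell
#!/bin/sh
# Sketch: the three pool-creation steps from above as one function.
# DRY_RUN=1 only prints the commands; set it to 0 to really run them
# (this destroys any data on the given disk).
DRY_RUN=1

# Hypothetical helper: print the command in dry-run mode, else execute it.
run() {
    if [ "$DRY_RUN" = "1" ]; then
        printf '+ %s\n' "$*"
    else
        "$@"
    fi
}

# Hypothetical helper: create a PV, a VG and a thin pool using all free space.
create_thinpool() {
    disk=$1
    vg=$2
    pool=$3
    run pvcreate "$disk"
    run vgcreate "$vg" "$disk"
    # For a thin pool, -Zn turns off zeroing of newly provisioned blocks.
    run lvcreate -l 100%FREE --thinpool "$pool" "$vg" -Zn
}

create_thinpool /dev/sda vgrp1 thpl1
```

In dry-run mode this prints the same three commands listed above, each prefixed with `+`.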

BUT: when I move a VM disk (online) from "data2" to "vgrp1-thpl1", it starts at 500 MB/s for a few seconds and then drops to 1-2 MB/s.

I tested it with several VM disks, and it's the same every time...
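
To put those rates in perspective, here is a rough estimate of how long one 32G disk (the size of the VM 201 disk) would take at each speed. It is a back-of-the-envelope sketch: it assumes 32G means 32 GiB, treats MB/s as MiB/s, uses integer arithmetic, and `estimate_seconds` is just an illustrative helper name.

```shell
#!/bin/sh
# Rough copy-time estimate for one VM disk at a sustained transfer rate.
# Assumption: size in GiB, rate in MiB/s, result rounded down to seconds.
estimate_seconds() {
    size_gib=$1
    rate_mib_s=$2
    echo $(( size_gib * 1024 / rate_mib_s ))
}

echo "at 500 MB/s: $(estimate_seconds 32 500) s"
echo "at 2 MB/s:   $(estimate_seconds 32 2) s"
echo "at 1 MB/s:   $(estimate_seconds 32 1) s"
```

So a move that should finish in about a minute would take roughly 4.5 to 9 hours at the observed 1-2 MB/s.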

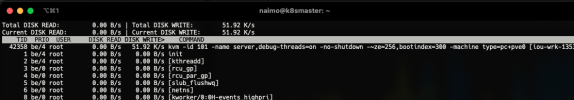

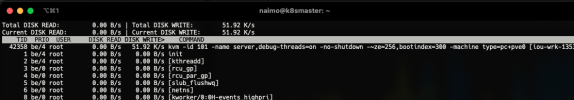

iotop while copying the disk of VM ID 101 (it's the same for ID 201 from above):

qm config 201

Code:

agent: 1
bios: seabios
boot: c
cores: 2
cpu: kvm64
description: Ubuntu Server jammy Image
hotplug: network,disk,usb
ide0: local-lvm:vm-201-cloudinit,media=cdrom,size=4M
ide2: none,media=cdrom
ipconfig0: ip=10.0.0.14/24,gw=10.0.0.254
kvm: 1
memory: 1024
meta: creation-qemu=7.2.0,ctime=1687976277
name: adguard2
net0: virtio=12:99:C9:BF:C9:1B,bridge=vmbr0
numa: 0
onboot: 1
ostype: other
scsihw: virtio-scsi-pci
smbios1: uuid=41610610-5260-4311-88f3-fba1fe8fffa9
sockets: 1
tablet: 1
unused0: data2:vm-201-disk-0
virtio0: vmimages:vm-201-disk-0,iothread=0,size=32G
vmgenid: de76adea-b72d-449a-a4fe-abefeebf91b4

lsblk

Code:

NAME                           MAJ:MIN RM   SIZE RO TYPE MOUNTPOINTS
sda                            8:0      0 931.5G  0 disk
|-vgrp1-thpl1_tmeta            252:6    0   120M  0 lvm
| `-vgrp1-thpl1-tpool          252:22   0 931.3G  0 lvm
|   |-vgrp1-thpl1              252:23   0 931.3G  1 lvm
|   |-vgrp1-vm--115--disk--0   252:24   0    32G  0 lvm
|   `-vgrp1-vm--201--disk--0   252:25   0    32G  0 lvm
`-vgrp1-thpl1_tdata            252:21   0 931.3G  0 lvm
  `-vgrp1-thpl1-tpool          252:22   0 931.3G  0 lvm
    |-vgrp1-thpl1              252:23   0 931.3G  1 lvm
    |-vgrp1-vm--115--disk--0   252:24   0    32G  0 lvm
    `-vgrp1-vm--201--disk--0   252:25   0    32G  0 lvm
sdb                            8:16     0 238.5G  0 disk
|-data2-data2_tmeta            252:4    0   2.4G  0 lvm
| `-data2-data2-tpool          252:12   0 233.6G  0 lvm
|   |-data2-data2              252:13   0 233.6G  1 lvm
|   |-data2-vm--113--disk--0   252:14   0    32G  0 lvm
|   |-data2-vm--114--disk--0   252:15   0    20G  0 lvm
|   |-data2-vm--201--disk--0   252:16   0    32G  0 lvm
|   |-data2-vm--300--cloudinit 252:17   0     4M  0 lvm
|   |-data2-vm--300--disk--0   252:18   0    32G  0 lvm
|   |-data2-vm--101--disk--0   252:19   0    50G  0 lvm
|   `-data2-vm--110--disk--0   252:20   0    50G  0 lvm
`-data2-data2_tdata            252:5    0 233.6G  0 lvm
  `-data2-data2-tpool          252:12   0 233.6G  0 lvm
    |-data2-data2              252:13   0 233.6G  1 lvm
    |-data2-vm--113--disk--0   252:14   0    32G  0 lvm
    |-data2-vm--114--disk--0   252:15   0    20G  0 lvm
    |-data2-vm--201--disk--0   252:16   0    32G  0 lvm
    |-data2-vm--300--cloudinit 252:17   0     4M  0 lvm
    |-data2-vm--300--disk--0   252:18   0    32G  0 lvm
    |-data2-vm--101--disk--0   252:19   0    50G  0 lvm
    `-data2-vm--110--disk--0   252:20   0    50G  0 lvm
sdc                            8:32     0 931.5G  0 disk
nvme0n1                        259:0    0 238.5G  0 disk
|-nvme0n1p1                    259:1    0  1007K  0 part
|-nvme0n1p2                    259:2    0   512M  0 part
`-nvme0n1p3                    259:3    0   238G  0 part
  |-pve-swap                   252:0    0     8G  0 lvm  [SWAP]
  |-pve-root                   252:1    0  69.5G  0 lvm  /
  |-pve-data_tmeta             252:2    0   1.4G  0 lvm
  | `-pve-data-tpool           252:7    0 141.6G  0 lvm
  |   |-pve-data               252:8    0 141.6G  1 lvm
  |   |-pve-vm--102--disk--0   252:9    0    32G  0 lvm
  |   |-pve-vm--110--disk--0   252:10   0    50G  0 lvm
  |   `-pve-vm--201--cloudinit 252:11   0     4M  0 lvm
  `-pve-data_tdata             252:3    0 141.6G  0 lvm
    `-pve-data-tpool           252:7    0 141.6G  0 lvm
      |-pve-data               252:8    0 141.6G  1 lvm
      |-pve-vm--102--disk--0   252:9    0    32G  0 lvm
      |-pve-vm--110--disk--0   252:10   0    50G  0 lvm
      `-pve-vm--201--cloudinit 252:11   0     4M  0 lvm

SMART Tests are PASSED

After the disk is moved, the VM is also incredibly slow.

If I create a new VM on the same storage, it runs at normal speed.

I have no idea why the move is so slow. Do you?

I'd appreciate any help or hints you can give me. If I can provide more information, please let me know.

Thanks a lot in advance.

Greets,

Benjamin
