Hi,

I have searched the forum, but nobody seems to have faced this problem. I have a small cluster with 2 nodes; on each node, the VMs are on a ZFS pool and replication is active.

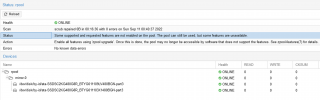

Everything works fine and live migration is fast, except for VM 500, on which snapshots were made through the GUI and later deleted. The snapshots were deleted without errors and are no longer visible in the GUI, but they still show up in a shell:

Code:

root@remus:~# zfs list

NAME USED AVAIL REFER MOUNTPOINT

rpool 264G 166G 96K /rpool

rpool/ROOT 7.05G 166G 96K /rpool/ROOT

rpool/ROOT/pve-1 7.05G 166G 7.05G /

rpool/data 257G 166G 96K /rpool/data

rpool/data/vm-500-disk-0 24.5G 166G 24.5G -

rpool/data/vm-500-state-Avant_reverse_proxy 2.41G 166G 2.41G -

rpool/data/vm-500-state-maj_solstice 2.58G 166G 2.58G -

rpool/data/vm-501-disk-0 15.0G 166G 15.0G -

rpool/data/vm-501-disk-1 151G 166G 151G -

rpool/data/vm-502-disk-0 23.5G 166G 23.5G -

rpool/data/vm-503-disk-0 12.7G 166G 12.7G -

rpool/data/vm-504-disk-0 14.6G 166G 14.6G -

rpool/data/vm-505-disk-0 10.6G 166G 10.6G -

On each migration of VM 500, the 2 snapshots (the vm-500-state-* volumes) are also copied to the other node:

Code:

2022-08-31 18:51:50 starting migration of VM 500 to node 'remus' (192.168.1.199)

2022-08-31 18:51:50 found local, replicated disk 'local-zfs:vm-500-disk-0' (in current VM config)

2022-08-31 18:51:50 found local disk 'local-zfs:vm-500-state-Avant_reverse_proxy' (via storage)

2022-08-31 18:51:50 found local disk 'local-zfs:vm-500-state-maj_solstice' (via storage)

2022-08-31 18:51:50 scsi0: start tracking writes using block-dirty-bitmap 'repl_scsi0'

2022-08-31 18:51:50 replicating disk images

2022-08-31 18:51:50 start replication job

2022-08-31 18:51:50 guest => VM 500, running => 4047041

2022-08-31 18:51:50 volumes => local-zfs:vm-500-disk-0

2022-08-31 18:51:51 freeze guest filesystem

2022-08-31 18:51:51 create snapshot '__replicate_500-0_1661964710__' on local-zfs:vm-500-disk-0

2022-08-31 18:51:51 thaw guest filesystem

2022-08-31 18:51:51 using secure transmission, rate limit: none

2022-08-31 18:51:51 incremental sync 'local-zfs:vm-500-disk-0' (__replicate_500-0_1661963411__ => __replicate_500-0_1661964710__)

2022-08-31 18:51:52 send from @__replicate_500-0_1661963411__ to rpool/data/vm-500-disk-0@__replicate_500-0_1661964710__ estimated size is 27.0M

2022-08-31 18:51:52 total estimated size is 27.0M

2022-08-31 18:51:53 successfully imported 'local-zfs:vm-500-disk-0'

2022-08-31 18:51:53 delete previous replication snapshot '__replicate_500-0_1661963411__' on local-zfs:vm-500-disk-0

2022-08-31 18:51:53 (remote_finalize_local_job) delete stale replication snapshot '__replicate_500-0_1661963411__' on local-zfs:vm-500-disk-0

2022-08-31 18:51:53 end replication job

2022-08-31 18:51:53 copying local disk images

2022-08-31 18:51:55 full send of rpool/data/vm-500-state-Avant_reverse_proxy@__replicate_500-0_1649678400__ estimated size is 3.77G

2022-08-31 18:51:55 send from @__replicate_500-0_1649678400__ to rpool/data/vm-500-state-Avant_reverse_proxy@__migration__ estimated size is 624B

2022-08-31 18:51:55 total estimated size is 3.77G

2022-08-31 18:51:56 TIME SENT SNAPSHOT rpool/data/vm-500-state-Avant_reverse_proxy@__replicate_500-0_1649678400__

2022-08-31 18:51:56 18:51:56 106M rpool/data/vm-500-state-Avant_reverse_proxy@__replicate_500-0_1649678400__

........................................cut........................................

2022-08-31 18:52:29 18:52:29 3.70G rpool/data/vm-500-state-Avant_reverse_proxy@__replicate_500-0_1649678400__

2022-08-31 18:52:30 successfully imported 'local-zfs:vm-500-state-Avant_reverse_proxy'

2022-08-31 18:52:30 volume 'local-zfs:vm-500-state-Avant_reverse_proxy' is 'local-zfs:vm-500-state-Avant_reverse_proxy' on the target

2022-08-31 18:52:31 full send of rpool/data/vm-500-state-maj_solstice@__replicate_500-0_1661961600__ estimated size is 3.85G

2022-08-31 18:52:31 send from @__replicate_500-0_1661961600__ to rpool/data/vm-500-state-maj_solstice@__migration__ estimated size is 624B

2022-08-31 18:52:31 total estimated size is 3.85G

2022-08-31 18:52:32 TIME SENT SNAPSHOT rpool/data/vm-500-state-maj_solstice@__replicate_500-0_1661961600__

2022-08-31 18:52:32 18:52:32 105M rpool/data/vm-500-state-maj_solstice@__replicate_500-0_1661961600__

........................................cut........................................

2022-08-31 18:53:06 18:53:06 3.81G rpool/data/vm-500-state-maj_solstice@__replicate_500-0_1661961600__

2022-08-31 18:53:08 successfully imported 'local-zfs:vm-500-state-maj_solstice'

2022-08-31 18:53:08 volume 'local-zfs:vm-500-state-maj_solstice' is 'local-zfs:vm-500-state-maj_solstice' on the target

2022-08-31 18:53:08 starting VM 500 on remote node 'remus'

2022-08-31 18:53:10 volume 'local-zfs:vm-500-disk-0' is 'local-zfs:vm-500-disk-0' on the target

2022-08-31 18:53:10 start remote tunnel

2022-08-31 18:53:10 ssh tunnel ver 1

2022-08-31 18:53:10 starting storage migration

2022-08-31 18:53:10 scsi0: start migration to nbd:unix:/run/qemu-server/500_nbd.migrate:exportname=drive-scsi0

drive mirror re-using dirty bitmap 'repl_scsi0'

drive mirror is starting for drive-scsi0

drive-scsi0: transferred 0.0 B of 6.4 MiB (0.00%) in 0s

drive-scsi0: transferred 6.4 MiB of 6.4 MiB (100.00%) in 1s, ready

all 'mirror' jobs are ready

2022-08-31 18:53:11 starting online/live migration on unix:/run/qemu-server/500.migrate

2022-08-31 18:53:11 set migration capabilities

2022-08-31 18:53:11 migration downtime limit: 100 ms

2022-08-31 18:53:11 migration cachesize: 512.0 MiB

2022-08-31 18:53:11 set migration parameters

2022-08-31 18:53:11 start migrate command to unix:/run/qemu-server/500.migrate

2022-08-31 18:53:12 migration active, transferred 113.3 MiB of 4.0 GiB VM-state, 113.7 MiB/s

...............................................cut...........................

2022-08-31 18:53:43 migration active, transferred 3.5 GiB of 4.0 GiB VM-state, 117.0 MiB/s

2022-08-31 18:53:44 migration active, transferred 3.6 GiB of 4.0 GiB VM-state, 121.7 MiB/s

2022-08-31 18:53:46 average migration speed: 117.5 MiB/s - downtime 98 ms

2022-08-31 18:53:46 migration status: completed

all 'mirror' jobs are ready

drive-scsi0: Completing block job_id...

drive-scsi0: Completed successfully.

drive-scsi0: mirror-job finished

2022-08-31 18:53:47 # /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=remus' root@192.168.1.199 pvesr set-state 500 \''{"local/romulus":{"last_iteration":1661964710,"last_try":1661964710,"duration":3.112224,"storeid_list":["local-zfs"],"last_node":"romulus","last_sync":1661964710,"fail_count":0}}'\'

2022-08-31 18:53:47 stopping NBD storage migration server on target.

2022-08-31 18:53:52 migration finished successfully (duration 00:02:02)

TASK OK

My question is: can I safely remove these snapshots? If so, what is the safest way?
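To make clear which volumes I mean, here is a minimal Python sketch that picks the `vm-<id>-state-*` entries out of `zfs list` output like the listing above. The function name `state_volumes` and the embedded sample text are my own, purely for illustration; the script only reads text and does not delete anything.

```python
import re

# Sample lines copied from the `zfs list` output in this post.
ZFS_LIST = """\
NAME USED AVAIL REFER MOUNTPOINT
rpool/data/vm-500-disk-0 24.5G 166G 24.5G -
rpool/data/vm-500-state-Avant_reverse_proxy 2.41G 166G 2.41G -
rpool/data/vm-500-state-maj_solstice 2.58G 166G 2.58G -
rpool/data/vm-501-disk-0 15.0G 166G 15.0G -
"""


def state_volumes(zfs_list_text, vmid):
    """Return dataset names whose last path component is vm-<vmid>-state-<name>.

    Hypothetical helper: it only inspects the first column of each line.
    """
    pattern = re.compile(rf"(?:^|/)vm-{vmid}-state-\S+$")
    found = []
    for line in zfs_list_text.splitlines():
        fields = line.split()
        if fields and pattern.search(fields[0]):
            found.append(fields[0])
    return found


if __name__ == "__main__":
    # Print the leftover state volumes for VM 500.
    for name in state_volumes(ZFS_LIST, 500):
        print(name)
```

On a real node the text would come from the actual `zfs list` output instead of the embedded sample. Whether any volume it lists is really unused still has to be checked against the VM configuration before touching it.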

Laurent LESTRADE