I'm having a difficulty similar to the one in this thread, and I'm hoping someone can help me with it.

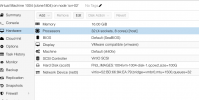

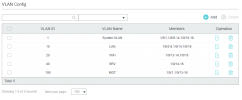

I have a cluster of Dell servers, each with 512 GB of RAM, and they are not stressed (screenshot at bottom). Each has a 1G NIC for corosync, a 10G NIC for image traffic (VLAN 101), and a 10G NIC for VM traffic (VLAN 100; config below).

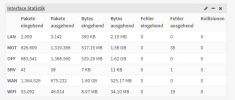

iperf results on both the host and the client are good (see below), near the 10G limit. I have upgraded to AltaFiber 2G down/1G up service, and I regularly get those speeds through my Unifi hardware. The VM uses the VirtIO network driver. In the GUI my speeds are quite low, and speedtest-cli on both the host and the VM also comes in well below the line rate: the host gets nearly 1G down and the client about half that, and both top out below 400M up. Web-based speed tests on the client are significantly worse (149 Mbps both ways). How can I improve performance?


pveversion -v

root@svr-04:~# pveversion -v

proxmox-ve: 7.3-1 (running kernel: 5.15.74-1-pve)

pve-manager: 7.3-3 (running version: 7.3-3/c3928077)

pve-kernel-5.15: 7.2-14

pve-kernel-helper: 7.2-14

pve-kernel-5.13: 7.1-9

pve-kernel-5.11: 7.0-10

pve-kernel-5.15.74-1-pve: 5.15.74-1

pve-kernel-5.15.64-1-pve: 5.15.64-1

pve-kernel-5.15.60-2-pve: 5.15.60-2

pve-kernel-5.15.60-1-pve: 5.15.60-1

pve-kernel-5.15.53-1-pve: 5.15.53-1

pve-kernel-5.15.39-4-pve: 5.15.39-4

pve-kernel-5.15.39-1-pve: 5.15.39-1

pve-kernel-5.15.35-2-pve: 5.15.35-5

pve-kernel-5.15.35-1-pve: 5.15.35-3

pve-kernel-5.15.30-2-pve: 5.15.30-3

pve-kernel-5.13.19-6-pve: 5.13.19-15

pve-kernel-5.13.19-5-pve: 5.13.19-13

pve-kernel-5.13.19-2-pve: 5.13.19-4

pve-kernel-5.11.22-7-pve: 5.11.22-12

pve-kernel-4.15: 5.4-8

pve-kernel-4.15.18-20-pve: 4.15.18-46

pve-kernel-4.15.18-12-pve: 4.15.18-36

ceph-fuse: 15.2.17-pve1

corosync: 3.1.7-pve1

criu: 3.15-1+pve-1

glusterfs-client: 9.2-1

ifupdown: residual config

ifupdown2: 3.1.0-1+pmx3

ksm-control-daemon: 1.4-1

libjs-extjs: 7.0.0-1

libknet1: 1.24-pve2

libproxmox-acme-perl: 1.4.2

libproxmox-backup-qemu0: 1.3.1-1

libpve-access-control: 7.2-5

libpve-apiclient-perl: 3.2-1

libpve-common-perl: 7.3-1

libpve-guest-common-perl: 4.2-3

libpve-http-server-perl: 4.1-5

libpve-storage-perl: 7.3-1

libqb0: 1.0.5-1

libspice-server1: 0.14.3-2.1

lvm2: 2.03.11-2.1

lxc-pve: 5.0.0-3

lxcfs: 4.0.12-pve1

novnc-pve: 1.3.0-3

proxmox-backup-client: 2.3.1-1

proxmox-backup-file-restore: 2.3.1-1

proxmox-mini-journalreader: 1.3-1

proxmox-offline-mirror-helper: 0.5.0-1

proxmox-widget-toolkit: 3.5.3

pve-cluster: 7.3-1

pve-container: 4.4-2

pve-docs: 7.3-1

pve-edk2-firmware: 3.20220526-1

pve-firewall: 4.2-7

pve-firmware: 3.5-6

pve-ha-manager: 3.5.1

pve-i18n: 2.8-1

pve-qemu-kvm: 7.1.0-4

pve-xtermjs: 4.16.0-1

qemu-server: 7.3-1

smartmontools: 7.2-pve3

spiceterm: 3.2-2

swtpm: 0.8.0~bpo11+2

vncterm: 1.7-1

zfsutils-linux: 2.1.6-pve1


Host Networking

root@svr-04:/etc/network# more interfaces

# network interface settings; autogenerated

# Please do NOT modify this file directly, unless you know what

# you're doing.

#

# If you want to manage parts of the network configuration manually,

# please utilize the 'source' or 'source-directory' directives to do

# so.

# PVE will preserve these directives, but will NOT read its network

# configuration from sourced files, so do not attempt to move any of

# the PVE managed interfaces into external files!

auto lo

iface lo inet loopback

auto enp4s0f0

iface enp4s0f0 inet manual

auto eno1

iface eno1 inet static

address 192.168.102.14/24

iface eno2 inet manual

iface eno3 inet manual

iface eno4 inet manual

auto enp4s0f1

iface enp4s0f1 inet manual

auto vmbr0

iface vmbr0 inet static

address 192.168.100.14/24

gateway 192.168.100.1

bridge-ports enp4s0f0

bridge-stp off

bridge-fd 0

auto vmbr1

iface vmbr1 inet static

address 192.168.101.14/24

bridge-ports enp4s0f1

bridge-stp off

bridge-fd 0
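For anyone mapping the test traffic onto this layout, here is a small sketch (standard-library `ipaddress` only) that checks which host interface's subnet each address from the iperf runs below falls into. The addresses are copied from this file and from the iperf output; 192.168.100.137 is the VM's DHCP address as seen in the client iperf run. The file itself contains no VLAN tags or VLAN-aware bridges, so the VLAN 100/101 split described above is presumably done on the switch ports (an assumption, not something shown here).

```python
import ipaddress

# Interface addresses copied from /etc/network/interfaces on svr-04.
HOST_INTERFACES = {
    "eno1": ipaddress.ip_interface("192.168.102.14/24"),
    "vmbr0 (bridge-ports enp4s0f0)": ipaddress.ip_interface("192.168.100.14/24"),
    "vmbr1 (bridge-ports enp4s0f1)": ipaddress.ip_interface("192.168.101.14/24"),
}

# Other addresses that appear in the iperf3 runs posted in this thread.
PEERS = {
    "iperf3 server": ipaddress.ip_address("192.168.100.39"),
    "VM 701 (DHCP)": ipaddress.ip_address("192.168.100.137"),
}


def owning_interface(addr):
    """Return the host interface whose subnet contains addr, or None."""
    for name, iface in HOST_INTERFACES.items():
        if addr in iface.network:
            return name
    return None


if __name__ == "__main__":
    for label, addr in PEERS.items():
        print(f"{label:<14} {addr!s:<16} -> {owning_interface(addr)}")
```

Run as a script, it places both the iperf3 server and the VM on vmbr0, i.e. behind the enp4s0f0 10G port, so the host and VM iperf runs share the same physical path.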


Host iperf

Connecting to host 192.168.100.39, port 5201

[ 5] local 192.168.100.14 port 35440 connected to 192.168.100.39 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 1.07 GBytes 9.22 Gbits/sec 278 1.01 MBytes

[ 5] 1.00-2.00 sec 1.08 GBytes 9.28 Gbits/sec 277 1004 KBytes

[ 5] 2.00-3.00 sec 1.08 GBytes 9.31 Gbits/sec 151 980 KBytes

[ 5] 3.00-4.00 sec 1.09 GBytes 9.32 Gbits/sec 56 983 KBytes

[ 5] 4.00-5.00 sec 1.07 GBytes 9.19 Gbits/sec 85 916 KBytes

[ 5] 5.00-6.00 sec 1.08 GBytes 9.28 Gbits/sec 27 598 KBytes

[ 5] 6.00-7.00 sec 1.08 GBytes 9.29 Gbits/sec 77 1.35 MBytes

[ 5] 7.00-8.00 sec 1.08 GBytes 9.31 Gbits/sec 61 981 KBytes

[ 5] 8.00-9.00 sec 1.09 GBytes 9.32 Gbits/sec 38 1.01 MBytes

[ 5] 9.00-10.00 sec 1.08 GBytes 9.31 Gbits/sec 10 1.01 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 10.8 GBytes 9.28 Gbits/sec 1060 sender

[ 5] 0.00-10.04 sec 10.8 GBytes 9.24 Gbits/sec receiver
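The per-second lines above can be summarised with a short parser. This sketch (standard library only) pulls the bitrate and retransmit columns out of iperf3 interval lines pasted as text; the sample is the host run from this post. It assumes the default iperf3 text layout shown here and expects interval lines only (not the summary lines), so treat it as a quick helper rather than a general-purpose parser.

```python
import re
from statistics import mean

# Interval lines from the host iperf3 run (svr-04 -> 192.168.100.39).
HOST_RUN = """\
[ 5] 0.00-1.00 sec 1.07 GBytes 9.22 Gbits/sec 278 1.01 MBytes
[ 5] 1.00-2.00 sec 1.08 GBytes 9.28 Gbits/sec 277 1004 KBytes
[ 5] 2.00-3.00 sec 1.08 GBytes 9.31 Gbits/sec 151 980 KBytes
[ 5] 3.00-4.00 sec 1.09 GBytes 9.32 Gbits/sec 56 983 KBytes
[ 5] 4.00-5.00 sec 1.07 GBytes 9.19 Gbits/sec 85 916 KBytes
[ 5] 5.00-6.00 sec 1.08 GBytes 9.28 Gbits/sec 27 598 KBytes
[ 5] 6.00-7.00 sec 1.08 GBytes 9.29 Gbits/sec 77 1.35 MBytes
[ 5] 7.00-8.00 sec 1.08 GBytes 9.31 Gbits/sec 61 981 KBytes
[ 5] 8.00-9.00 sec 1.09 GBytes 9.32 Gbits/sec 38 1.01 MBytes
[ 5] 9.00-10.00 sec 1.08 GBytes 9.31 Gbits/sec 10 1.01 MBytes
"""

# "<start>-<end> sec <transfer> <unit> <rate> Gbits/sec <retr> ..."
INTERVAL = re.compile(
    r"[\d.]+-[\d.]+\s+sec\s+[\d.]+\s+\w+\s+([\d.]+)\s+Gbits/sec\s+(\d+)"
)


def interval_stats(text):
    """Return (mean, min, max) Gbit/s and total retransmits over interval lines."""
    rates, retrs = [], []
    for m in INTERVAL.finditer(text):
        rates.append(float(m.group(1)))
        retrs.append(int(m.group(2)))
    return mean(rates), min(rates), max(rates), sum(retrs)


if __name__ == "__main__":
    avg, lo, hi, retr = interval_stats(HOST_RUN)
    print(f"mean {avg:.2f} Gbit/s, range {lo}-{hi}, retransmits {retr}")
```

For this run the interval mean is about 9.28 Gbit/s (range 9.19 to 9.32) and the retransmits add up to 1060, matching the sender summary line. Pasting the client's interval lines instead gives the same kind of summary for the VM.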


Host speedtest-cli

root@svr-04:~# speedtest-cli --server 14757

Retrieving speedtest.net configuration...

Testing from Cincinnati Bell (74.83.94.146)...

Retrieving speedtest.net server list...

Retrieving information for the selected server...

Hosted by Pineville Fiber (Pineville, NC) [564.44 km]: 29.822 ms

Testing download speed................................................................................

Download: 923.29 Mbit/s

Testing upload speed......................................................................................................

Upload: 308.68 Mbit/s


Client config (qm config 701)

root@svr-04:~# qm config 701

agent: 1

bootdisk: scsi0

cores: 4

memory: 16384

name: Tautulli

net0: virtio=66:6F:8E:08:21:17,bridge=vmbr0,firewall=1

numa: 0

ostype: l26

parent: BeforePythonFixes

scsi0: FN3_IMAGES:701/vm-701-disk-0.qcow2,discard=on,iothread=1,size=100G

scsihw: virtio-scsi-pci

smbios1: uuid=a4fec121-6a55-4fc8-9973-3fd08abfead0

sockets: 4

vga: vmware
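The VM config can also be checked programmatically. This sketch parses `qm config` output (one `key: value` per line) and splits the `net0` option string on commas, assuming the `model=MAC,opt=value,...` form shown above. It confirms what the listing says: a virtio NIC on vmbr0 with `firewall=1`, no `queues=` option, and 4 sockets × 4 cores = 16 vCPUs. The `queues` check is included only because multiqueue is one of the options Proxmox exposes for virtio NICs; whether it matters here is unverified.

```python
# Output of `qm config 701` as posted above.
QM_CONFIG = """\
agent: 1
bootdisk: scsi0
cores: 4
memory: 16384
name: Tautulli
net0: virtio=66:6F:8E:08:21:17,bridge=vmbr0,firewall=1
numa: 0
ostype: l26
parent: BeforePythonFixes
scsi0: FN3_IMAGES:701/vm-701-disk-0.qcow2,discard=on,iothread=1,size=100G
scsihw: virtio-scsi-pci
smbios1: uuid=a4fec121-6a55-4fc8-9973-3fd08abfead0
sockets: 4
vga: vmware
"""


def parse_qm_config(text):
    """Split 'key: value' lines into a dict of strings."""
    config = {}
    for line in text.splitlines():
        key, sep, value = line.partition(": ")
        if sep:
            config[key] = value
    return config


def parse_net(value):
    """Split a netN value like 'virtio=MAC,bridge=vmbr0,...' into a dict."""
    # Assumed layout: the first item is '<model>=<MAC>', the rest are key=value.
    first, *rest = value.split(",")
    model, _, mac = first.partition("=")
    options = dict(item.split("=", 1) for item in rest)
    return {"model": model, "macaddr": mac, **options}


cfg = parse_qm_config(QM_CONFIG)
net0 = parse_net(cfg["net0"])
vcpus = int(cfg["sockets"]) * int(cfg["cores"])
print(f"vCPUs: {vcpus}")
print(f"net0: model={net0['model']} bridge={net0['bridge']} "
      f"firewall={net0.get('firewall', '0')} queues={net0.get('queues', 'not set')}")
```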


Client network

bferrell@tautulli:/etc/netplan$ more 50-cloud-init.yaml

# This file is generated from information provided by

# the datasource. Changes to it will not persist across an instance.

# To disable cloud-init's network configuration capabilities, write a file

# /etc/cloud/cloud.cfg.d/99-disable-network-config.cfg with the following:

# network: {config: disabled}

network:

ethernets:

ens18:

dhcp4: true

version: 2


Client iperf (Ubuntu 22.04)

Connecting to host 192.168.100.39, port 5201

[ 5] local 192.168.100.137 port 36338 connected to 192.168.100.39 port 5201

[ ID] Interval Transfer Bitrate Retr Cwnd

[ 5] 0.00-1.00 sec 1015 MBytes 8.51 Gbits/sec 951 979 KBytes

[ 5] 1.00-2.00 sec 1.03 GBytes 8.85 Gbits/sec 7 913 KBytes

[ 5] 2.00-3.00 sec 1.05 GBytes 9.02 Gbits/sec 0 1.47 MBytes

[ 5] 3.00-4.00 sec 1005 MBytes 8.43 Gbits/sec 0 1.70 MBytes

[ 5] 4.00-5.00 sec 780 MBytes 6.54 Gbits/sec 0 1.81 MBytes

[ 5] 5.00-6.00 sec 931 MBytes 7.81 Gbits/sec 16 1.14 MBytes

[ 5] 6.00-7.00 sec 1015 MBytes 8.52 Gbits/sec 42 949 KBytes

[ 5] 7.00-8.00 sec 1004 MBytes 8.41 Gbits/sec 0 1.33 MBytes

[ 5] 8.00-9.00 sec 1024 MBytes 8.59 Gbits/sec 121 1.10 MBytes

[ 5] 9.00-10.00 sec 975 MBytes 8.18 Gbits/sec 297 1.18 MBytes

- - - - - - - - - - - - - - - - - - - - - - - - -

[ ID] Interval Transfer Bitrate Retr

[ 5] 0.00-10.00 sec 9.65 GBytes 8.29 Gbits/sec 1434 sender

[ 5] 0.00-10.04 sec 9.65 GBytes 8.26 Gbits/sec receiver
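Both iperf3 runs target the same server (192.168.100.39), so their sender summaries can be compared directly. This sketch parses the two `sender` lines posted in this thread and computes the VM's LAN throughput relative to the host's. It only looks at the summary lines, and the regular expression assumes the iperf3 text format shown here.

```python
import re

# Sender summary lines copied from the host and client iperf3 runs.
HOST_SENDER = "[ 5] 0.00-10.00 sec 10.8 GBytes 9.28 Gbits/sec 1060 sender"
CLIENT_SENDER = "[ 5] 0.00-10.00 sec 9.65 GBytes 8.29 Gbits/sec 1434 sender"

SUMMARY = re.compile(r"([\d.]+)\s+Gbits/sec\s+(\d+)\s+sender")


def sender_summary(line):
    """Return (Gbit/s, retransmits) from an iperf3 'sender' summary line."""
    m = SUMMARY.search(line)
    if m is None:
        raise ValueError(f"not an iperf3 sender summary: {line!r}")
    return float(m.group(1)), int(m.group(2))


host_rate, host_retr = sender_summary(HOST_SENDER)
client_rate, client_retr = sender_summary(CLIENT_SENDER)
print(f"VM/host LAN throughput: {client_rate / host_rate:.1%}")
print(f"retransmits: host {host_retr}, VM {client_retr}")
```

On the LAN the VM reaches about 89% of the host's rate, with more retransmits (1434 vs 1060).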


Client speedtest-cli

bferrell@tautulli:~$ speedtest-cli --server 14757

Retrieving speedtest.net configuration...

Testing from Cincinnati Bell (74.83.94.146)...

Retrieving speedtest.net server list...

Retrieving information for the selected server...

Hosted by Pineville Fiber (Pineville, NC) [564.44 km]: 30.316 ms

Testing download speed................................................................................

Download: 405.70 Mbit/s

Testing upload speed......................................................................................................

Upload: 366.38 Mbit/s
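The speedtest-cli gap between host and VM is much larger than the LAN gap. This sketch parses the `Download:` and `Upload:` lines from the two speedtest-cli runs against server 14757 and expresses each result as a fraction of the 2G/1G AltaFiber plan and of the host's own result. The plan figures come from the post; the measurements are copied from the outputs above.

```python
import re

# Result lines copied from the host and client speedtest-cli runs.
HOST_OUTPUT = """\
Download: 923.29 Mbit/s
Upload: 308.68 Mbit/s
"""
CLIENT_OUTPUT = """\
Download: 405.70 Mbit/s
Upload: 366.38 Mbit/s
"""

# Nominal plan rates from the post (2G down / 1G up), in Mbit/s.
PLAN = {"Download": 2000.0, "Upload": 1000.0}

RESULT = re.compile(r"^(Download|Upload): ([\d.]+) Mbit/s$", re.MULTILINE)


def parse_speedtest(text):
    """Map 'Download'/'Upload' to Mbit/s from speedtest-cli text output."""
    return {name: float(value) for name, value in RESULT.findall(text)}


host = parse_speedtest(HOST_OUTPUT)
client = parse_speedtest(CLIENT_OUTPUT)
for direction in ("Download", "Upload"):
    print(
        f"{direction:8} host {host[direction] / PLAN[direction]:.0%} of plan, "
        f"VM {client[direction] / PLAN[direction]:.0%} of plan, "
        f"VM/host {client[direction] / host[direction]:.0%}"
    )
```

With these numbers the VM gets about 44% of the host's download but slightly more upload than the host (about 119%), whereas on the LAN it reached about 89% of the host's iperf3 rate.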


Host Load
