I have a running Proxmox environment that I also use to run macOS.

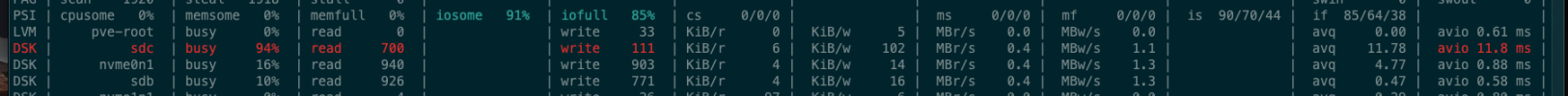

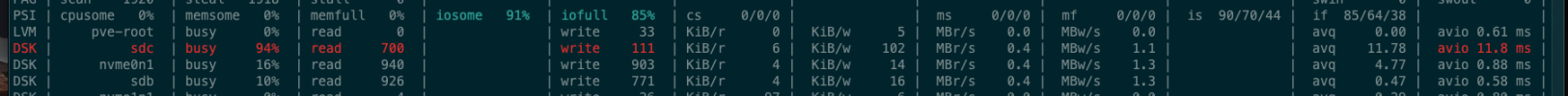

Sometimes the guest VM freezes and does not respond for several seconds. Trying to find out what happens, I found the following indication by looking at atop:

So it seems that one device of the pool gets busy. The pool layout (`zpool status` and `lsblk` output) is further below.

As you can see, sdc is a member of the pool. I know this is a mixed pool, in which I combine one NVMe disk with two SATA SSDs.

But is the "mix" the cause of the problem, or is it something else?

I am buying a third SATA SSD to rebalance the pool, but I would like to know whether the mix is indeed the cause of the problem.

```
root@proxmox:~# zpool status
  pool: fastzfs
 state: ONLINE
  scan: scrub repaired 0B in 00:22:49 with 0 errors on Sun Sep 11 00:46:50 2022
config:

        NAME                                  STATE     READ WRITE CKSUM
        fastzfs                               ONLINE       0     0     0
          raidz1-0                            ONLINE       0     0     0
            nvme-CT1000P1SSD8_1934E21B33B1    ONLINE       0     0     0
            ata-CT1000BX500SSD1_2150E5F04304  ONLINE       0     0     0
            ata-CT1000BX500SSD1_2150E5F06346  ONLINE       0     0     0
```
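The pool members above are listed by their udev `/dev/disk/by-id` names, while atop and lsblk report kernel names such as sdc. A minimal Python sketch for pairing the two, assuming the standard udev symlinks under `/dev/disk/by-id` are present; the `kernel_name` helper is hypothetical and not part of any ZFS tool:

```python
from pathlib import Path

# by-id names copied from the `zpool status` output of the pool "fastzfs"
POOL_MEMBERS = [
    "nvme-CT1000P1SSD8_1934E21B33B1",
    "ata-CT1000BX500SSD1_2150E5F04304",
    "ata-CT1000BX500SSD1_2150E5F06346",
]


def kernel_name(by_id_name: str, by_id_dir: str = "/dev/disk/by-id") -> str:
    """Follow a udev by-id symlink and return the kernel device name, e.g. 'sdc'.

    Raises FileNotFoundError if the link (or its target) does not exist.
    """
    return Path(by_id_dir, by_id_name).resolve(strict=True).name


if __name__ == "__main__":
    for member in POOL_MEMBERS:
        try:
            print(f"{member} -> {kernel_name(member)}")
        except FileNotFoundError:
            print(f"{member} -> not found in /dev/disk/by-id")
```

Run on the Proxmox host, this should show which of the two BX500 drives is sdc.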

```
root@proxmox:~# lsblk -o +FSTYPE
NAME                             MAJ:MIN RM   SIZE RO TYPE MOUNTPOINT FSTYPE
sda                              8:0      0   1.8T  0 disk            LVM2_member
├─slow_storage-vm--1500--disk--0 253:2    0   500G  0 lvm
├─slow_storage-vm--1001--disk--0 253:3    0    20G  0 lvm             ext4
└─slow_storage-vm--1201--disk--0 253:4    0     4M  0 lvm
sdb                              8:16     0 931.5G  0 disk
├─sdb1                           8:17     0 931.5G  0 part            zfs_member
└─sdb9                           8:25     0     8M  0 part
sdc                              8:32     0 931.5G  0 disk
├─sdc1                           8:33     0 931.5G  0 part            zfs_member
└─sdc9                           8:41     0     8M  0 part
sdd                              8:48     1    15G  0 disk
└─sdd1                           8:49     1    15G  0 part            BitLocker
zd0                              230:0    0   500G  0 disk
├─zd0p1                          230:1    0   200M  0 part            vfat
└─zd0p2                          230:2    0 499.8G  0 part            apfs
zd16                             230:16   0     1M  0 disk
zd32                             230:32   0     1M  0 disk
zd48                             230:48   0    32G  0 disk
├─zd48p1                         230:49   0   512M  0 part            vfat
└─zd48p2                         230:50   0  31.5G  0 part            ext4
zd64                             230:64   0     1M  0 disk
zd80                             230:80   0   110G  0 disk
├─zd80p1                         230:81   0   100M  0 part            vfat
├─zd80p2                         230:82   0    16M  0 part
├─zd80p3                         230:83   0 109.4G  0 part            ntfs
└─zd80p4                         230:84   0   516M  0 part            ntfs
zd96                             230:96   0   250G  0 disk
├─zd96p1                         230:97   0   200M  0 part            vfat
└─zd96p2                         230:98   0 249.8G  0 part            apfs
nvme0n1                          259:0    0 931.5G  0 disk
├─nvme0n1p1                      259:1    0 931.5G  0 part            zfs_member
└─nvme0n1p9                      259:2    0     8M  0 part
nvme1n1                          259:3    0 465.8G  0 disk
├─nvme1n1p1                      259:4    0  1007K  0 part
├─nvme1n1p2                      259:5    0   512M  0 part /boot/efi  vfat
└─nvme1n1p3                      259:6    0 465.3G  0 part            LVM2_member
  ├─pve-swap                     253:0    0     8G  0 lvm  [SWAP]     swap
  └─pve-root                     253:1    0 457.3G  0 lvm  /          ext4
```
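atop's per-disk busy percentage, like the `%util` column of `iostat -x`, is derived from the kernel's io_ticks counter: the time a device spent with at least one I/O in flight. A minimal Python sketch, assuming the pool members are sdb, sdc and nvme0n1 as lsblk reports them, that samples this counter for just those three devices so that a member saturating during a freeze stands out. The helper names are hypothetical, and atop's own calculation may differ in detail:

```python
import time
from pathlib import Path

# Kernel names of the pool members as listed by lsblk (assumed, see lead-in)
DEVICES = ["sdb", "sdc", "nvme0n1"]

# 0-based index of io_ticks in /sys/block/<dev>/stat: total milliseconds
# during which the device had at least one request in flight
IO_TICKS_FIELD = 9


def read_io_ticks(dev: str, sys_block: str = "/sys/block") -> int:
    fields = Path(sys_block, dev, "stat").read_text().split()
    return int(fields[IO_TICKS_FIELD])


def busy_percent(ticks_before: int, ticks_after: int, interval_ms: float) -> float:
    """Percentage of the interval the device was busy, clamped to 100."""
    if interval_ms <= 0:
        raise ValueError("interval_ms must be positive")
    return min(100.0, 100.0 * (ticks_after - ticks_before) / interval_ms)


def sample(devices, interval_s: float = 1.0, sys_block: str = "/sys/block") -> dict:
    """Busy percentage of each device over one interval of interval_s seconds."""
    before = {dev: read_io_ticks(dev, sys_block) for dev in devices}
    start = time.monotonic()
    time.sleep(interval_s)
    elapsed_ms = (time.monotonic() - start) * 1000.0
    return {
        dev: busy_percent(before[dev], read_io_ticks(dev, sys_block), elapsed_ms)
        for dev in devices
    }
```

On the host, a loop such as `while True: print(sample(DEVICES))` prints one reading per second; leaving it running until the guest freezes would show whether only sdc climbs towards 100%. Keep in mind that io_ticks only records whether any request was outstanding, so for SSDs and NVMe drives that serve many requests in parallel, 100% does not necessarily mean the device is saturated.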