Hello, new here.

I have VMware 7.0, and I've struggled to set up a two-node HA vSAN cluster with a witness node. Here is my hardware:

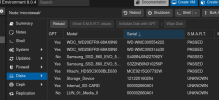

Three Lenovo dual-CPU RD440 servers with 128GB RAM each. All three have 118GB Optane drives for caching, 1TB SSDs for boot, and 8-port Avago tri-mode RAID/HBA cards. The servers hold 8 drives, but I have the boot SSDs installed where the DVD drive used to be. My two servers for VMs/vSAN each have eight 1TB SSDs, currently in RAID 5 with a hot spare. The third server has the boot drive, the Optane as mentioned, an unused 1TB SSD, and five 8TB HDDs that I plan to use with a backup VM.
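For reference, here is a rough worked calculation of the usable capacity of each VM node's RAID 5 array. It is a minimal sketch that assumes one drive's worth of parity overhead and that the hot spare sits outside the array. It ignores filesystem overhead and the TB vs TiB difference. The function name is purely illustrative.

```python
def raid5_usable_tb(drives: int, drive_tb: float, hot_spares: int = 0) -> float:
    """Approximate usable capacity of a RAID 5 array (illustrative helper).

    RAID 5 spends one member drive's worth of capacity on parity; hot spares
    are held outside the array and add no capacity until a rebuild.
    """
    members = drives - hot_spares
    if members < 3:
        raise ValueError("RAID 5 needs at least 3 member drives")
    return (members - 1) * drive_tb


# Each VM/vSAN node: eight 1TB SSDs in RAID 5 with one hot spare.
per_node = raid5_usable_tb(drives=8, drive_tb=1.0, hot_spares=1)
print(f"Usable per node: {per_node:.0f} TB")  # Usable per node: 6 TB
```

That leaves roughly 6TB per node, which is comfortably above the ~2TB of data mentioned below.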

I need to run two Windows servers and eventually an SQL server, and I want to do this on an HA cluster. My plan was for two physical servers to hold the vSAN data and act as the two nodes, and to use the third server as a witness (a monitor in Proxmox) by running the ESXi witness appliance. This would leave resources free on that server to run a backup solution, likely in a VM.

For networking, all three servers have the 1G hardware management port (IPMI, I believe), two 1G onboard NICs (currently unused), two dual-port 10G SFP+ cards, and two dual-port 10G RJ45 cards. At present, one SFP+ card in each machine is attached to a standalone L3 switch for vSAN traffic, the other SFP+ card plugs into my L3 frontend switch, and the RJ45 cards will also plug into the L3 frontend. In time I will actually move one server to another building, using existing fiber and an existing L3 switch identical to the current frontend switch. These switches are connected by a pair of fiber links and a pair of RJ45 links over Cat6a.

My goal: two Windows domain controllers, one serving a database and the other a file server. In time, this Access database's back end will be migrated to SQL. I need HA for these servers, and I would rather not buy a fourth server. My backup storage is enough for nightly, weekly, monthly and annual backups. I don't have a lot of data, around 2TB at most. Only a small portion, around 200GB, needs nightly backups, and I only need to back up changed files.

SO! Can I run two main storage nodes using Ceph or something like it and use the third server just for quorum? Can the third server then run my backup solution (I'm looking at Proxmox here as well)? Is it OK to keep my hardware RAID intact? Can I cache the storage traffic with my Optane drives? Lastly, can I set all this up on the free version for testing and then easily add subscriptions later?
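The reason a two-node design needs a third voter at all comes down to majority-quorum arithmetic. This is a minimal sketch that assumes simple one-vote-per-member strict-majority voting, of the kind cluster stacks such as corosync use. It is not any product's actual implementation, and the helper names are hypothetical.

```python
def quorum_threshold(total_votes: int) -> int:
    """Votes needed for a strict majority (more than half of all votes)."""
    return total_votes // 2 + 1


def survives_one_node_loss(total_votes: int) -> bool:
    """True if the cluster still has quorum after losing one voting member."""
    return total_votes - 1 >= quorum_threshold(total_votes)


for votes in (2, 3):
    print(votes, quorum_threshold(votes), survives_one_node_loss(votes))
```

With only two votes, losing either node drops the cluster below a majority. Adding the third server as a voter (even one holding no VM storage) lets the remaining node plus the witness keep quorum.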

In short, I am about to just ditch the $7k we spent on VMware Essentials because it's a pain in the butt: not intuitive, clumsy interface, limited hardware compatibility (my servers can't run 8.0), etc. I'd rather pay annually for Proxmox if it can make my life easier. I'm a small business that could normally run on a single server and a backup solution, but the need for a production database has arisen, and it will need to run on HA, even if it's minimal, because every part of my production process will have a PC running the database frontend for data entry to get us off a paper system. The database is built and has been beta tested. Now I just need the hardware and virtual HA solution to run it on, and I'm not impressed with VMware.

So I guess my main point is: SELL me on Proxmox! Can it do what I think it can do, and what recommendations might you experts have? I haven't even installed it yet, but some reading online and watching videos is really pushing me towards it.