What in the world does this mean?

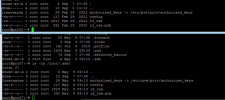

Error 500: can't activate storage 'nfs-iso' on node 'pro07':

command '/usr/bin/ssh -o 'BatchMode=yes' 10.0.0.76 -- /usr/sbin/pvesm status --storage nfs-iso' failed: exit code 255

I recently lost three guests because something happened to the storage in a cluster, so I'm a bit nervous about what seems to be happening here.

As far as I understand, when you join a node to the cluster, every node gets access to the storage set up on any other node, no?

I don't see any obvious problem in the GUI, and I can see the share and its files from the command line, so what's going on?
