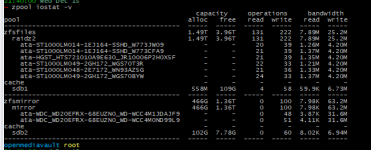

I have a zpool with three volumes in RAID-Z2, each with 7 disks.

I created the zpool with volumes that all have the same number of disks.

This zpool has a mirrored log and a cache, both on NVMe.

So you basically have a single pool of three 7-disk raidz2 vdevs striped together?

If yes, remember to change the volblocksize from the default of 8K to something like 64K or above, or you will waste about half of the total capacity due to padding overhead (assuming ashift=12, i.e. 4K sectors).
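To see where the padding overhead comes from, here is a rough Python sketch of raidz space accounting. It assumes ashift=12 (4K sectors), no compression, and the simplified rule that each block gets its data sectors plus `nparity` parity sectors per stripe row, rounded up to a multiple of `nparity + 1` sectors. The function names are made up for illustration; treat it as an estimate, not the exact ZFS code.

```python
import math
from typing import Tuple


def raidz_alloc_sectors(block_bytes: int, ndisks: int, nparity: int,
                        ashift: int = 12) -> Tuple[int, int]:
    """Estimate (data_sectors, allocated_sectors) for one block on a raidz vdev."""
    sector = 1 << ashift                             # 4096 bytes for ashift=12
    data = math.ceil(block_bytes / sector)           # sectors holding actual data
    data_cols = ndisks - nparity                     # data sectors per stripe row
    parity = nparity * math.ceil(data / data_cols)   # parity for every (partial) row
    total = data + parity
    # round up to a multiple of (nparity + 1); the extra sectors are padding
    step = nparity + 1
    total = math.ceil(total / step) * step
    return data, total


def efficiency(block_bytes: int, ndisks: int, nparity: int, ashift: int = 12) -> float:
    """Fraction of the allocated raw space that holds data."""
    data, total = raidz_alloc_sectors(block_bytes, ndisks, nparity, ashift)
    return data / total


if __name__ == "__main__":
    ndisks, nparity = 7, 2                           # one 7-disk raidz2 vdev
    ideal = (ndisks - nparity) / ndisks              # data share without any padding
    for kib in (8, 16, 32, 64, 128):
        eff = efficiency(kib * 1024, ndisks, nparity)
        print(f"volblocksize {kib:>3}K: {eff:.1%} usable (ideal {ideal:.1%})")
```

Under these assumptions an 8K block on a 7-disk raidz2 occupies 6 sectors for 2 sectors of data, while a 64K block occupies 24 sectors for 16. Compression makes blocks smaller and can bring padding back, so treat the numbers as a ballpark.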

Can I attach the same mirrored log and cache that the other zpool uses to this pool as well?

First, you don't need to mirror a cache disk. Your L2ARC is only a read cache, so if you lose it you won't lose any data. For the SLOG, a mirror can be useful in the unlikely case that your SLOG drive dies at the same time as a power outage occurs.

Second, you should consider whether an L2ARC is useful in your case at all. The ARC in RAM is faster than an L2ARC on SSD, and the more L2ARC you use, the less ARC in RAM is usable, because ZFS has to keep a header in RAM for every block stored in the L2ARC. So with an L2ARC you are basically sacrificing some very fast read cache to get more, but slower, read cache. It's often said that an L2ARC should only be used if you have already maxed out your RAM: you still need more read cache but can't add more RAM because you ran out of slots. So you should check whether an L2ARC is actually helping you, because it can also have a negative effect. If I remember right, your L2ARC shouldn't be larger than 10 times the size of your ARC.
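One way to check whether an L2ARC pays off is to look at its hit ratio. The sketch below assumes a Linux host with OpenZFS, where the ARC counters are exposed in `/proc/spl/kstat/zfs/arcstats` as `name type data` rows. It reads `l2_hits`, `l2_misses` and `c_max` (the ARC's maximum size) and applies the 10x rule of thumb mentioned above. The helper names and the `max_ratio` parameter are hypothetical.

```python
import os
from typing import Dict

# Location of the ARC counters on Linux with OpenZFS
ARCSTATS_PATH = "/proc/spl/kstat/zfs/arcstats"


def parse_arcstats(text: str) -> Dict[str, int]:
    """Turn kstat 'name type data' rows into {name: value}, skipping header lines."""
    stats: Dict[str, int] = {}
    for line in text.splitlines():
        parts = line.split()
        if len(parts) != 3:
            continue                      # the first kstat header line has more fields
        try:
            stats[parts[0]] = int(parts[2])
        except ValueError:
            continue                      # the 'name type data' column header
    return stats


def l2arc_report(stats: Dict[str, int], l2arc_bytes: int,
                 max_ratio: int = 10) -> Dict[str, object]:
    """L2ARC hit ratio plus a check of l2arc_bytes against max_ratio * ARC max size."""
    hits = stats.get("l2_hits", 0)
    misses = stats.get("l2_misses", 0)
    lookups = hits + misses
    arc_max = stats.get("c_max", 0)
    return {
        "l2_hit_ratio": hits / lookups if lookups else 0.0,
        "l2arc_to_arc_ratio": l2arc_bytes / arc_max if arc_max else 0.0,
        "within_rule_of_thumb": l2arc_bytes <= max_ratio * arc_max,
    }


if __name__ == "__main__":
    if os.path.exists(ARCSTATS_PATH):
        with open(ARCSTATS_PATH) as f:
            stats = parse_arcstats(f.read())
        # compares the current L2ARC contents (l2_size) against the rule of thumb
        print(l2arc_report(stats, l2arc_bytes=stats.get("l2_size", 0)))
    else:
        print(f"{ARCSTATS_PATH} not found (not a Linux OpenZFS host?)")
```

A low hit ratio after the cache has had time to warm up is a hint that the RAM spent on L2ARC headers might be better used as plain ARC.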

And you don't need to add complete drives as SLOG or L2ARC; you can also use partitions. A log or cache device can only belong to one pool, so to share the two SSDs you could, for example, partition both SSDs the same way via the CLI, with three partitions each: partitions one and two using 10% of the capacity each and partition three using 80%. You can then use both first partitions as a mirrored SLOG for one pool and both second partitions as a mirrored SLOG for the second pool. The third partition of one SSD (without mirroring) becomes the L2ARC for your first pool, and the third partition of the other SSD becomes the L2ARC for your second pool.
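The partition layout above, sketched in Python. It only computes the 10/10/80 split and prints the `zpool add` commands you would run afterwards; it does not partition anything. The pool names (`pool1`, `pool2`) and device paths (`/dev/nvme0n1`, `/dev/nvme1n1`) are placeholders, and the partitions have to be created first with your partitioning tool of choice.

```python
from typing import List

# Hypothetical names: adjust to your pools and NVMe devices.
POOLS = ("pool1", "pool2")
SSDS = ("/dev/nvme0n1", "/dev/nvme1n1")
SPLIT = (0.10, 0.10, 0.80)  # partitions 1, 2, 3 as fractions of each SSD


def partition_sizes_gib(ssd_gib: float, split=SPLIT) -> List[float]:
    """Size of each partition in GiB for one SSD."""
    return [round(ssd_gib * frac, 1) for frac in split]


def part(dev: str, n: int) -> str:
    # NVMe partitions are named like /dev/nvme0n1p1
    return f"{dev}p{n}"


def zpool_commands(pools=POOLS, ssds=SSDS) -> List[str]:
    a, b = ssds
    p1, p2 = pools
    return [
        # partitions 1 and 2: one mirrored SLOG per pool, split across both SSDs
        f"zpool add {p1} log mirror {part(a, 1)} {part(b, 1)}",
        f"zpool add {p2} log mirror {part(a, 2)} {part(b, 2)}",
        # partition 3: one unmirrored L2ARC per pool, one on each SSD
        f"zpool add {p1} cache {part(a, 3)}",
        f"zpool add {p2} cache {part(b, 3)}",
    ]


if __name__ == "__main__":
    print("partition sizes (GiB):", partition_sizes_gib(1000))
    for cmd in zpool_commands():
        print(cmd)
```

Each SLOG mirror spans both SSDs, so a single SSD failure leaves every pool with a working log device, while each L2ARC sits on one SSD only since losing a read cache is harmless.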

But if you don't have a lot of sync writes, I guess using the SSDs as a special device would make more sense. In that case a three-way mirror of SSDs would be best, so that it matches the reliability of your raidz2 HDDs (both survive the loss of any two disks). The special device holds the pool's metadata, so if you lose it, all data on all HDDs is lost; good redundancy is important.
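A small Python sketch of the redundancy argument: it counts how many disk failures each vdev survives (n-1 for an n-way mirror, the parity level for raidz) and takes the minimum, since losing any single vdev, including the special vdev, loses the whole pool. The vdev descriptions and the pool name `tank` in the printed command are hypothetical.

```python
from typing import List, Tuple


def tolerated_failures(kind: str, width: int) -> int:
    """Number of disks a single vdev can lose without losing data."""
    if kind == "mirror":
        return width - 1
    if kind.startswith("raidz"):
        # "raidz"/"raidz1" -> 1, "raidz2" -> 2, "raidz3" -> 3
        return int(kind[len("raidz"):] or 1)
    if kind == "disk":
        return 0
    raise ValueError(f"unknown vdev type: {kind}")


def pool_tolerance(vdevs: List[Tuple[str, int]]) -> int:
    """Failures the pool is guaranteed to survive: limited by its weakest vdev."""
    return min(tolerated_failures(kind, width) for kind, width in vdevs)


if __name__ == "__main__":
    hdds = [("raidz2", 7)] * 3                     # three 7-disk raidz2 vdevs
    for special in (("mirror", 2), ("mirror", 3)):
        print(f"special {special}: pool survives {pool_tolerance(hdds + [special])} failures")
    # hypothetical pool and device names
    print("zpool add tank special mirror /dev/nvme0n1 /dev/nvme1n1 /dev/nvme2n1")
```

With a 2-way special mirror the pool drops to surviving only one disk failure; the 3-way mirror keeps it at two, the same as the raidz2 vdevs.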

If not, how can I use these two HDDs?

You could use one of them as a hot spare for your pool and keep the other one nearby, so you already have one if you need to replace a disk. If one of your drives fails and there is a hot spare, the pool will start resilvering, using that hot spare disk as a temporary replacement for the failed disk. You then replace the failed disk with a new one (which you already have lying around); once the pool has resilvered onto the new disk, the hot spare is released and goes back to being a hot spare, waiting for the next disk to die.
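The hot spare routine above, written out as the `zpool` commands involved and wrapped in a tiny Python helper. The pool name and device paths are placeholders, and it assumes your system activates the spare automatically when a disk faults.

```python
from typing import List


def spare_workflow(pool: str, spare: str, failed: str, new: str) -> List[str]:
    """zpool commands for the hot spare routine (placeholder names only)."""
    return [
        f"zpool add {pool} spare {spare}",       # once: register the spare disk
        f"zpool status {pool}",                  # after a failure: the spare is listed as INUSE
        f"zpool replace {pool} {failed} {new}",  # swap in the new disk; spare returns to AVAIL after resilver
    ]


if __name__ == "__main__":
    # hypothetical pool and device names
    for cmd in spare_workflow("tank", "/dev/sdx", "/dev/sdc", "/dev/sdy"):
        print(cmd)
```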