Hi,

So I recently separated out a dedicated storage network on VLAN 99 so that it does not need to go through my pfSense box. Performance is good: I get around 9.3 Gbit/s from a VM to my TrueNAS host. When going from one VM to another VM, however, I only get around 6-7 Gbit/s.

I thought I might get a bit more performance by enabling jumbo frames on that storage network only. I tried setting the MTU of VLAN 99 to 9000 in my config, but it seems I also have to set that value on both physical interfaces, the bond, and the bridge for it to work, as seen in the example here: https://pve.proxmox.com/wiki/Open_vSwitch#Example_2:_Bond_+_Bridge_+_Internal_Ports
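For a rough sense of what jumbo frames can gain on a 10 Gbit/s link, the usual back-of-the-envelope calculation compares the TCP payload per frame with the bytes each frame occupies on the wire. This is a minimal sketch assuming IPv4, TCP with the timestamp option (20 + 20 + 12 bytes of headers) and standard Ethernet framing overhead (preamble/SFD 8, header 14, FCS 4, inter-frame gap 12 bytes); it ignores 802.1Q tags, which add 4 bytes per tagged frame.

```python
# Rough TCP goodput ceiling on a 10 Gbit/s Ethernet link for a given MTU.
# Assumptions: IPv4 (20 B), TCP with timestamps (20 + 12 B), no VLAN tag.
ETH_WIRE_OVERHEAD = 8 + 14 + 4 + 12  # preamble+SFD, Ethernet header, FCS, inter-frame gap
IP_TCP_HEADERS = 20 + 20 + 12        # IPv4 header, TCP header, timestamp option


def max_goodput_gbps(mtu: int, line_rate_gbps: float = 10.0) -> float:
    """Upper bound on TCP payload throughput in one direction of the link."""
    payload = mtu - IP_TCP_HEADERS     # TCP payload bytes carried per frame
    on_wire = mtu + ETH_WIRE_OVERHEAD  # bytes each frame occupies on the wire
    return line_rate_gbps * payload / on_wire


if __name__ == "__main__":
    for mtu in (1500, 9000):
        print(f"MTU {mtu}: ~{max_goodput_gbps(mtu):.2f} Gbit/s")
```

Under these assumptions it prints about 9.41 Gbit/s for MTU 1500 and 9.90 Gbit/s for MTU 9000, so the measured 9.3 Gbit/s to TrueNAS is already close to the 1500-byte ceiling. The model only covers traffic that actually crosses the physical link; VM-to-VM traffic that stays inside one host's bridge never hits the wire.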

My question now is how that would affect the VMs on my other VLANs. I did not explicitly set an MTU on their virtio network adapters, so they are still using the default MTU of 1500. Will it stay that way, or will they automatically switch over to an MTU of 9000 as well? Do I need to explicitly set an MTU of 1500 on those VMs?
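Whichever way the bridge ends up configured, a common way to check which MTU a given path actually carries is a ping with fragmentation prohibited, sized so that the whole IPv4 packet exactly fills the MTU (IPv4 header 20 bytes + ICMP header 8 bytes). A minimal sketch that only builds the iputils `ping` command line (`-M do` prohibits fragmentation, `-s` sets the ICMP payload size); the target address is a hypothetical placeholder, not one from this setup.

```python
import shlex

IPV4_HEADER = 20  # bytes, no IP options
ICMP_HEADER = 8   # bytes, ICMP echo header


def df_ping_payload(mtu: int) -> int:
    """ICMP payload size that makes the IPv4 packet exactly `mtu` bytes long."""
    return mtu - IPV4_HEADER - ICMP_HEADER


def df_ping_argv(host: str, mtu: int, count: int = 3) -> list[str]:
    """Build an iputils ping command with the Don't Fragment bit set (-M do)."""
    return ["ping", "-M", "do", "-s", str(df_ping_payload(mtu)), "-c", str(count), host]


if __name__ == "__main__":
    # 192.168.99.20 is a hypothetical address on the storage VLAN.
    for mtu in (9000, 1500):
        print(shlex.join(df_ping_argv("192.168.99.20", mtu)))
```

If the 8972-byte ping fails (an error or no replies) while the 1472-byte one succeeds, some hop on that path is still at MTU 1500. Running the same pair of tests against hosts on the other VLANs shows whether those stayed at 1500.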

After configuring this and setting an MTU on a virtio NIC in Proxmox, do I also need to configure the MTU inside the VM itself, or will it just work? Most of my VMs run Debian 12 configured with cloud-init, plus one Ubuntu 24.04 Server VM for a media server.
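To see which MTU a guest actually ended up with (for example before and after changing the virtio NIC's MTU in Proxmox), the Linux kernel exposes each interface's MTU under `/sys/class/net/<iface>/mtu`. A minimal sketch to run inside a Linux VM such as the Debian 12 or Ubuntu 24.04 guests; the `sysfs_root` parameter is a hypothetical convenience so the function can be pointed at a test directory.

```python
from pathlib import Path


def interface_mtus(sysfs_root: str = "/sys/class/net") -> dict[str, int]:
    """Map each network interface name to its current MTU, as read from sysfs."""
    mtus: dict[str, int] = {}
    for iface_dir in sorted(Path(sysfs_root).iterdir()):
        mtu_file = iface_dir / "mtu"
        # Skip plain files such as 'bonding_masters' that are not interfaces.
        if mtu_file.is_file():
            mtus[iface_dir.name] = int(mtu_file.read_text().strip())
    return mtus


if __name__ == "__main__":
    for name, mtu in interface_mtus().items():
        print(f"{name}: {mtu}")
```

Comparing the output before and after a change on the Proxmox side shows whether the guest picked up the new value or still needs it configured on its own side.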

I hope you can help me out here; I kind of don't want to bork my network doing this.

For reference, here is my hardware:

- pfSense on N100 Mini PC with dual 10G SFP+

- Ubiquiti USW-Aggregation Switch

- TrueNAS with Supermicro A2SDI-8C with dual port Intel X520-DA2 10G SFP+ NIC

- PVE on MS-01 with dual X710 SFP+ 10G NICs

- Cables are DAC cables from FS.com

My current interface configuration:

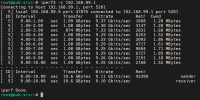

iperf3 from VM to TrueNAS:

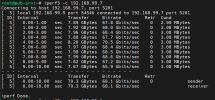

iperf3 from VM to another VM:

My current interface configuration:

```
# network interface settings; autogenerated
# Please do NOT modify this file directly, unless you know what
# you're doing.
#
# If you want to manage parts of the network configuration manually,
# please utilize the 'source' or 'source-directory' directives to do
# so.
# PVE will preserve these directives, but will NOT read its network
# configuration from sourced files, so do not attempt to move any of
# the PVE managed interfaces into external files!

auto lo
iface lo inet loopback

auto enp87s0
iface enp87s0 inet manual

auto enp2s0f0np0
iface enp2s0f0np0 inet manual

auto enp2s0f1np1
iface enp2s0f1np1 inet manual

auto enp90s0
iface enp90s0 inet manual

auto vlan10
iface vlan10 inet static
    address 192.168.10.10/24
    gateway 192.168.10.254
    ovs_type OVSIntPort
    ovs_bridge vmbr0
    ovs_options tag=10
#MGMT

auto vlan99
iface vlan99 inet static
    address 192.168.99.4/24
    ovs_type OVSIntPort
    ovs_bridge vmbr0
    ovs_options tag=99
#STORAGE

auto bond0
iface bond0 inet manual
    ovs_bonds enp2s0f0np0 enp2s0f1np1
    ovs_type OVSBond
    ovs_bridge vmbr0
    ovs_options lacp=active bond_mode=balance-tcp

auto vmbr0
iface vmbr0 inet manual
    ovs_type OVSBridge
    ovs_ports bond0 vlan10 vlan99

source /etc/network/interfaces.d/*
```
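Since the jumbo-frame change has to be consistent along the whole chain (physical NICs, bond, bridge, and the VLAN 99 internal port), one quick way to review an edited file is to list every `iface` stanza with the MTU it sets. A minimal sketch, assuming the MTU appears as either an `mtu` or `ovs_mtu` line inside the stanza; the keyword handling is an assumption, not a full ifupdown2 parser, and `None` means the file sets no MTU for that interface.

```python
import sys
from typing import Optional

# Assumption: these are the keywords used for MTU in the interfaces file.
MTU_KEYWORDS = ("mtu", "ovs_mtu")


def stanza_mtus(text: str) -> dict[str, Optional[int]]:
    """Return {interface: MTU or None} for every 'iface' stanza in the text."""
    mtus: dict[str, Optional[int]] = {}
    current: Optional[str] = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments do not end a stanza
        words = line.split()
        if words[0] == "iface" and len(words) > 1:
            current = words[1]
            mtus[current] = None
        elif words[0] == "auto" or words[0].startswith(("allow-", "source")):
            current = None  # top-level directive, outside any stanza
        elif current is not None and words[0] in MTU_KEYWORDS and len(words) > 1:
            mtus[current] = int(words[1])
    return mtus


if __name__ == "__main__":
    path = sys.argv[1] if len(sys.argv) > 1 else "/etc/network/interfaces"
    with open(path) as f:
        for name, mtu in stanza_mtus(f.read()).items():
            print(f"{name}: {mtu if mtu is not None else 'not set'}")
```

Against the current file every stanza reports "not set". For the change described above, the aim would be 9000 on `enp2s0f0np0`, `enp2s0f1np1`, `bond0`, `vmbr0` and `vlan99`, with `vlan10` left unset.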
