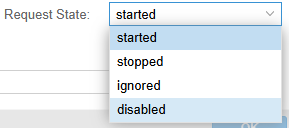

I was trying to stop one of my containers:


```
root@minipc3:/etc/pve/nodes# pct stop 4090
Requesting HA stop for CT 4090
service 'ct:4090' in error state, must be disabled and fixed first
command 'ha-manager crm-command stop ct:4090 0' failed: exit code 255
```

How do I disable an LXC in Proxmox?

I tried googling it and searched the Proxmox documentation, the `pct help` output, and the forums here, but nothing explicitly came up for this. Your help is greatly appreciated.

(As a sidebar: I am also getting the following error, which seems to be connected to the error above:

```
root@minipc3:/etc/pve/nodes# pct delsnapshot 4090 vzdump
rbd error: error setting snapshot context: (2) No such file or directory
```

I'm not really sure how to fix this either.)
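For reference, the state of each snapshot is recorded in the container config itself. The following is a minimal Python sketch, not a Proxmox tool: the helper `snapshot_states` is hypothetical, and it assumes the plain-text layout of the config posted below (`key: value` lines, with each snapshot starting at a `[name]` header). It lists every snapshot section together with its `snapstate`, which makes a leftover entry such as `[vzdump]` with `snapstate: delete` easy to spot and compare against `rbd snap ls`.

```python
from __future__ import annotations

import re

# A snapshot section starts with a header line such as "[vzdump]".
SECTION_RE = re.compile(r"^\[(?P<name>[^\]]+)\]$")


def snapshot_states(config_text: str) -> dict[str, str | None]:
    """Return {snapshot name: snapstate value, or None if the section has none}.

    Hypothetical helper. Assumes the plain-text guest config layout:
    'key: value' lines, where each snapshot begins at a '[name]' header.
    """
    states: dict[str, str | None] = {}
    current: str | None = None  # None while still in the main (current) config
    for raw in config_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            # Skip blank lines and description lines like "#vzdump backup snapshot".
            continue
        header = SECTION_RE.match(line)
        if header:
            current = header.group("name")
            states[current] = None
            continue
        if current is None:
            continue  # ignore keys that belong to the main config section
        key, _, value = line.partition(":")
        if key.strip() == "snapstate":
            states[current] = value.strip()
    return states


# Trimmed-down excerpt of the config posted below.
sample = """\
arch: amd64
parent: vzdump
rootfs: ceph-erasure:vm-4090-disk-0,size=300G

[vzdump]
#vzdump backup snapshot
snapstate: delete
snaptime: 1749357173
"""

print(snapshot_states(sample))  # {'vzdump': 'delete'}
```

Run against the full config below, it would report `vzdump` in the `delete` state, while the `rbd snap ls` output further down lists no snapshots on the image at all.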

This is part of a three-node Proxmox HA cluster.

Here is the LXC config:

Code:

arch: amd64

features: mount=nfs,nesting=1

hostname: proxmox-gtr5-plex

memory: 2048

nameserver: x.x.x.x,x.x.x.x

net0: name=eth0,bridge=vmbr2,firewall=1,gw=x.x.x.x,,hwaddr=06:AE:B4:E5:91:E0,ip=x.x.x.x/24,type=veth

onboot: 1

ostype: ubuntu

parent: vzdump

rootfs: ceph-erasure:vm-4090-disk-0,size=300G

searchdomain: local.home

startup: order=3,up=5

swap: 256

tags: gpu;ubuntu

lxc.cgroup.devices.allow: c 195:* rwm

lxc.cgroup.devices.allow: c 509:* rwm

lxc.cgroup.devices.allow: c 226:* rwm

lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file

lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file

lxc.mount.entry: /dev/nvram dev/nvram none bind,optional,create=file

[vzdump]

#vzdump backup snapshot

arch: amd64

features: mount=nfs,nesting=1

hostname: proxmox-gtr5-plex

memory: 2048

nameserver: x.x.x.x,x.x.x.x

net0: name=eth0,bridge=vmbr2,firewall=1,gw=x.x.x.x,hwaddr=06:AE:B4:E5:91:E0,ip=x.x.x.x/24,type=veth

onboot: 1

ostype: ubuntu

rootfs: ceph-erasure:vm-4090-disk-0,size=300G

searchdomain: local.home

snapstate: delete

snaptime: 1749357173

startup: order=3,up=5

swap: 256

tags: gpu;ubuntu

lxc.cgroup.devices.allow: c 195:* rwm

lxc.cgroup.devices.allow: c 509:* rwm

lxc.cgroup.devices.allow: c 226:* rwm

lxc.mount.entry: /dev/nvidia0 dev/nvidia0 none bind,optional,create=file

lxc.mount.entry: /dev/nvidiactl dev/nvidiactl none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-modeset dev/nvidia-modeset none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm dev/nvidia-uvm none bind,optional,create=file

lxc.mount.entry: /dev/nvidia-uvm-tools dev/nvidia-uvm-tools none bind,optional,create=file

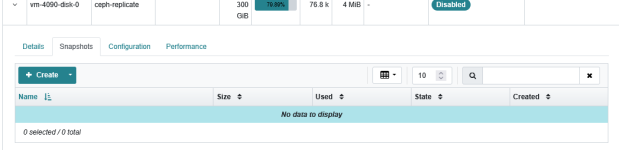

lxc.mount.entry: /dev/nvram dev/nvram none bind,optional,create=fileHere is the output for

rbd snap ls:

```
root@minipc3:/etc/pve/nodes/minipc3/lxc# rbd snap ls ceph-replicate/vm-4090-disk-0
root@minipc3:/etc/pve/nodes/minipc3/lxc#
```

Here is `cat /etc/pve/storage.cfg`:

```
dir: local
    path /var/lib/vz
    content iso,vztmpl
    shared 0

lvmthin: local-lvm
    thinpool data
    vgname pve
    content rootdir,images

cephfs: cephfs
    path /mnt/pve/cephfs
    content snippets,vztmpl,iso,backup
    fs-name cephfs
    prune-backups keep-all=1

rbd: ceph-replicate
    content rootdir,images
    krbd 1
    pool ceph-replicate

rbd: ceph-erasure
    content rootdir,images
    data-pool ceph-erasure
    krbd 1
    pool ceph-replicate

pbs: debian-pbs
    datastore debian-pbs
    server x
    content backup
    fingerprint x
    namespace x
    prune-backups keep-all=1
    username x

pbs: debian-pbs-6700k-pve
    datastore debian-pbs
    server x.x.x.x
    content backup
    fingerprint x
    namespace x
    prune-backups keep-all=1
    username x
```

And here is `cat /etc/pve/ceph.conf`:

```
[global]
    auth_client_required = cephx
    auth_cluster_required = cephx
    auth_service_required = cephx
    cluster_network = x
    fsid = 85daf8e9-198d-4ca5-86c6-cc0e9186a491
    mon_allow_pool_delete = true
    mon_host = x
    ms_bind_ipv4 = true
    ms_bind_ipv6 = false
    osd_pool_default_min_size = 2
    osd_pool_default_size = 3
    public_network = x

[client]
    keyring = /etc/pve/priv/$cluster.$name.keyring

[client.crash]
    keyring = /etc/pve/ceph/$cluster.$name.keyring

[mds]
    keyring = /var/lib/ceph/mds/ceph-$id/keyring

[mds.minipc1]
    host = minipc1
    mds_standby_for_name = pve

[mds.minipc2]
    host = minipc2
    mds_standby_for_name = pve

[mds.minipc3]
    host = minipc3
    mds_standby_for_name = pve

[mon.minipc1]
    public_addr = x

[mon.minipc2]
    public_addr = x

[mon.minipc3]
    public_addr = x
```

Your help is greatly appreciated.

Thank you.