Hi All,

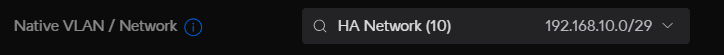

I have a 2-node cluster. I have added a second 1G NIC to each node and created a bond, and I have also created a separate VLAN for high-availability traffic.

When trying to migrate a container, I get the following error:

# /usr/bin/ssh -e none -o 'BatchMode=yes' -o 'HostKeyAlias=TEST-NODE01' -o 'UserKnownHostsFile=/etc/pve/nodes/TEST-NODE01/ssh_known_hosts' -o 'GlobalKnownHostsFile=none' root@10.100.1.2 /bin/true

ssh: connect to host 10.100.1.2 port 22: No route to host

ERROR: migration aborted (duration 00:00:03): Can't connect to destination address using public key

TASK ERROR: migration aborted

I am not sure where to start troubleshooting this issue.
