@Tmanok

My first priority is to stabilize the setup; anything else can come after that. I'm not against learning, but I'm more of a software guy. Infrastructure and DevOps are an add-on for me rather than a passion.

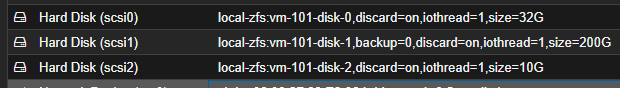

Right now I have a corrupted ZFS pool and can't perform backups properly, which presents a new use case for my single-disk backup problem.

Generally, I separate content from executable code, mainly so that I only need to back up content. My recovery usually involves reinstallation, since getting rid of malware/viruses is also one of my goals.

The second use case came up when CentOS 8 had its life cut short. That was when I had to move my emails to Ubuntu, and having the mail stored on a separate disk made that very simple.

Now, with this bit rot problem, I can afford to lose a few emails here and there, but recovery has to include reinstalling the OS to ensure no executable has rotted. Here too, the separation makes the operation easy.

That said, I still keep daily backups of the VMs themselves (without the content) as a convenience.

I can also confirm that in PVE 7.4, disks set to `backup=no` are detached on restore, but not wiped.

Likewise, disks passed through directly using `qm set xxx -scsi...` are detached and left untouched, i.e. not wiped.
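To see which disks of a VM fall into these two categories before restoring, one option is to read the output of `qm config <vmid>`. The Python below is only a sketch under stated assumptions: `classify_disks` and the sample config are hypothetical, and it assumes the usual `key: volume,option=value,...` line format, with `backup=0`/`no`/`off`/`false` marking a disk as excluded from backup and a volume starting with `/dev/` marking a directly passed-through disk. It only classifies disks; it does not predict what a given restore will actually do.

```python
import re

# Disk keys in a Proxmox VM config, e.g. scsi0, virtio1, sata2, ide3.
# "scsihw" is not matched because the key must end in digits.
DISK_KEY = re.compile(r"^(scsi|virtio|sata|ide)\d+$")
# Assumption: these spellings all mean "false" for the backup option.
FALSE_VALUES = {"0", "no", "off", "false"}


def classify_disks(config_text):
    """Hypothetical helper: return (excluded, passthrough) disk keys.

    excluded    -> disks whose options carry backup=0 (or no/off/false)
    passthrough -> disks whose volume is a raw /dev/... path
    """
    excluded, passthrough = [], []
    for line in config_text.splitlines():
        # Split only at the first colon; storage volumes contain colons too.
        key, sep, value = line.partition(":")
        key = key.strip()
        if not sep or not DISK_KEY.match(key):
            continue
        volume, *options = value.strip().split(",")
        opts = dict(o.split("=", 1) for o in options if "=" in o)
        # A disk without a backup option is included by default.
        if opts.get("backup", "1").lower() in FALSE_VALUES:
            excluded.append(key)
        if volume.startswith("/dev/"):
            passthrough.append(key)
    return excluded, passthrough


# Illustrative config, not taken from a real VM.
sample = """boot: order=scsi0
scsihw: virtio-scsi-pci
scsi0: local-zfs:vm-100-disk-0,size=32G
scsi1: local-zfs:vm-100-disk-1,backup=0,size=200G
scsi2: /dev/disk/by-id/ata-EXAMPLE-SERIAL,size=1T
"""

print(classify_disks(sample))
```

For the sample above this prints `(['scsi1'], ['scsi2'])`: the content disk excluded from backup and the passed-through disk, i.e. the two kinds of disks described above as surviving a restore.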

I'll try out your steps once I've extracted my emails from the corrupted ZFS pool. Thank you.