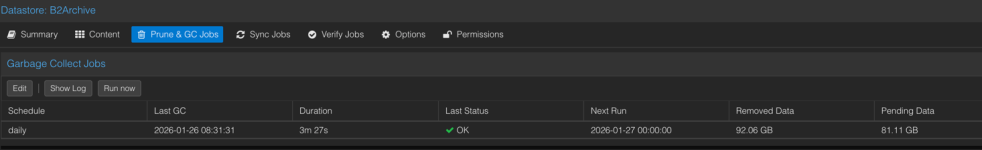

Hey all. I have been testing an S3 (Backblaze B2) bucket as an archive target on PBS 4.x.

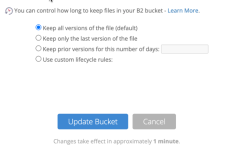

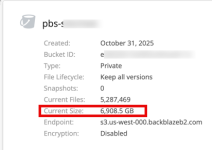

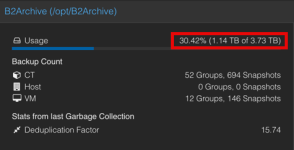

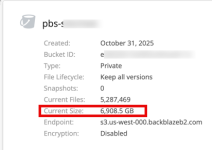

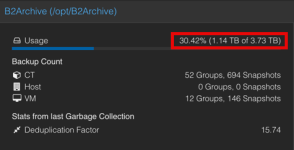

I've set up a sync to it with basic retention of only about 14 days. The odd thing is that the local datastore shows about 1 TB in use, but the bucket shows roughly 7x that.

I suspect files are not being deleted properly during the prune cycle. Since this is still a tech preview, I wasn't sure whether I should officially log it as a bug. It caught my attention when the storage cost jumped. I'm happy to log it in Bugzilla, but in the meantime I need to figure out how to get the bucket usage back down and more in line with the local size.

For the record, I've seen a similar issue with other backup products in their early S3 support before GA, so it's not uncommon.